Unreal Engine continues to develop as new code is added and previously written code is changed. What is the inevitable consequence of ongoing development in a project? The emergence of new bugs in the code that a programmer wants to identify as early as possible. One of the ways to reduce the number of errors is the use of a static analyzer like PVS-Studio. Moreover, the analyzer is not only evolving, but also constantly learning to look for new error patterns, some of which we will discuss in this article. If you care about code quality, this article is for you.

This article was prepared by Andrey Karpov; the code fragments were provided by Ilya Ivanov and Sergey Vasiliev from the PVS-Studio team. This article was originally published at Unreal Engine Blog.

Static code analysis is the process of detecting errors and flaws in the source code of programs. Static analysis can be seen as the process of automated code review. Let's speak about code review in more detail.

Code review is one of the oldest and most useful methods of detecting defects. It involves jointly reading the source code and giving recommendations on how to improve it. This process helps detect errors, or code fragments that may become erroneous in the future. There is also an unwritten rule that the author of the code should not explain how a certain part of the program works. The algorithm should be clear just from the text of the program and the comments in the code. If it is not, the code should be modified.

As a rule, code review works quite well, as programmers notice errors in someone else's code much more easily than in their own. You can find more details on the methodology of code review in Steve McConnell's great book, "Code Complete".

The methodology of code review has two disadvantages:

On the one hand, one wants to perform code review regularly. On the other hand, it is too expensive. The compromise is static analysis. Static analysis tools check the source code of programs and recommend that programmers review certain code fragments. The analyzers don't get tired, and they check all the code affected by changes in header files. Of course, a program can't replace a full-fledged code review done by a team of developers. However, the benefit-to-cost ratio makes static analysis a useful method, adopted by many companies.

As with any other methodology of error detection, static analysis has its strengths and weaknesses. There is no ideal method of testing programs. The best results can be achieved using a combination of various approaches, such as: a good coding style, static code analysis, dynamic code analysis, unit-testing, regression testing, and so on.

An important advantage of static analysis is the ability to detect many errors right after they appear in the code, which means that fixing them won't cost much.

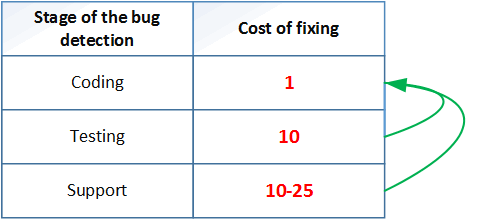

The thing is that the earlier an error is detected, the less expensive it is to correct it. Thus, according to the book "Code Complete" by McConnell, correction of an error at the stage of testing the code is ten times more expensive than at the stage of writing the code:

Table 1. Figure 7 - Average costs of correcting defects depending upon the time of their detection (the data presented in the table are taken from the book 'Code Complete' by S. McConnell)

Static analysis tools detect a large number of the errors typical of the code-writing stage, which significantly reduces the development cost of the whole project.

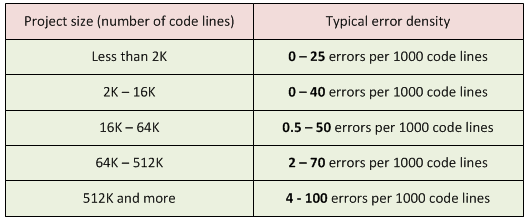

The relevance of static analyzers will grow over time. This is due to the constant growth of the codebase of modern applications. Programs are becoming larger and more complicated. At the same time, the density of errors depends on code size nonlinearly.

The larger the project, the more errors per 1000 lines of code it contains. Take a look at this table:

Table 2. The size of the project and typical density of errors. Source: "Program Quality and Programmer Productivity" (Jones, 1977), "Estimating Software Costs" (Jones, 1998).

Let's plot the data so that it is easier to understand.

Graph 1. Typical density of errors in the project. Blue - maximum quantity. Red - the average number. Green - the smallest amount of errors.

The graph shows that as a project grows, programmers are forced to use more tools to keep its quality at the required level. It's impossible to create high-quality code in the same way as it was done, let's say, 8 years ago. This can be an unpleasant discovery for a team: it seems that they write the code as usual, but the situation with the code gets worse.

It is necessary to explore new methodologies and tools; otherwise, the old techniques may no longer be enough as the project grows. One of the most useful methods worth adopting is static analysis.

If the reader was not familiar with the methodology of static analysis, I hope I was able to raise interest in it. Here are several links that I suggest for more details:

Now it is time to move from theory to practice and see how static analysis helps a project such as Unreal Engine.

Our team was again honored to work with the code of Unreal Engine!

Although we did it two years ago, since then more work has accumulated in terms of code editing and improvement. It is always useful and interesting to look at a project's code base after a two-year break. There are several reasons for this.

First, we were interested to look at false positives from the analyzer. This work helped us improve our tool as well, which would reduce the number of unnecessary messages. Fighting false positives is a constant task for any developer of code analyzers. To those who are willing to read more, I suggest having a look at the article "The way static analyzers fight against false positives, and why they do it".

The codebase of Unreal Engine has significantly changed over the two years. Some fragments were added, some were removed, sometimes entire folders disappeared. That's why not all the parts of the code got sufficient attention, which means that there is some work for PVS-Studio.

I would like to compliment the Epic Games Company for taking good care of their code and using such tools as PVS-Studio. A reader could take that with a smile: "Of course, your team should be praising Epic Games Company, because it is your customer". To be honest, we do have a motive to leave positive feedback about the developers from Epic Games Company. However, I am saying the words of praise with absolute sincerity. The fact that the company uses static analysis tools shows the maturity of the project development cycle, and the care given to ensuring the reliability and safety of the code.

Why am I sure that using PVS-Studio can greatly improve the quality of the code? Because it is one of the most powerful static analyzers, and easily detects errors even in such projects as:

Using PVS-Studio brings the quality of the code to the next level. Doing this, Epic Games Company also cares about all those who use Unreal Engine in their projects. Every detected bug lessens somebody's headache.

I won't talk about all the errors that we found and fixed; I'll highlight only those that, to my mind, deserve attention. Those who wish may look at the other errors in the pull request on GitHub. To access the source code and the pull request, you must have access to the Unreal Engine repository on GitHub. To get it, you need accounts on GitHub and EpicGames, linked on the website unrealengine.com. After that, you need to accept the invitation to join the Epic Games community on GitHub.

The development of the PVS-Studio analyzer consists not only of creating new diagnostics, but also of improving the existing ones. For example, the algorithms for evaluating the possible values of variables are improving all the time. Thanks to this, the analyzer started detecting errors of this kind over a year ago.

    uint8* Data = (uint8*)PointerVal;

    if (Data != nullptr || DataLen == 0)
    {
        NUTDebug::LogHexDump(Data, DataLen);
    }
    else if (Data == nullptr)
    {
        Ar.Logf(TEXT("Invalid Data parameter."));
    }
    else // if (DataLen == 0)
    {
        Ar.Logf(TEXT("Invalid DataLen parameter."));
    }

PVS-Studio warning: V547 Expression 'Data == nullptr' is always true. unittestmanager.cpp 1924

If the condition (Data != nullptr || DataLen == 0) is not true, it means that the pointer Data is definitely equal to nullptr (and DataLen is not zero). Therefore, the further check (Data == nullptr) makes no sense, and the last else branch can never execute.
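The same conclusion can be reached by brute force. The sketch below is a reduced stand-in for the fragment rather than the engine code: `Branch`, `ClassifyBranch`, and `LastBranchReachable` are hypothetical names, and the pointer and the length are replaced by two flags. It assumes a C++14 compiler.

```cpp
// Hypothetical labels for the three branches of the original if/else chain.
enum class Branch { Dump, InvalidData, InvalidDataLen };

// Mirrors the structure of the flagged code; all names are illustrative only.
constexpr Branch ClassifyBranch(bool dataIsNull, bool dataLenIsZero)
{
    if (!dataIsNull || dataLenIsZero)
        return Branch::Dump;            // also taken for a null pointer with zero length
    else if (dataIsNull)
        return Branch::InvalidData;     // always true here: the first test has already failed
    else
        return Branch::InvalidDataLen;  // never reached
}

// Tries all four input combinations and reports whether any of them
// reaches the last branch.
constexpr bool LastBranchReachable()
{
    for (int mask = 0; mask < 4; ++mask)
    {
        const bool dataIsNull = (mask & 1) != 0;
        const bool dataLenIsZero = (mask & 2) != 0;
        if (ClassifyBranch(dataIsNull, dataLenIsZero) == Branch::InvalidDataLen)
            return true;
    }
    return false;
}

static_assert(!LastBranchReachable(), "the DataLen branch can never execute");
```

The enumeration also shows a second effect of the original condition: a null pointer combined with a zero length ends up in the dump branch.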

Correct variant of the code:

    if (Data != nullptr && DataLen > 0)

The diagnostic V547 was written in 2010. However, the mechanism for evaluating the values of variables wasn't perfect, and it did not allow the analyzer to find this error. The analyzer was confused by the check of the DataLen variable and couldn't work out which values the variables could hold under the various conditions. Analyzing such code is probably not a problem for a human being, but writing algorithms that look for such errors is not that simple.

So, this was a demonstration of the improvement of internal mechanisms of PVS-Studio, which helped detect a new error. These were inner improvements, with the help of which, the analyzer started working more accurately.

We also make "external" improvements by supporting new constructions appearing in the new versions of the C++ language. Still, it's not enough to learn to parse C++11, C++14 and so on. It's equally important to refine old diagnostics, and to implement new diagnostics that will find bugs in new language constructs. As an example, let's consider diagnostic V714 that looks for incorrect range-based loops. In Unreal Engine the V714 diagnostic points to the following loop:

    for (TSharedPtr<SWidget> SlateWidget : SlateWidgets)
    {
        SlateWidget = nullptr;
    }

PVS-Studio warning: V714 Variable is not passed into foreach loop by a reference, but its value is changed inside of the loop. vreditorradialfloatingui.cpp 170
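The effect this warning describes can be reproduced in isolation. In the sketch below, `std::shared_ptr<int>` stands in for `TSharedPtr<SWidget>` (an assumption made to keep the example free of engine headers), and `WidgetList`, `ResetByValue`, `CountNonNull`, and `DemoResetByValue` are hypothetical names.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// std::shared_ptr<int> is a stand-in for TSharedPtr<SWidget>.
using WidgetList = std::vector<std::shared_ptr<int>>;

// Same shape as the flagged loop: 'widget' is a copy of the element,
// so assigning nullptr resets the copy and leaves the container alone.
inline void ResetByValue(WidgetList& widgets)
{
    for (std::shared_ptr<int> widget : widgets)
    {
        widget = nullptr;
    }
}

// Counts the elements that still own an object.
inline int CountNonNull(const WidgetList& widgets)
{
    int count = 0;
    for (const std::shared_ptr<int>& widget : widgets)
    {
        if (widget != nullptr)
            ++count;
    }
    return count;
}

// Both elements survive the "reset".
inline void DemoResetByValue()
{
    WidgetList widgets{ std::make_shared<int>(1), std::make_shared<int>(2) };
    ResetByValue(widgets);
    assert(CountNonNull(widgets) == 2);
}
```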

A programmer wanted to assign the value nullptr to all the elements in the container SlateWidgets. The error is that SlateWidget is an ordinary local variable that is created anew on every iteration of the loop. Assigning a value to this variable does not change the element in the container. We should use a reference so that the code works correctly:

    for (TSharedPtr<SWidget> &SlateWidget : SlateWidgets)
    {
        SlateWidget = nullptr;
    }

Of course, we also add diagnostics that aren't related to new language features. For example, the diagnostic V767 didn't exist in 2015 when our team wrote the previous article about the check of Unreal Engine. This diagnostic appeared in PVS-Studio in the version 6.07 (August 8, 2016). Thanks to this diagnostic we detected such an error:

    for(int i = 0; i < SelectedObjects.Num(); ++i)
    {
        UObject* Obj = SelectedObjects[0].Get();
        EdObj = Cast<UEditorSkeletonNotifyObj>(Obj);
        if(EdObj)
        {
            break;
        }
    }

PVS-Studio warning: V767 Suspicious access to element of 'SelectedObjects' array by a constant index inside a loop. skeletonnotifydetails.cpp 38

The loop is meant to search for an element of the UEditorSkeletonNotifyObj type. Due to a typo, the numeric literal 0 was written instead of the variable i when selecting the element, so the loop examines the first element over and over.
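A reduced sketch shows what the typo does to the search. Plain integers replace the engine objects here, "is negative" replaces the type check, and the names `FindFirstNegative` and `DemoConstantIndex` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the index of the first negative value, or -1 if there is none.
// With useLoopIndex == false the function reproduces the typo: it reads
// element 0 on every iteration, like SelectedObjects[0] in the loop above.
inline int FindFirstNegative(const std::vector<int>& values, bool useLoopIndex)
{
    for (std::size_t i = 0; i < values.size(); ++i)
    {
        const std::size_t index = useLoopIndex ? i : 0;
        if (values[index] < 0)
            return static_cast<int>(index);
    }
    return -1;
}

inline void DemoConstantIndex()
{
    const std::vector<int> values{ 4, 8, -15, 16 };
    assert(FindFirstNegative(values, false) == -1);  // only values[0] was ever examined
    assert(FindFirstNegative(values, true) == 2);    // every element was examined
}
```

The buggy variant still works whenever the wanted element happens to be first, which is one reason such a typo can survive testing.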

Correct variant of the code:

    UObject* Obj = SelectedObjects[i].Get();

Let's take a look at another diagnostic, V763, that also appeared in PVS-Studio 6.07. This bug is quite amusing, but I'll have to cite quite a long body of the RunTest function:

    bool FCreateBPTemplateProjectAutomationTests::RunTest(
        const FString& Parameters)
    {
        TSharedPtr<SNewProjectWizard> NewProjectWizard;
        NewProjectWizard = SNew(SNewProjectWizard);

        TMap<FName, TArray<TSharedPtr<FTemplateItem>> >& Templates =
            NewProjectWizard->FindTemplateProjects();

        int32 OutMatchedProjectsDesk = 0;
        int32 OutCreatedProjectsDesk = 0;
        GameProjectAutomationUtils::CreateProjectSet(Templates,
            EHardwareClass::Desktop,
            EGraphicsPreset::Maximum,
            EContentSourceCategory::BlueprintFeature,
            false,
            OutMatchedProjectsDesk,
            OutCreatedProjectsDesk);

        int32 OutMatchedProjectsMob = 0;
        int32 OutCreatedProjectsMob = 0;
        GameProjectAutomationUtils::CreateProjectSet(Templates,
            EHardwareClass::Mobile,
            EGraphicsPreset::Maximum,
            EContentSourceCategory::BlueprintFeature,
            false,
            OutMatchedProjectsMob,
            OutCreatedProjectsMob);

        return ( OutMatchedProjectsDesk == OutCreatedProjectsDesk ) &&
               ( OutMatchedProjectsMob  == OutCreatedProjectsMob );
    }

The following part is the most important:

Then there is a check that the values of these variables meet the condition:

    return ( OutMatchedProjectsDesk == OutCreatedProjectsDesk ) &&
           ( OutMatchedProjectsMob  == OutCreatedProjectsMob );

Don't look for errors in the body of the reviewed function; they aren't there. I have given this code to show that the function CreateProjectSet is expected to write values into the two variables passed as its last two actual arguments.

The error lurks in the function CreateProjectSet:

    static void CreateProjectSet(.... int32 OutCreatedProjects,
                                      int32 OutMatchedProjects)
    {
        ....
        OutCreatedProjects = 0;
        OutMatchedProjects = 0;
        ....
        OutMatchedProjects++;
        ....
        OutCreatedProjects++;
        ....
    }

PVS-Studio will issue two warnings here:

The analyzer is absolutely right when it warns that the values of the arguments OutCreatedProjects and OutMatchedProjects are not used in any way, but are immediately overwritten with 0.
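The effect on the caller can be seen in a reduced sketch. `CountProjects` is a hypothetical stand-in with the same parameter style as CreateProjectSet, and the numbers it adds are arbitrary; `MatchedEqualsCreated` mirrors the final comparison in RunTest.

```cpp
#include <cassert>

// Hypothetical stand-in for CreateProjectSet: both counters are taken
// by value, so the function works on its own private copies.
inline void CountProjects(int outCreatedProjects, int outMatchedProjects)
{
    outCreatedProjects = 0;   // the incoming values are discarded here
    outMatchedProjects = 0;

    outMatchedProjects += 5;  // arbitrary numbers standing in for the real work
    outCreatedProjects += 3;
}

// Mirrors the final comparison in RunTest.
inline bool MatchedEqualsCreated()
{
    int outMatched = 0;
    int outCreated = 0;
    CountProjects(outCreated, outMatched);

    // Nothing written inside CountProjects is visible here.
    assert(outMatched == 0 && outCreated == 0);

    return outMatched == outCreated;  // compares 0 with 0
}
```

In this sketch the check succeeds although the function counted 5 matched and 3 created projects internally, so a test built this way can never fail.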

The error is simple: a programmer forgot to pass parameters by reference. Correct variant of the code:

    static void CreateProjectSet(.... int32 &OutCreatedProjects,
                                      int32 &OutMatchedProjects)

The errors shown so far take at least some attentiveness to detect. However, there are many more simple, banal errors. For example, missing break statements:

    {
      case EWidgetBlendMode::Opaque:
        ActualBackgroundColor.A = 1.0f;
      case EWidgetBlendMode::Masked:
        ActualBackgroundColor.A = 0.0f;
    }

Or, incorrect comparison of several variables for equality:

    checkf(GPixelFormats[PixelFormat].BlockSizeX
        == GPixelFormats[PixelFormat].BlockSizeY
        == GPixelFormats[PixelFormat].BlockSizeZ
        == 1,
      TEXT("Tried to use compressed format?"));

If someone is new to C++ and doesn't get why this comparison is incorrect, I suggest looking at the description of the V709 diagnostic.
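In short, `==` associates left to right and yields a `bool`, which is converted to 0 or 1 before the next comparison. The sketch below spells this out with plain integers; `ChainedEquals` and `AllEqualOne` are hypothetical names, and the second function is only our assumption about what the check was meant to express.

```cpp
// Evaluates the chain the way C++ parses a == b == c == 1:
// left to right, with each intermediate bool converted to 0 or 1.
constexpr bool ChainedEquals(int a, int b, int c)
{
    return ((a == b) == c) == 1;
}

// Assumed intent of the original check: all three values are equal to 1.
constexpr bool AllEqualOne(int a, int b, int c)
{
    return a == 1 && b == 1 && c == 1;
}

// The two agree when everything is 1 ...
static_assert(ChainedEquals(1, 1, 1) && AllEqualOne(1, 1, 1), "both accept 1, 1, 1");

// ... but the chain also accepts inputs that are clearly not "all ones".
static_assert(ChainedEquals(4, 4, 1) && !AllEqualOne(4, 4, 1), "(4 == 4) is 1, and 1 == 1");
static_assert(ChainedEquals(2, 3, 0) && !AllEqualOne(2, 3, 0), "(2 == 3) is 0, and 0 == 0");
```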

These errors are the most numerous among those detected by PVS-Studio. But if they look so simple, why are they still unnoticed?

They look trivial only when an article highlights them for the reader. It's really hard to find them in the code of real applications. Even during a code review, one may look at the code block

    {
      case EWidgetBlendMode::Opaque:
        ActualBackgroundColor.A = 1.0f;
      case EWidgetBlendMode::Masked:
        ActualBackgroundColor.A = 0.0f;
    }

and not see any errors. The code looks so simple that a programmer doesn't even try to read it carefully, thinking that it is completely correct.
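For completeness, here is what the fall-through does at run time. The sketch is a reduced model, not the engine code: the `BlendMode` enum, the `Transparent` value, and both function names are hypothetical, and a C++14 compiler is assumed.

```cpp
// Hypothetical enum; only the first two names echo the fragment above.
enum class BlendMode { Opaque, Masked, Transparent };

// Same shape as the fragment: without a break, the Opaque case runs on
// into the Masked case and its assignment is overwritten.
constexpr float AlphaWithFallthrough(BlendMode mode, float alpha)
{
    switch (mode)
    {
    case BlendMode::Opaque:
        alpha = 1.0f;
    case BlendMode::Masked:
        alpha = 0.0f;
    default:
        break;
    }
    return alpha;
}

// The same switch with a break after each case.
constexpr float AlphaWithBreaks(BlendMode mode, float alpha)
{
    switch (mode)
    {
    case BlendMode::Opaque:
        alpha = 1.0f;
        break;
    case BlendMode::Masked:
        alpha = 0.0f;
        break;
    default:
        break;
    }
    return alpha;
}

static_assert(AlphaWithFallthrough(BlendMode::Opaque, 0.5f) == 0.0f, "Opaque ends up fully transparent");
static_assert(AlphaWithBreaks(BlendMode::Opaque, 0.5f) == 1.0f, "Opaque stays opaque");
```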

Now, let's discuss a question: can we reduce the number of errors in any way?

The errors described in the article were found using PVS-Studio, and the reader most likely expects me to recommend static analysis tools. Yes, I do recommend integrating the PVS-Studio static analyzer into the development process. There is no reason to pass up the chance to find several bugs right after the code is written.

However, I would like to discuss a very important point that is usually not mentioned in articles related to code quality.

It is impossible to achieve high quality in a project, until a team of programmers admit that they make mistakes, and sometimes very simple ones.

This phrase sounds trivial, but it's very important. Until a programmer realizes that this statement refers not to an abstract programmer, but to him personally, no tool or methodology will be useful. In other words, programmers are most often too proud to admit that they need additional tools and methods to write quality code.

All programmers know that there are errors in all programs. Still, they suppose that the rules, recommendations, and tools aren't for them, as they are great professional developers who write bugless code.

This is a problem of overestimating one's own level. The article "The Problem With 'Above Average Programmers'" gives a nice explanation of this effect. I'll quote an excerpt:

How would you rate your programming skills? (Below Average, Average, or Above Average)?

Based on psychological studies across many different groups, about 90% of all programmers will answer "Above Average".

Of course, that can't possibly be true. In a group of 100 people, 50 are above average, 50 are below average. This effect is known as illusory superiority. It is described in many spheres, but even if you haven't heard about this, you will most likely answer "above average".

This is a problem that prevents programmers from learning new technologies and methodologies. My main recommendation is to try reconsidering your attitude towards your own work and the work of the team. The position "I/we write great code" is counterproductive. It's a common thing for people to make mistakes; the same is true for programmers.

By thinking through this, a person can make the biggest step in the direction of high quality software.

Note: I also suggest that project managers read this article.

I would like to warn about another reasoning error. Static and dynamic analyzers mainly detect simple bugs and typos. No, they won't find high-level logic errors, because artificial intelligence has not been invented yet. However, a simple error can cause great harm and take a lot of time, money, and effort to repair. Read more: "If the coding bug is banal, it doesn't mean it's not crucial".

And one more thing: don't look for a silver bullet. Use a combination of various elements such as:

I'm not asking that you start using all the methods listed above at once. In different projects, some things will be more useful, some less. The main thing is not to rely on any single method, but to use a rational combination of techniques. Only this will improve the quality and reliability of the code.

Unreal Engine developers care about the quality of their code, and PVS-Studio team does its best to help them in their endeavors.

The PVS-Studio team is ready to work with the code of your projects as well. Besides providing the license for the tool and further support, we perform code auditing, migration of the code, and so on.

I wish you as few bugs in the programs as possible.
