In this article I would like to describe several issues that the PVS-Studio developers had to face while working on support for the new version of Visual Studio. I will also try to answer a question: why is supporting our C# analyzer, which is based on a "ready-made solution" (Roslyn, in this case), in some situations more expensive than supporting our "self-written" C++ analyzer?

With the release of the new Visual Studio 2017, Microsoft introduced a large number of innovations in its "flagship" IDE. These include:

PVS-Studio 6.14, supporting Visual Studio 2017, was released 10 days after the release of the IDE. Work on supporting the new Visual Studio began much earlier - at the end of last year. Certainly, not all the innovations in Visual Studio affect PVS-Studio; however, the latest release of this IDE turned out to be especially labor-intensive to support across all the components of our product. The most affected part was not our "traditional" C++ analyzer (we managed to support the new version of Visual C++ quite fast), but the components responsible for the interaction with MSBuild and the Roslyn platform (which our C# analyzer is based on).

The new version of Visual Studio was also the first one released since we created the C# analyzer in PVS-Studio (which we released in parallel with the first release of Roslyn in Visual Studio 2015) and since the C++ analyzer for Windows became more tightly integrated with MSBuild. Therefore, due to the difficulties encountered when updating these components, supporting the new VS became the most time-consuming in the history of our product.

Chances are, you know that PVS-Studio is a static analyzer for C/C++/C#, running on Windows and Linux. What is inside PVS-Studio? First of all, of course, there is a cross-platform C++ analyzer, along with (mostly) cross-platform utilities for integrating it into various build systems.

However, the majority of our users on the Windows platform use Microsoft's software development stack, i.e. Visual C++/C#, Visual Studio, MSBuild and so on. For such users we provide the means to work with the analyzer from Visual Studio (an IDE plugin) and a command line utility to check C++/C#/MSBuild projects. The same utility is used by our VS plugin "under the hood". To analyze the structure of projects, this utility uses the MSBuild API directly. Our C# analyzer is based on the .NET Compiler Platform (Roslyn), and so far it is only available for Windows users.

So, we see that on the Windows platform PVS-Studio uses "native" Microsoft tools for integration into Visual Studio, for the analysis of the build system and for the analysis of C# code. With the release of the new version of Visual Studio, all of these components were updated as well.

Besides the updates of MSBuild and Roslyn, Visual Studio got a number of new features that greatly affected our product. As it happened, several of our components stopped working, even though they had been used without any changes through several previous releases of Visual Studio; some of them had been working since Visual Studio 2005 (which we no longer support). Let's take a closer look at these changes.

A new component-based system of installation, allowing the user to choose only the necessary components, was completely "untied" from the Windows system registry. Theoretically, it made the IDE more "portable" and allowed installing several versions of Visual Studio on one system.

However, this also affected extension developers, because all the code that detected the presence of the IDE or its individual components stopped working.

Figure 1 - the new Visual Studio Installer

Developers are now offered COM interfaces, in particular ISetupConfiguration, for getting information about the installed versions of Visual Studio. I think many would agree that COM interfaces are less convenient to use than reading from the registry. And while wrappers for these interfaces exist for C# code, we had to work quite hard to adapt our InnoSetup-based installer. In the end, Microsoft replaced one Windows-specific technology with another. In my opinion, the benefit of such a transition is rather questionable, all the more so because Visual Studio could not fully abandon the registry. At least in this version.

A more significant consequence of this transition, besides the rather subjective matter of convenience, was that it indirectly affected the work of the MSBuild 15 libraries and their backward compatibility with previous versions of MSBuild. The reason is that the new version of MSBuild also stopped using the registry. We had to update all the MSBuild components that we use, because Roslyn directly depends on them. I will give more details about the consequences of these changes a little later.

By the MSBuild infrastructure for C++, I primarily mean the layer responsible for invoking the compiler directly when building Visual C++ projects. This layer is called PlatformToolset in MSBuild, and it prepares the execution environment for the C++ compiler. The system of PlatformToolsets also provides backward compatibility with previous versions of Visual C++ compilers: the latest version of MSBuild can build projects that use previous versions of the Visual C++ compiler.

For example, you can build a project that uses the C++ compiler from Visual Studio 2015 in MSBuild 15/Visual Studio 2017, if this version of the compiler is installed in the system. This can be rather useful, because it allows using the new version of the IDE on the project immediately, without first porting the project to a new version of the compiler (which is sometimes not a simple task).

PVS-Studio fully supports the PlatformToolsets and uses the "native" MSBuild APIs to prepare the environment of C++ analyzer, allowing the analyzer to check the source code as close to how it is compiled as possible.

Such close integration with MSBuild used to let us support new versions of the C++ compiler from Microsoft fairly easily. Or, to be more precise, to support its build environment, as the support of new compiler capabilities (for example, new syntax and the header files that use it) is outside the scope of this article. We just added a new PlatformToolset to the list of supported ones.

In the new version of Visual C++, the procedure for configuring the compiler environment underwent considerable changes, which again "broke" our code that had previously worked for all versions starting with Visual Studio 2010. Although the platform toolsets from the previous compiler versions still work, we had to write a separate code branch to support the new toolset. Coincidentally (or perhaps not), the MSBuild developers also changed the naming pattern of C++ toolsets: v100, v110, v120, v140 for the previous versions and v141 for the latest version (while Visual Studio 2017 itself is still version 15.0).
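To make the naming scheme concrete, here is a minimal Python sketch that maps the toolset names listed above to the Visual Studio releases they shipped with. The helper `needs_separate_branch` is hypothetical and only mirrors the statement that v141 required its own code path; it is not PVS-Studio's actual code.

```python
# Toolset names from the text, mapped to the Visual Studio release
# each one shipped with.
PLATFORM_TOOLSETS = {
    "v100": "Visual Studio 2010",
    "v110": "Visual Studio 2012",
    "v120": "Visual Studio 2013",
    "v140": "Visual Studio 2015",
    "v141": "Visual Studio 2017",
}


def needs_separate_branch(toolset: str) -> bool:
    """Hypothetical helper: True for the toolset whose environment setup changed."""
    if toolset not in PLATFORM_TOOLSETS:
        raise ValueError(f"unsupported platform toolset: {toolset}")
    return toolset == "v141"


if __name__ == "__main__":
    for name, product in PLATFORM_TOOLSETS.items():
        branch = "new setup" if needs_separate_branch(name) else "old setup"
        print(f"{name}: {product} ({branch})")
```

Note that v141 breaks the earlier pattern in which the toolset number followed the major version of the IDE.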

In the new version, the structure of the vcvars scripts, on which the deployment of the compiler environment relies, was changed completely. These scripts set up the environment variables necessary for the compiler, add the paths to the binary directories and system C++ libraries to the PATH variable, and so on. The analyzer requires an identical environment, in particular for preprocessing the source files before the analysis starts.
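One common way to see what such a script changes is to compare the output of the Windows `set` command before and after running it. The Python sketch below parses two such dumps and reports the added PATH entries. The sample values and directory names are invented for illustration, and this is not necessarily how PVS-Studio obtains the environment.

```python
def parse_set_output(text: str) -> dict:
    """Parse NAME=value lines, as printed by the Windows `set` command."""
    env = {}
    for line in text.splitlines():
        name, sep, value = line.partition("=")
        if sep and name:  # skip lines without '=' or with an empty name
            env[name] = value
    return env


def added_path_entries(before: dict, after: dict) -> list:
    """Return PATH entries present after the script ran but not before."""
    old = before.get("PATH", "").split(";")
    return [p for p in after.get("PATH", "").split(";") if p and p not in old]


if __name__ == "__main__":
    # Hypothetical dumps; real ones would come from running `set` in cmd.exe.
    before = parse_set_output("PATH=C:\\Windows\nTEMP=C:\\Temp")
    after = parse_set_output(
        "PATH=C:\\Windows;C:\\example\\compiler\\bin\n"
        "TEMP=C:\\Temp\n"
        "INCLUDE=C:\\example\\include"
    )
    print("new PATH entries:", added_path_entries(before, after))
    print("new variables:", sorted(set(after) - set(before)))
```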

It can be said that the new deployment scripts are in some ways "tidier" and most likely easier to support and extend (perhaps the update of these scripts was caused by the inclusion of clang as a compiler in the new version of Visual C++), but from the point of view of the C++ analyzer developers, this added to our workload.

Roslyn 2.0 and MSBuild 15 were released together with Visual Studio 2017. It may seem that to support these new versions in PVS-Studio C#, it would be enough to upgrade the NuGet packages in the projects that use them. After that, all the "goodies" of the new versions would become available to our analyzer, such as support for C# 7.0, new types of .NET Core projects and so on.

Indeed, it was quite easy to update the packages we use and rebuild the C# analyzer. However, the very first run of the new version on our tests showed that "everything got broken". Further experiments showed that the C# analyzer worked correctly only on a system with Visual Studio 2017/MSBuild 15 installed. It was not enough that our distribution kit contained the necessary versions of the Roslyn/MSBuild libraries. Releasing the new version of the C# analyzer "as is" would have degraded the analysis results for users who work with previous versions of the C# compiler.

When we were creating the first version of the C# analyzer, which used Roslyn 1.0, we tried to make our analyzer an "independent" solution that does not require any third-party components to be installed. The main requirement for the user's system is that the project to be analyzed can be compiled - if the project can be built, it can be checked by the analyzer. Obviously, to build Visual C# projects (csproj) on Windows, one needs at least MSBuild and a C# compiler.

We immediately dropped the idea of obliging our users to install the latest versions of MSBuild and Visual C# together with the C# analyzer. If the project builds normally in Visual Studio 2013 (which in its turn uses MSBuild 12), the requirement to install MSBuild 15 would look redundant. We, on the contrary, try to lower the "threshold" for starting to use our analyzer.

Microsoft's web installers turned out to be quite demanding in terms of download size: while our distribution is about 50 megabytes, the installer for Visual C++, for instance (which is also necessary for the C++ analyzer), estimated the download volume at 3 gigabytes. In the end, as we found out later, these components would still not have been enough for the C# analyzer to work fully correctly.

When we were just beginning to develop our C# analyzer, we had two ways to work with the Roslyn platform.

The first one was to use the Diagnostics API, which was designed specifically for developing .NET analyzers. This API makes it possible to implement your own "diagnostics" by inheriting from the abstract DiagnosticAnalyzer class. With the help of the CodeFixProvider class, users can implement automatic fixes for such warnings.

The absolute advantage of this approach is the power of Roslyn's existing infrastructure. The diagnostic rules become available in the Visual Studio code editor and can be applied while editing code in the IDE or when rebuilding the project. This approach doesn't oblige the analyzer developer to open project and source files manually - everything is done within the "native" Roslyn-based compiler. If we had chosen this way, we would probably not have had any issues with updating to the new Roslyn, at least not in their current form.

The second option was to implement a completely standalone analyzer, by analogy with PVS-Studio C++. This variant seemed better to us, as we decided to make the infrastructure of the C# analyzer as close to the existing C/C++ one as possible. This allowed us to adapt the existing C++ diagnostics rather quickly (of course, not all of them, but those that were relevant for C#), as well as the more "advanced" analysis methods.

Roslyn provides the facilities necessary for such an approach: we open Visual C# project files ourselves, build syntax trees from the source code and implement our own mechanism for traversing them. All of this can be done using the MSBuild and Roslyn APIs. Thus, we got full control over all the phases of analysis, independent of the compiler or the IDE.

No matter how tempting the "free" integration with the Visual Studio code editor may seem, we preferred using our own IDE interface, as it provides more capabilities than the standard Error List (where such warnings would be issued). Using the Diagnostics API would also limit us to the compiler versions based on Roslyn, i.e. the ones included in Visual Studio 2015 and 2017, while a standalone analyzer lets us support all the previous versions.

While creating the C# analyzer, we saw that Roslyn turned out to be very tightly tied to MSBuild. Of course, I am speaking here about the Windows version of Roslyn; we haven't had a chance to work with the Linux version yet, so I cannot say exactly how things work there.

I should say right away that Roslyn's API to work with MSBuild projects remains fairly incomplete even in version 2.0. When writing a C# analyzer, we had to use a lot of "duct taping", as Roslyn was doing several things incorrectly (incorrectly means not in the way MSBuild would do it when building the same projects), which naturally led to false positives and errors during the analysis of the source files.

It is exactly these close ties between Roslyn and MSBuild that led to the issues we faced when updating to Visual Studio 2017.

For the analyzer to work, we need to get two entities from Roslyn: a syntax tree of the checked code and a semantic model of the tree, i.e. the semantics of the syntax constructions represented by its nodes - the types of class fields, return values, signatures of methods and so on. And while a source code file alone is enough to get the syntax tree with Roslyn, generating a semantic model of this file requires compiling the project that includes it.

The update of Roslyn to 2.0 led to errors in the semantic model on our tests (the V051 analyzer message points to that). Such errors usually manifest in the analysis results as false positive/negative warnings, i.e. some useful warnings disappear and wrong warnings appear.

To obtain a semantic model, Roslyn provides the so-called Workspace API, which can open .NET MSBuild projects (in our case csproj and vbproj) and get the "compilations" of such projects. In this context, a "compilation" is an object of Roslyn's helper Compilation class, which abstracts the preparation and invocation of the C# compiler. We can get a semantic model from such a "compilation". Compilation errors lead to errors in the semantic model.

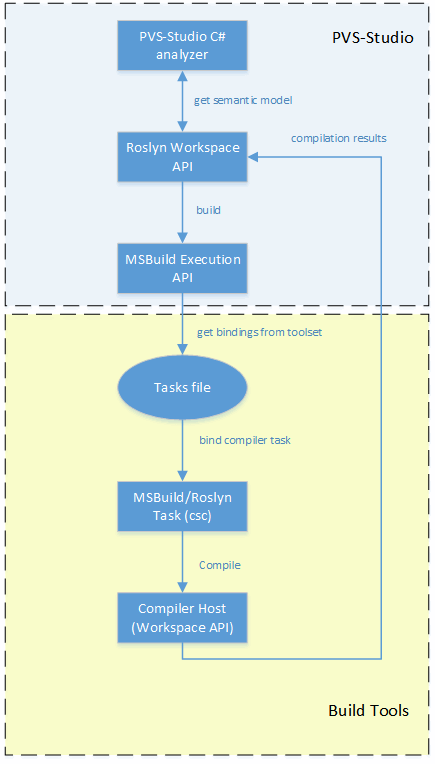

Now let's see how Roslyn interacts with MSBuild to get a "compilation" of a project. Below here is a diagram illustrating the interaction in the simplified form:

Figure 2 - Scheme of the interaction between Roslyn and MSBuild

The chart is divided into 2 segments - PVS-Studio and Build Tools. PVS-Studio segment contains components included in the distribution with our analyzer - MSBuild and Roslyn libraries, implementing the APIs that we utilize. The Build Tools segment includes the infrastructure of the build system that should be present in the system for the correct work of these APIs.

After the analyzer has requested the compilation object from the Workspace API (to get the semantic model), Roslyn starts building the project or, in MSBuild terminology, performs the csc build task. After the build starts, control passes to MSBuild, which performs all the preparatory steps in accordance with the build scripts.

It should be noted that this is not a "normal" build (it will not produce binary files), but a so-called "design" mode build. The ultimate goal of this step is for Roslyn to obtain all the information that would be available to the compiler during the "real" build. If the build is tied to the execution of any pre-build steps (for example, running scripts that auto-generate some of the source files), all such actions will also be performed by MSBuild, as if it were a normal build.

Having received control, MSBuild (or, to be exact, the library included with PVS-Studio) starts looking for the build toolsets installed in the system. Having found the appropriate toolset, it tries to instantiate the steps from the build scripts. The toolsets correspond to the instances of MSBuild installed in the system. For example, MSBuild 14 (Visual Studio 2015) installs toolset 14.0, MSBuild 12 installs toolset 12.0, and so on.

The toolset contains all the standard build scripts of MSBuild projects. The project file (for example, csproj) usually contains just the list of input build files (for example, the files with the source code). The toolset contains all the steps that should be performed over these files: from the compilation and linking to the publishing of the build results. Let's not speak too much about the way MSBuild works; it's just important to understand that one project file and the parser of this project (i.e. that MSBuild library, included in PVS-Studio) are not enough to do a full build.

Let's move to the Build Tools segment of the diagram. We are interested in the csc build step. MSBuild needs to find the library where this step is actually implemented, and the tasks file from the selected toolset is used for that. A tasks file is an xml file containing paths to the libraries that implement the standard build tasks. In accordance with this file, the appropriate library containing the implementation of the csc task is found and loaded. The csc task prepares everything for the call of the compiler itself (usually a separate command line utility, csc.exe). As we remember, this is a "fake" build, so when everything is ready, the compiler is not actually called. Roslyn now has all the information necessary to get the semantic model - all the references to other projects and libraries are expanded (as the analyzed source code could contain types declared in these dependencies), all the prebuild steps are performed, all the dependencies are restored/copied and so on.

Fortunately, if something went wrong on one of these steps, Roslyn has a reserve mechanism to prepare a semantic model, based on the information available before the start of the compilation, i.e. before the moment when the control was passed to MSBuild Execution API. Typically, this information is gathered from the evaluation of project file (which admittedly is also conducted by a separate MSBuild Evaluation API). Often this information is insufficient to build a complete semantic model. The best example here is a new format of .NET Core projects, where the project file itself doesn't contain anything - even a list of source files, not to mention the dependencies. But even in "normal" .csproj files, we saw the loss of the paths to the dependencies and symbols of conditional compilation (defines) after the failed compilation, although their values were directly written in the project file itself.
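The difference between the two project formats can be illustrated with a small Python sketch that lists the `Compile` items declared in a project file. Both project snippets are simplified, hypothetical examples, and the code only reads the XML; it does not reproduce what the MSBuild Evaluation API does. The point is merely that reading an SDK-style file on its own yields no explicit source list.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical "classic" project: sources are listed explicitly.
CLASSIC_CSPROJ = """
<Project ToolsVersion="14.0"
         xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <ItemGroup>
    <Compile Include="Program.cs" />
    <Compile Include="Util.cs" />
  </ItemGroup>
</Project>
"""

# Simplified, hypothetical new-format project: no source files are listed.
SDK_STYLE_CSPROJ = """
<Project Sdk="Microsoft.NET.Sdk">
  <PropertyGroup>
    <TargetFramework>netcoreapp1.1</TargetFramework>
  </PropertyGroup>
</Project>
"""


def list_compile_items(project_xml: str) -> list:
    """Return the Include attribute of every Compile element, ignoring namespaces."""
    root = ET.fromstring(project_xml.strip())
    items = []
    for element in root.iter():
        local_name = element.tag.rsplit("}", 1)[-1]  # drop "{namespace}" prefix
        if local_name == "Compile" and "Include" in element.attrib:
            items.append(element.attrib["Include"])
    return items


if __name__ == "__main__":
    print("classic:", list_compile_items(CLASSIC_CSPROJ))
    print("sdk-style:", list_compile_items(SDK_STYLE_CSPROJ))
```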

Now that, I hope, it has become a little clearer what happens "inside" PVS-Studio when checking a C# project, let's see what happened after the update of Roslyn and MSBuild. The chart above clearly shows that, from the PVS-Studio point of view, the Build Tools part is located in the "external environment" and accordingly is not controlled by the analyzer. As described previously, we abandoned the idea of putting the whole MSBuild in the distribution, which is why we have to rely on what is installed in the user's system. There can be many variants, as we support all the Visual C# versions starting with Visual Studio 2010. At the same time, Roslyn became the basis for the C# compiler only in the previous version of Visual Studio - 2015.

Let's consider the situation when the system where the analyzer is run doesn't have MSBuild 15 installed. The analyzer is run to check a project for Visual Studio 2015 (MSBuild 14). And here we see the first flaw of Roslyn - when opening an MSBuild project, it doesn't specify the correct toolset. If the toolset isn't specified, MSBuild uses the default toolset, which is determined by the version of the MSBuild library in use. And since Roslyn 2.0 is compiled with a dependency on MSBuild 15, the library chooses this toolset version.

Because this toolset is missing from the system, MSBuild instantiates it incorrectly - we get a "mixture" of non-existent and incorrect paths pointing to the toolset of version 4. Why 4? Because this toolset, together with the 4th version of MSBuild, is always available in the system as a part of .NET Framework 4 (in later versions, MSBuild was untied from the framework). The result is the selection of an incorrect targets file, an incorrect csc task and, ultimately, errors in the compilation of the semantic model.
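The selection logic described above can be modeled in a few lines of Python. This is a deliberately simplified illustration, not MSBuild's actual algorithm: the function name and its parameters are hypothetical, and "unreliable" stands for the mixture of wrong paths mentioned in the text.

```python
def resolve_toolset(requested, library_default, installed):
    """Toy model: pick a toolset version and report whether it can be trusted.

    requested       -- version named by the caller, or None if not specified
    library_default -- version implied by the MSBuild library in use
    installed       -- set of toolset versions actually present on the machine
    """
    version = requested if requested is not None else library_default
    reliable = version in installed
    return version, reliable


if __name__ == "__main__":
    # A machine with Visual Studio 2015 only: toolsets 4.0 and 14.0 exist.
    installed = {"4.0", "14.0"}

    # Nothing is specified, so the default of the MSBuild 15 library is used.
    print(resolve_toolset(None, "15.0", installed))

    # Naming the toolset that matches the project avoids the problem.
    print(resolve_toolset("14.0", "15.0", installed))
```

In this model the first call picks a version that is not installed, which corresponds to the failure case in the text; the second call shows what changes when the caller names an installed toolset explicitly.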

Why haven't we seen such an error on the old version of Roslyn? Firstly, according to the usage statistics of our analyzer, the majority of our users have Visual Studio 2015, i.e. the correct (for Roslyn 1.0) version of MSBuild is already installed.

Secondly, the new version of MSBuild, as I mentioned earlier, no longer uses the registry for storing its configuration, in particular the information about the installed toolsets. While all previous versions of MSBuild kept their toolsets in the registry, MSBuild 15 stores them in a config file next to MSBuild.exe. The new MSBuild also changed its "permanent address": previous versions were uniformly located in C:\Program Files (x86)\MSBuild\%VersionNumber%, whereas the new version is deployed by default to the installation directory of Visual Studio (which has also changed in comparison with previous versions).

This fact sometimes "concealed" a wrongly selected toolset in previous versions - the semantic model was generated correctly even with such an incorrect toolset. Moreover, even if the required new toolset is present in the system, the library we use may not find it - it is now located in the app.config file of MSBuild.exe rather than in the registry, and the library is loaded not from the MSBuild.exe process but from PVS-Studio_Cmd.exe. The new MSBuild has a reserve mechanism for this case. If the system has a COM server installed that implements ISetupConfiguration, MSBuild will try to find the toolset in the installation directory of Visual Studio. However, the standalone installer of MSBuild, of course, does not register this COM interface - only the Visual Studio installer does that.

And finally, the third and probably the most important reason was, unfortunately, insufficient testing of our analyzer on the various supported configurations, which did not allow us to identify the problem earlier. It turned out that all the machines used for everyday testing have Visual Studio 2015/MSBuild 14 installed. Fortunately, we were able to identify and fix the problem before our clients reported it to us.

Once we understood why Roslyn did not work, we decided to try specifying the correct toolset when opening the project. Which toolset should be considered "correct" is a separate question. We thought about it when we started using the same MSBuild APIs to open C++ projects for our C++ analyzer. Since a whole article could be devoted to this issue, we will not dwell on it now. Unfortunately, Roslyn does not provide a way to specify which toolset will be used, so we had to modify its code (an additional inconvenience for us, because we can no longer just take the ready-to-use NuGet packages). After that, the issues disappeared in several projects from our test base. However, problems appeared in a larger number of projects. What went wrong now?

We should note here that everything described in the diagram above occurs within a single operating system process - PVS-Studio_Cmd.exe. It turned out that when the correct toolset was chosen, there was a conflict when loading dll modules. Our test version uses Roslyn 2.0, which includes the library Microsoft.CodeAnalysis.dll, also of version 2.0. At the beginning of the project analysis, this library is already loaded into the memory of the PVS-Studio_Cmd.exe process (our C# analyzer). As we are checking a Visual Studio 2015 project, we specify toolset 14.0 when opening the project. Further on, MSBuild finds the correct tasks file and starts the compilation. Since the C# compiler in this toolset (let me remind you that we are using Visual Studio 2015) uses Roslyn 1.3, MSBuild attempts to load Microsoft.CodeAnalysis.dll of version 1.3 into the memory of the process. It fails to do so, because a module of a higher version is already loaded.
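The conflict can be pictured with a toy Python model of a process that allows only one version of a given module to be loaded at a time. The class and exception names are hypothetical, and the model ignores the real .NET assembly binding rules; it only mirrors the sequence of events described above.

```python
class VersionConflict(Exception):
    """Raised when a different version of a module is already loaded."""


class ProcessModules:
    """Toy model of the modules loaded into a single process."""

    def __init__(self):
        self._loaded = {}  # module name -> version tuple

    def load(self, name, version):
        current = self._loaded.get(name)
        if current is None:
            self._loaded[name] = version
        elif current != version:
            raise VersionConflict(
                f"{name} {current} is already loaded; cannot load {version}"
            )
        return self._loaded[name]


if __name__ == "__main__":
    process = ProcessModules()
    # The analyzer itself brings Roslyn 2.0 into the process first.
    process.load("Microsoft.CodeAnalysis.dll", (2, 0))
    try:
        # The csc task from toolset 14.0 then asks for Roslyn 1.3.
        process.load("Microsoft.CodeAnalysis.dll", (1, 3))
    except VersionConflict as error:
        print("conflict:", error)
```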

What can we do in this situation? Should we try to get the semantic model in a separate process or AppDomain? To get the model, we need Roslyn (i.e. all those libraries that cause the conflict), and transferring the model from one process/domain to another can be a non-trivial task, as this object contains references to the compilations and workspaces from which it was obtained.

A better option would be to move the C# analyzer into a backend process separate from our solution parser, which is common for the C++ and C# analyzers, and to make two versions of such backends using Roslyn 1.0 and 2.0, respectively. But this decision also has some significant disadvantages:

Let me explain the last point in more detail. During the lifetime of Visual Studio 2015 there were 3 updates, and in each of them the Roslyn compiler was updated too - from 1.0 to 1.3. If Roslyn is updated to version 2.1, for example, we would have to create a separate version of the analyzer backend for every minor update of Visual Studio; otherwise, the version conflict error could recur for users who do not have the latest version of Visual Studio.

I should note that the compilation failed also in those cases when we tried to work with the toolsets not using Roslyn, for example, version 12.0 (Visual Studio 2013). There was a different reason, but we did not dig deeper, because the problems we already saw were enough to reject this solution.

After digging into the causes of these errors, we saw the need to "provide" the toolset of version 15.0 together with the analyzer. This saves us from the version conflict between the Roslyn components and allows checking projects for all the previous versions of Visual Studio (the latest version of the compiler is backward compatible with all the previous versions of the C# language). A little earlier, I described why we decided not to include a "full-fledged" MSBuild 15 in our installer:

However, when investigating the problems that appeared in Roslyn during the compilation of the projects, we understood that our distribution already contained all the necessary Roslyn and MSBuild libraries (let me remind you that Roslyn performs a "fake" compilation, which is why the csc.exe compiler was not necessary). In fact, for a full-fledged toolset we were only lacking several props and targets files in which this toolset is described. These are simple xml files in the format of MSBuild projects that take up only several megabytes in total, so including them in the distribution is not a problem.

The main problem was, in fact, the need "to deceive" the MSBuild libraries and make them accept "our" toolset as a native one. Here is a comment from the MSBuild code: Running without any defined toolsets. Most functionality limited. Likely will not be able to build or evaluate a project. (e.g. reference to Microsoft.*.dll without a toolset definition or Visual Studio instance installed). This comment describes the mode in which an MSBuild library works when it is simply added to a project as a reference rather than used from MSBuild.exe. The comment doesn't sound very encouraging, especially the "Likely will not be able to build or evaluate a project." part.

So, how can we make the MSBuild 15 libraries use a third-party toolset? Let me remind you that this toolset is declared in the app.config of MSBuild.exe. It turned out that you can add the contents of that config to the config of our application (PVS-Studio_Cmd.exe) and set the environment variable MSBUILD_EXE_PATH for our process to the path of our executable file. And this method worked! At that moment the latest version of MSBuild was at Release Candidate 4. Just in case, we decided to see how things were going in the master branch of MSBuild on GitHub. And, as if by Murphy's law, a check had been added to the toolset selection code in the master branch: take a toolset from the app.config only if the name of the executable file is MSBuild.exe. Thus, our distribution gained a 0-byte file named MSBuild.exe, which the environment variable MSBUILD_EXE_PATH of the PVS-Studio_Cmd.exe process points to.
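The final arrangement can be sketched in Python as follows. The environment variable name MSBUILD_EXE_PATH and the file name MSBuild.exe come from the text; the function name and the directory passed to it are hypothetical, and the real analyzer does this inside its own .NET process rather than from a script.

```python
import os
import tempfile
from pathlib import Path


def prepare_msbuild_stub(distribution_dir: Path) -> Path:
    """Create an empty MSBuild.exe placeholder and point MSBUILD_EXE_PATH at it."""
    stub = distribution_dir / "MSBuild.exe"
    stub.write_bytes(b"")  # a 0-byte file: only its name and location matter
    # Environment changes made here are visible to this process and to any
    # child processes it starts afterwards.
    os.environ["MSBUILD_EXE_PATH"] = str(stub)
    return stub


if __name__ == "__main__":
    # A temporary directory stands in for the analyzer's installation folder.
    with tempfile.TemporaryDirectory() as folder:
        stub_path = prepare_msbuild_stub(Path(folder))
        print(stub_path.name, stub_path.stat().st_size, "bytes")
        print("MSBUILD_EXE_PATH =", os.environ["MSBUILD_EXE_PATH"])
```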

That was not the end of our troubles with MSBuild. It turned out that the toolset itself is not enough for projects that use MSBuild extensions, i.e. additional build steps. For example, these types of projects include WebApplication, Portable .NET's Core projects. When the corresponding component is installed in Visual Studio, these extensions are placed in a separate directory next to MSBuild. In our "installation" of MSBuild this directory was not present. We found a solution thanks to the ability to easily modify "our own" toolset. We bound the search paths (the MSBuildExtensionsPath property) of our toolset to a special environment variable, which the PVS-Studio_Cmd.exe process sets according to the type of the checked project. For example, if we have a WebApplication project for Visual Studio 2015, we (assuming that the user's project is compilable) search for the extensions for toolset version 14.0 and specify the path to them in our special environment variable. MSBuild needs these paths only to include additional props/targets files in the build scenario, which is why no version conflicts arose.
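A rough Python sketch of this idea is shown below. The article does not name the special environment variable, so `EXAMPLE_MSBUILD_EXTENSIONS_PATH`, the function name and the directory in the usage example are all invented placeholders; the sketch only shows a path being chosen by toolset version and published through the environment.

```python
import os

# Hypothetical variable name; the real one is not given in the article.
EXTENSIONS_ENV_VAR = "EXAMPLE_MSBUILD_EXTENSIONS_PATH"


def select_extensions_path(project_toolset: str, installed_extensions: dict) -> str:
    """Publish the extensions directory that matches the project's toolset.

    installed_extensions maps a toolset version (for example "14.0") to the
    directory where the extensions for that version were found.
    """
    path = installed_extensions.get(project_toolset)
    if path is None:
        raise LookupError(f"no MSBuild extensions found for toolset {project_toolset}")
    os.environ[EXTENSIONS_ENV_VAR] = path
    return path


if __name__ == "__main__":
    # Placeholder location; a real lookup would inspect the user's system.
    found = {"14.0": r"C:\example\msbuild-extensions\14.0"}
    print(select_extensions_path("14.0", found))
```

A toolset definition that reads this variable for its extensions search path would then pick up the directory chosen for the project being checked.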

As a result, the C# analyzer can work on a system with any of the supported versions of Visual Studio, regardless of the MSBuild version present. A potential issue would be the presence of custom user modifications of MSBuild build scenarios, but thanks to the independence of our toolset, these modifications can be made in the toolset provided with PVS-Studio if necessary.

One of the new features of Visual Studio 2017 that optimizes work with solutions containing a large number of projects is a delayed loading mode - "lightweight solution load".

Figure 3 - lightweight solution load

This mode can be enabled in the IDE for an individual solution or for all opened solutions. The peculiarity of the "lightweight solution load" mode is that only the tree of projects is shown in the Visual Studio explorer (without loading the projects). A chosen project is loaded (its inner structure expanded and its files loaded) only on request: after a corresponding user action (expanding the project node in the tree) or programmatically. A detailed description of the lightweight solution load is given in the documentation.

However, we faced several issues while implementing support for this mode:

In this regard, I would like to mention the insufficient amount of technical documentation on the lightweight solution load. In fact, all the documentation describing the internal mechanisms of the new Visual Studio 2017 capabilities related to the "lightweight solution load" is limited to a single article.

Speaking of those "pitfalls": while the Visual Studio RC was being refined, Microsoft not only eliminated defects but also renamed some methods in the newly added interfaces that we were using. As a result, we had to correct our support of the lightweight solution load after the release of PVS-Studio.

Why only after the release? The thing is that one of the interfaces we use turned out to be declared in a library that is included in Visual Studio twice - once in the main installation of Visual Studio and once as a part of the Visual Studio SDK package (a package for developing Visual Studio extensions). For some reason, the Visual Studio SDK developers did not update the RC version of this library in the release of Visual Studio 2017. As we had the SDK installed on practically all our machines (including the machine running the nightly builds, which is also used as a build server), we didn't have any issues during compilation and work. Unfortunately, we fixed this bug only after the release of PVS-Studio, when we got a bug report from a user. As for the article I mentioned earlier, at the time of posting this text it still refers to this interface by its old name.

The release of Visual Studio 2017 became the most "expensive" one for PVS-Studio since its creation. This was caused by several factors: significant changes in the work of MSBuild/Visual Studio and the inclusion of the C# analyzer in PVS-Studio (which now also needs to be supported).

When we started working on the static analyzer for C#, we expected Roslyn to let us do it very quickly. These expectations were, for the most part, fulfilled - the first version of the analyzer was released after only 4 months. We also thought that, in comparison with our C++ analyzer, the use of a third-party solution would let us save on supporting the new capabilities of the C# language as it evolves. This expectation was also confirmed. Despite all this, using a ready-made platform for static analysis turned out to be not as "painless" as expected, as we now see from our experience of supporting new versions of Roslyn/Visual Studio. While solving the problem of compatibility with new C# capabilities, Roslyn creates difficulties in completely different areas, because it is bound to third-party components (MSBuild and Visual Studio, to be more exact). The coupling between Roslyn and MSBuild significantly hinders its use in a standalone static code analyzer.

We are often asked why we don't "rewrite" our C++ analyzer on the basis of some ready-made solution, Clang, for example. Indeed, this would let us get rid of a number of present-day problems in our C++ core. However, apart from the necessity to rewrite the existing mechanisms and diagnostics, we should also not forget that with a third-party solution there will always be "pitfalls" that are impossible to foresee.
