This note is for beginner C++ programmers who wonder why everyone keeps telling them to use delete[] for arrays, while senior developers, instead of giving a clear explanation, hide behind the magical term "undefined behavior". A tiny bit of code, a few pictures, and a glimpse into the nuts and bolts of compilers: if you are interested, you're welcome to read on.

You may not have noticed, or simply not paid attention, but when you write code that releases the memory occupied by an array, you don't have to specify the number of elements to delete. And yet it all works.

SomeClass *p = new SomeClass[42]; // Specify the quantity

delete[] p; // Don't specify the quantity

What is this, magic? Partially, yes. And compiler developers have different approaches to describing and implementing it.
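To see that the count really is remembered somewhere, here is a minimal sketch. The class `Counted` and the function `destructors_run_by_array_delete` are hypothetical names introduced only for this illustration; the function creates an array, releases it with delete[], and reports how many destructors ran.

```cpp
#include <cstddef>

// Hypothetical class used only for this illustration.
struct Counted {
    static int destroyed;        // how many destructors have run so far
    ~Counted() { ++destroyed; }
};
int Counted::destroyed = 0;

// Allocates `count` objects, releases them with delete[], and
// returns how many destructors ran during the release.
int destructors_run_by_array_delete(std::size_t count) {
    Counted::destroyed = 0;
    Counted* p = new Counted[count];  // the quantity is given here...
    delete[] p;                       // ...but not here
    return Counted::destroyed;
}
```

Calling `destructors_run_by_array_delete(42)` should report 42 destructor calls, even though the number 42 never appears next to delete[].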

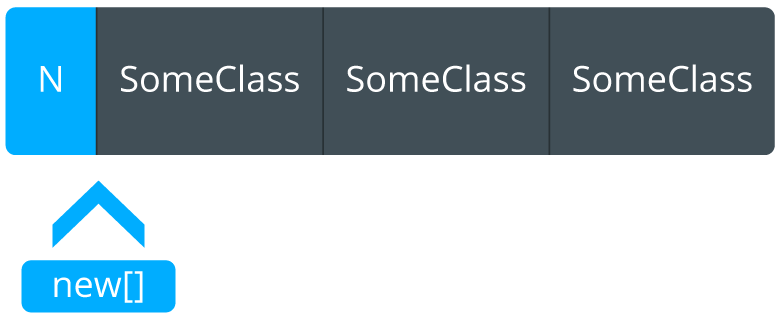

There are two main approaches to the way compilers remember the number of elements in an array: over-allocation and an associative array.

The first strategy, over-allocation, allocates slightly more memory than the elements need and stores the number of elements just before the first element of the array. Note that in this case the pointer you get from the new[] expression points to the first element of the array, not to the actual beginning of the allocated block.

Under no circumstances should this pointer be passed to the ordinary operator delete. Most likely, it will destroy only the first element of the array and leave the others intact. I wrote "most likely" for a reason: no one can predict every possible outcome or how the program will behave. It all depends on what objects were in the array and whether their destructors did something important. The result is classic undefined behavior, which is not what you expect when you try to delete an array.

Fun fact: in most implementations of the standard library, operator delete simply calls the free function. If we pass it a pointer to an array, we get yet another case of undefined behavior, because free expects a pointer obtained from calloc, malloc, or realloc. As we figured out above, that is not what it gets: the count is hidden at the beginning of the block, and the pointer is shifted forward to the first element.

What's different about the delete[] operator? It reads the number of elements in the array, calls the destructor for each object, and then deallocates the memory (along with the hidden variable).

In fact, this is basically the pseudocode that delete[] p; turns into when using this strategy:

// Get the number of elements in the array
size_t n = * (size_t*) ((char*)p - sizeof(size_t));

// Call the destructor for each of them
while (n-- != 0)
{
    p[n].~SomeClass();
}

// And finally, clean up the memory
operator delete[] ((char*)p - sizeof(size_t));

MSVC, GCC and Clang compilers use this strategy. You can easily verify this by looking at the memory management code in the associated repositories (GCC and Clang) or by using the Compiler Explorer service.
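The pseudocode above covers only the release side. The following hand-written sketch shows both sides of over-allocation on top of malloc and free. It is not what any particular compiler emits: `Item`, `kCookieSize`, `allocate_items` and `destroy_items` are hypothetical names, and the choice of cookie size is an assumption made to keep the elements properly aligned.

```cpp
#include <cstddef>
#include <cstdlib>
#include <cstring>
#include <new>

// Hypothetical element type that tracks how many objects are alive.
struct Item {
    static int alive;
    Item() { ++alive; }
    ~Item() { --alive; }
};
int Item::alive = 0;

// Bytes reserved in front of the elements (assumption: large enough for
// the count and a multiple of the element alignment).
constexpr std::size_t kCookieSize =
    sizeof(std::size_t) > alignof(Item) ? sizeof(std::size_t) : alignof(Item);

// Over-allocates, stores the count just before the first element,
// constructs the elements, and returns a pointer to the first one.
Item* allocate_items(std::size_t n) {
    void* raw = std::malloc(kCookieSize + n * sizeof(Item));
    if (raw == nullptr) throw std::bad_alloc();
    char* first = static_cast<char*>(raw) + kCookieSize;
    std::memcpy(first - sizeof(n), &n, sizeof(n));  // the hidden count
    Item* items = reinterpret_cast<Item*>(first);
    for (std::size_t i = 0; i < n; ++i) {
        ::new (static_cast<void*>(items + i)) Item();
    }
    return items;  // shifted pointer, not the start of the block
}

// Mirrors the pseudocode: read the count, destroy in reverse order,
// then free the block starting from its real beginning.
void destroy_items(Item* p) {
    if (p == nullptr) return;
    char* first = reinterpret_cast<char*>(p);
    std::size_t n;
    std::memcpy(&n, first - sizeof(n), sizeof(n));
    while (n-- != 0) {
        p[n].~Item();
    }
    std::free(first - kCookieSize);
}
```

Passing the pointer returned by `allocate_items` straight to `std::free` would hand free an address it never produced, which is the same kind of mismatch as releasing a new[] array with the ordinary delete.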

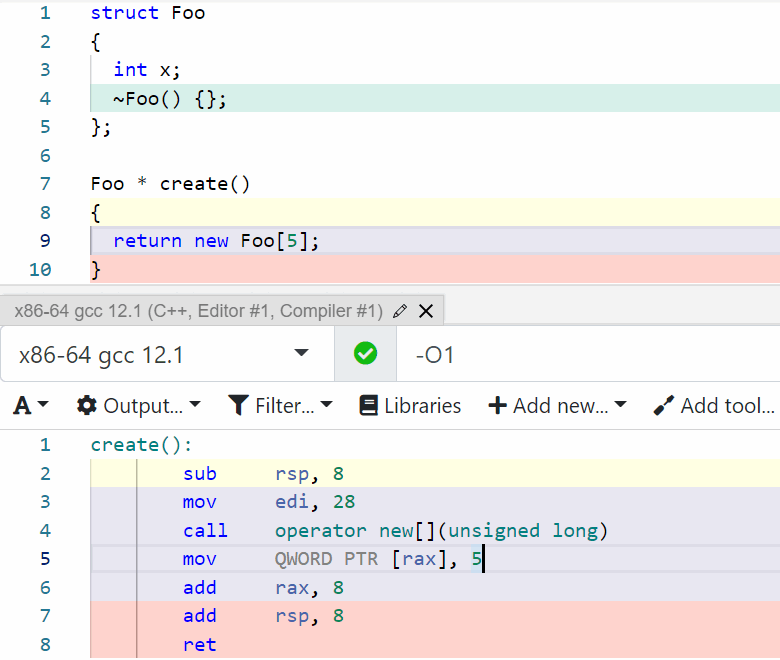

In the picture above (the upper part is the code, the lower part is the compiler's assembly output), I sketched a simple code fragment that defines a structure and a function that creates an array of these structures.

Note: the empty destructor of the structure is by no means extra code. According to the Itanium C++ ABI, the compiler should use a different approach to memory management for arrays of trivially destructible objects. There are a few more conditions; you can find them all in section 2.7, "Array Operator new Cookies", of the Itanium C++ ABI. It also specifies where and how the information about the number of elements must be stored.
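One way to observe this difference yourself is to record how many bytes each array new-expression requests. The sketch below is an experiment, not a guarantee: `Recording`, `Plain`, `WithDtor` and `extra_bytes` are hypothetical names, and the numbers you get depend on the compiler and ABI.

```cpp
#include <cstddef>
#include <new>
#include <type_traits>

// Hypothetical base class: its class-specific operator new[] records
// how many bytes the new-expression asked for.
struct Recording {
    static std::size_t last_request;
    static void* operator new[](std::size_t bytes) {
        last_request = bytes;
        return ::operator new[](bytes);
    }
    static void operator delete[](void* p) noexcept {
        ::operator delete[](p);
    }
};
std::size_t Recording::last_request = 0;

// Trivially destructible: no user-provided destructor.
struct Plain : Recording {
    int value;
};

// Not trivially destructible: the destructor is empty but user-provided.
struct WithDtor : Recording {
    int value;
    ~WithDtor() {}
};

// Returns how many bytes beyond n * sizeof(T) were requested for new T[n].
template <class T>
std::size_t extra_bytes(std::size_t n) {
    T* p = new T[n];
    std::size_t extra = Recording::last_request - n * sizeof(T);
    delete[] p;
    return extra;
}
```

On a toolchain that follows the Itanium C++ ABI, I would expect `extra_bytes<Plain>(4)` to be zero and `extra_bytes<WithDtor>(4)` to be the size of the hidden count, but treat both values as implementation details rather than something to rely on.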

So, in short, here is what happens at the assembly level:

The advantages of this method are its simple implementation and performance; the disadvantage is how severe the consequences are when the wrong operator delete is chosen. At best, the program crashes with a "Heap Corrupt" error; at worst, you will spend a long time hunting for the cause of the program's weird behavior.

The second strategy relies on a hidden global container that stores pointers to arrays along with the number of elements they contain. In this case there is no hidden data in front of the arrays, and the delete[] p; call is implemented as follows:

// Get the size of the array from the hidden global storage
size_t n = arrayLengthAssociation.lookup(p);

// Call the destructor for each element
while (n-- != 0)
{
    p[n].~SomeClass();
}

// Clean up the memory
operator delete[] (p);

Well, it doesn't look as "magical" as the previous way. Are there any other differences? Yes.

Besides the previously mentioned lack of hidden data in front of the array, the need to search for data in the global storage causes a slight slowdown. In return, the program may be more tolerant of the wrong choice of operator delete.
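As a rough illustration of this second strategy, here is a hand-written sketch that keeps the counts in a hidden table instead of in front of the array. It says nothing about how Cfront actually did it: `Node`, `array_lengths`, `allocate_nodes` and `destroy_nodes` are hypothetical names, and std::unordered_map simply stands in for the global container.

```cpp
#include <cstddef>
#include <new>
#include <unordered_map>

// Hypothetical element type that tracks how many objects are alive.
struct Node {
    static int alive;
    Node() { ++alive; }
    ~Node() { --alive; }
};
int Node::alive = 0;

// The hidden global storage: array address -> number of elements.
std::unordered_map<const void*, std::size_t>& array_lengths() {
    static std::unordered_map<const void*, std::size_t> table;
    return table;
}

// Allocates exactly n * sizeof(Node) bytes (no hidden data in front)
// and registers the count in the table.
Node* allocate_nodes(std::size_t n) {
    void* raw = ::operator new(n * sizeof(Node));
    Node* nodes = static_cast<Node*>(raw);
    for (std::size_t i = 0; i < n; ++i) {
        ::new (static_cast<void*>(nodes + i)) Node();
    }
    array_lengths()[nodes] = n;
    return nodes;  // same address the allocator returned
}

// Looks the count up, destroys the elements in reverse order,
// and releases the memory.
void destroy_nodes(Node* p) {
    if (p == nullptr) return;
    auto it = array_lengths().find(p);
    if (it == array_lengths().end()) return;  // unknown pointer: this sketch just ignores it
    std::size_t n = it->second;
    array_lengths().erase(it);
    while (n-- != 0) {
        p[n].~Node();
    }
    ::operator delete(p);
}
```

The extra lookup in `destroy_nodes` is where the slowdown comes from, and the fact that the pointer handed to the allocator is never shifted is what makes a mismatch less destructive.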

This approach was used in the Cfront compiler. We won't dwell on its implementation, but if you want to find out more about one of the first C++ compilers, you can check it out on GitHub.

All of the above are the nuts and bolts of compilers, and you should not rely on any particular behavior. This is especially true if you plan to port the program to different platforms. Fortunately, there are several ways to avoid this type of error:
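One way that fits here, shown as an illustrative sketch rather than an exhaustive list, is to let a standard library type own the array so that you never write delete or delete[] by hand. `Widget` and the two functions below are hypothetical names; std::vector and std::unique_ptr<T[]> are the standard facilities being demonstrated.

```cpp
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical element type that tracks how many objects are alive.
struct Widget {
    static int alive;
    Widget() { ++alive; }
    Widget(const Widget&) { ++alive; }
    ~Widget() { --alive; }
};
int Widget::alive = 0;

// std::vector owns its elements and destroys all of them on scope exit.
int live_after_vector_scope(std::size_t n) {
    {
        std::vector<Widget> widgets(n);
    }
    return Widget::alive;
}

// The array form of std::unique_ptr calls delete[] for you.
int live_after_unique_ptr_scope(std::size_t n) {
    {
        std::unique_ptr<Widget[]> widgets(new Widget[n]);
    }
    return Widget::alive;
}
```

Both functions should return 0, because every element is destroyed when the owning object goes out of scope and the right form of delete is chosen by the library instead of by you.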

If you are curious about undefined behavior and specific features of compilers, then I can recommend some extra material:
