On August 25th, 2021, the Linux kernel celebrated its 30th anniversary. Since then, it's changed a lot. We changed too. Nowadays, the Linux kernel is a huge project used by millions. We checked the kernel 5 years ago. So, we can't miss this event and want to look at the code of this epic project again.

Last time we found 7 peculiar errors. It's noteworthy that this time we've found fewer errors!

It seems strange. The kernel size has increased. The PVS-Studio analyzer now has dozens of new diagnostic rules. We've improved internal mechanisms and data flow analysis. Moreover, we introduced intermodular analysis and much more. Why has PVS-Studio found fewer exciting errors?

The answer is simple. The project quality has improved! That's why we are so excited to congratulate Linux on its 30th anniversary.

The project infrastructure was significantly improved. Now you can compile the kernel with GCC and Clang – additional patches are not required. The developers are improving automated code verification systems (kbuild test robot) and other static analysis tools (GCC -fanalyzer was implemented; the Coccinelle analyzer is enhanced, the project is checked through Clang Static Analyzer).

However, we found some errors anyway :). Now we're going to take a look at some really good ones. At least, we consider them "nice and beautiful" :). Moreover, it's better to use static analysis regularly, not once every five years. You won't find anything that way. Learn why it's important to use static analysis regularly in the following article: "Errors that static code analysis does not find because it is not used."

First, let's discuss how to run the analyzer.

Since now you can use the Clang compiler to compile the kernel, a special infrastructure was implemented in the project. It includes the compile_commands.json generator that creates the JSON Compilation Database file from .cmd files generated during the build. So, you need to compile the kernel to create the file. You do not have to use the Clang compiler but it's better to compile the kernel with Clang because GCC may have incompatible flags.

Here's how you can generate the full compile_commands.json file to check the project:

make -j$(nproc) allmodconfig # full config

make -j$(nproc) # compile

./scripts/clang-tools/gen_compile_commands.pyThen, you can run the analyzer:

pvs-studio-analyzer analyze -f compile_commands.json -a 'GA;OP' -j$(nproc) \

-e drivers/gpu/drm/nouveau/nouveau_bo5039.c \

-e drivers/gpu/drm/nouveau/nv04_fbcon.cWhy exclude these 2 files from the analysis? They contain a large number of macros that expand into huge lines of code (up to 50 thousand characters per line). The analyzer processes them for a long time, and the analysis may fail.

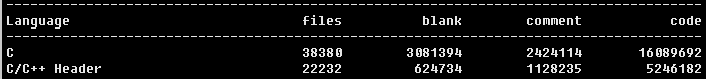

The recent PVS-Studio 7.14 release provides intermodular analysis for C/C++ projects. We could not miss the opportunity to try it out. Moreover, on such a huge code base:

Undoubtedly, the numbers are impressive. The overall project contains almost 30 million lines of code. When we first checked the project in this mode, we failed: when the intermodular information was merged, the RAM was overloaded, and the OOM-killer killed the analyzer process. We researched what happened and came up with a solution. We're going to include this important fix in the PVS-Studio 7.15 release.

To check the project in the intermodular mode, you need to add one flag to the pvs-studio-analyzer command:

pvs-studio-analyzer analyze -f compile_commands.json -a 'GA;OP' -j$(nproc) \

--intermodular \

-e drivers/gpu/drm/nouveau/nouveau_bo5039.c \

-e drivers/gpu/drm/nouveau/nv04_fbcon.cAfter the analysis, we get a report with thousands of warnings. Unfortunately, we didn't have time to configure the analyzer to exclude false positives. We wanted to publish the article right after the Linux kernel birthday. Therefore, we limited ourselves to the 4 exciting errors we found in an hour.

However, it's easy to configure the analyzer. Failed macros are responsible for most warnings. A little later, we'll filter the report and review it in depth. We hope to provide you with an opportunity to read another detailed article about the errors we found.

V595 The 'speakup_console[vc->vc_num]' pointer was utilized before it was verified against nullptr. Check lines: 1804, 1822. main.c 1804

static void do_handle_spec(struct vc_data *vc, u_char value, char up_flag)

{

unsigned long flags;

int on_off = 2;

char *label;

if (!synth || up_flag || spk_killed)

return;

....

switch (value) {

....

case KVAL(K_HOLD):

label = spk_msg_get(MSG_KEYNAME_SCROLLLOCK);

on_off = vt_get_leds(fg_console, VC_SCROLLOCK);

if (speakup_console[vc->vc_num]) // <= check

speakup_console[vc->vc_num]->tty_stopped = on_off;

break;

....

}

....

}The analyzer issues a warning because the speakup_console[vc->vc_num] pointer is dereferenced before the check. Glancing through the code, you may think it's a false positive. In fact, we do have a dereference here.

Guess where? :) The dereference happens in the spk_killed macro. Yeah, the variable has nothing to do with this, as it may seem at first glance:

#define spk_killed (speakup_console[vc->vc_num]->shut_up & 0x40)Most likely the programmer who changed this code did not expect dereferences. So, they made a check because somewhere a null pointer is passed. Such macros that look like variables and are not constants, make it difficult to maintain code. They make code more vulnerable to errors. Marcos are evil.

V519 The 'data' variable is assigned values twice successively. Perhaps this is a mistake. Check lines: 6208, 6209. cik.c 6209

static void cik_enable_uvd_mgcg(struct radeon_device *rdev,

bool enable)

{

u32 orig, data;

if (enable && (rdev->cg_flags & RADEON_CG_SUPPORT_UVD_MGCG)) {

data = RREG32_UVD_CTX(UVD_CGC_MEM_CTRL);

data = 0xfff; // <=

WREG32_UVD_CTX(UVD_CGC_MEM_CTRL, data);

orig = data = RREG32(UVD_CGC_CTRL);

data |= DCM;

if (orig != data)

WREG32(UVD_CGC_CTRL, data);

} else {

data = RREG32_UVD_CTX(UVD_CGC_MEM_CTRL);

data &= ~0xfff; // <=

WREG32_UVD_CTX(UVD_CGC_MEM_CTRL, data);

orig = data = RREG32(UVD_CGC_CTRL);

data &= ~DCM;

if (orig != data)

WREG32(UVD_CGC_CTRL, data);

}

}The example is taken from the driver code for Radeon video cards. Since the 0xfff value is used in the else branch, we can assume that this is a bit mask. At the same time, in the then branch, the value received in the above line is overwritten without applying a mask. The right code is likely to be as follows:

data = RREG32_UVD_CTX(UVD_CGC_MEM_CTRL);

data &= 0xfff;

WREG32_UVD_CTX(UVD_CGC_MEM_CTRL, data);V610 Undefined behavior. Check the shift operator '>>='. The right operand ('bitpos % 64' = [0..63]) is greater than or equal to the length in bits of the promoted left operand. master.c 354

// bitsperlong.h

#ifdef CONFIG_64BIT

#define BITS_PER_LONG 64

#else

#define BITS_PER_LONG 32

#endif /* CONFIG_64BIT */

// bits.h

/*

* Create a contiguous bitmask starting at bit position @l and ending at

* position @h. For example

* GENMASK_ULL(39, 21) gives us the 64bit vector 0x000000ffffe00000.

*/

#define __GENMASK(h, l) ....

// master.h

#define I2C_MAX_ADDR GENMASK(6, 0)

// master.c

static enum i3c_addr_slot_status

i3c_bus_get_addr_slot_status(struct i3c_bus *bus, u16 addr)

{

int status, bitpos = addr * 2; // <=

if (addr > I2C_MAX_ADDR)

return I3C_ADDR_SLOT_RSVD;

status = bus->addrslots[bitpos / BITS_PER_LONG];

status >>= bitpos % BITS_PER_LONG; // <=

return status & I3C_ADDR_SLOT_STATUS_MASK;

}Note that the BITS_PER_LONG macro can be 64-bit.

The code contains undefined behavior:

Perhaps, the author wanted to reduce the number of lines and declared the bitpos variable next to the status variable. However, the programmer did not take into account that int has a 32-bit size on 64-bit platforms, unlike the long type.

To fix this, declare the status variable with the long type.

V522 Dereferencing of the null pointer 'item' might take place. mlxreg-hotplug.c 294

static void

mlxreg_hotplug_work_helper(struct mlxreg_hotplug_priv_data *priv,

struct mlxreg_core_item *item)

{

struct mlxreg_core_data *data;

unsigned long asserted;

u32 regval, bit;

int ret;

/*

* Validate if item related to received signal type is valid.

* It should never happen, excepted the situation when some

* piece of hardware is broken. In such situation just produce

* error message and return. Caller must continue to handle the

* signals from other devices if any.

*/

if (unlikely(!item)) {

dev_err(priv->dev, "False signal: at offset:mask 0x%02x:0x%02x.\n",

item->reg, item->mask);

return;

}

// ....

}Here we have a classic error: if the pointer is null, we receive an error message. However, the same pointer is used when a message is formed. Of course, it's easy to detect the same kind of errors at the testing stage. But this case is slightly different – judging by the comment, the dereference can happen if "a piece of hardware is broken". In any case, it is bad code, and it must be fixed.

The Linux project check was an exciting challenge for us. We managed to try a new feature of PVS-Studio – intermodular analysis. The Linux kernel is a great world-famous project. Many people and organizations fight for its quality. We are happy to see that the developers keep refining the kernel quality. And we are developing our analyzer too! Recently, we opened our images folder. It demonstrated how the friendship of our analyzer with Tux started. Just take a look at these pictures!

Unicorn N81:

Unicorn N57:

An alternative unicorn with penguin N1:

Thanks for your time! Try to check your project with PVS-Studio. Since the Linux kernel turns 30, here's a promo code for a month: #linux30.

0