The concept of clean code is familiar to many people. Some programmers support it, while others think it hurts the industry. Casey Muratori, who's in the second group, stated that clean code is harmful advice for performance-conscious programmers. In this article, we'll look at the dispute between the concept founder, Robert Martin, and Casey Muratori to get to the heart of the matter.

"Honest disagreement is often a good sign of progress."

Mahatma Gandhi

Clean code is a phenomenon that has evolved from a set of rules for writing quality code into a whole philosophy. I think every developer on Earth knows about it. However, some argue that clean code is just fancy wrapping that hurts the tech industry.

The PVS-Studio team adheres to the concept of writing quality code, so we're interested in delving deeper into this topic and finding out who's right.

In the first part of this series, I felt it was important to dig into Robert Martin and Casey Muratori's discussion of clean code and performance, as it's the most comprehensive debate on the topic. The expertise of those involved is beyond question:

Robert Martin aka Uncle Bob is a software engineer, programmer, instructor, and author of software development books ("Clean Code", "The Clean Coder", "The Clean Architecture", etc.). He also co-authored the Manifesto for Agile Software Development and is the creator of the SOLID principles.

Casey Muratori is a programmer who specializes in game engine research and development. He's worked on projects that've been widely used in various game franchises, like Destiny, Age of Empires, Gears of War, etc.

It all seems to have started with Casey Muratori's video, "Clean" Code, Horrible Performance.

Note. The video came with a script, which I'll quote below.

In this video, Casey asks whether clean code is necessary and points out the performance degradation it causes:

If you look at a "clean" code summary and pull out the rules that actually affect the structure of your code, you get:

— prefer polymorphism to "if/else" and "switch";

— code should not know about the internals of objects it's working with;

— functions should be small;

— functions should do one thing;

— "DRY" — Don't Repeat Yourself.

These rules are rather specific about how any particular piece of code should be created in order for it to be "clean". What I would like to ask is, if we create a piece of code that follows these rules, how does it perform?

Next, he takes sample code for the experiment. It contains the base shape class and the classes that inherit from it: a circle, triangle, rectangle, and square. The code example follows the clean code rules, including those described above.

There's a specific task for the hierarchy: we need to find the total surface area of all the shapes. Casey gives a "clean" solution to the problem. He then deliberately deviates from the clean code rules: he uses switch instead of polymorphism, exposes details of the used objects to functions, gives them multiple tasks, and doesn't limit their size.

As a result, the final version of the program runs 15 times faster than the original version that followed the clean code rules. Casey makes some interesting comparisons in describing this:

That's like pushing 2023 hardware all the way back to 2008!
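To make the comparison concrete, here is a minimal C++ sketch of the two styles. It is not Casey's listing: the names `Shape`, `Circle`, `Rect`, `FlatShape`, `total_area_virtual`, and `total_area_switch` are made up for illustration, only two shape types are covered, and the sketch makes no claim about the speedup you would measure.

```cpp
#include <memory>
#include <vector>

constexpr double kPi = 3.14159265358979323846;

// "Clean" style: each shape hides its data behind a virtual call.
struct Shape {
    virtual ~Shape() = default;
    virtual double area() const = 0;
};

struct Circle : Shape {
    explicit Circle(double r) : radius(r) {}
    double area() const override { return kPi * radius * radius; }
    double radius;
};

struct Rect : Shape {
    Rect(double w, double h) : width(w), height(h) {}
    double area() const override { return width * height; }
    double width, height;
};

double total_area_virtual(const std::vector<std::unique_ptr<Shape>>& shapes) {
    double total = 0.0;
    for (const auto& s : shapes) total += s->area();  // one indirect call per shape
    return total;
}

// Switch style: one flat struct, and the function knows every shape's internals.
enum class Kind { Circle, Rect };

struct FlatShape {
    Kind kind;
    double width;   // holds the radius for a circle
    double height;  // unused for a circle
};

double total_area_switch(const std::vector<FlatShape>& shapes) {
    double total = 0.0;
    for (const auto& s : shapes) {
        switch (s.kind) {
            case Kind::Circle: total += kPi * s.width * s.width; break;
            case Kind::Rect:   total += s.width * s.height; break;
        }
    }
    return total;
}
```

Both functions compute the same sum. The difference is where the knowledge about each shape lives: inside the classes in the first version, inside the loop in the second.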

By the way, Casey found the Don't Repeat Yourself (DRY) concept to be quite useful:

Honestly, "don't repeat yourself" seems fine. As you saw from the listings, we didn't really repeat ourselves much. <...> If "DRY" means something more stringent — like don't build two different tables that both encode versions of the same coefficients — well then I might disagree with that sometimes, because we may have to do that for reasonable performance. But if in general "DRY" just means don't write the exact same code twice, that sounds like reasonable advice. And most importantly, we don't have to violate it to write code that gets reasonable performance.

At the end of the article, the author states that it's impossible to get sufficient performance when using the clean code rules:

We can still try to come up with rules of thumb that help keep code organized, easy to maintain, and easy to read. Those aren't bad goals! But these rules ain't it. They need to stop being said unless they are accompanied by a big old asterisk that says, "and your code will get 15 times slower or more when you do them."

The content couldn't go unnoticed. After the video was released, it seemed like everyone was talking about it. As I mentioned earlier, questions about clean code performance have been raised before, but Casey Muratori's video is like a manifesto of those discussions.

Note. I intentionally don't reveal all the details of the source because its author has already done it before me. So, there's no point in repeating Casey's arguments, and all the more reason to read the source. It's really interesting.

I'm not exaggerating or overstating how important Casey Muratori's video is, because Robert Martin, the ambassador of clean code (there's a grain of truth in every joke), responded to the arguments made in it.

Martin and Muratori's discussion took place in the GitHub repository and attracted quite a few developers. Next, I'll try to pick out the most interesting dialog parts and spice it all up with a handful of my own comments.

The discussion got to the point right away. Casey started by asking some targeted questions:

Most explanations on Clean Code I have seen from you include all the things I mentioned in the video - preferring inheritance hierarchies to if/switch statements, not exposing internals (the "Law of Demeter"), etc. But it sounds like you were surprised to hear me say that. Could you take a minute before we get started to explain more fully your ideas on type design, so I can get a sense for where the disconnect is here?

Robert's reaction was predictable and understandable:

<...> Yes, absolutely, the structures you were presenting are not the best way to squeeze every nanosecond of performance out of a system. Indeed, using those structures can cost you a lot of nanoseconds. They are not efficient at the nanosecond level. <...> But the kinds of environments where that kind of parsimony is important are nowadays few and far between. The vast majority of software systems require less than 1% of a modern processor's power. <...> It is economically better for most organizations to conserve programmer cycles than computer cycles. So, if there is a disconnect between us, I think it is only in the kinds of contexts that we prioritize.

In this dialog, I found Martin's answer somewhat enlightening. After all, the clean code concept has never been about writing the most efficient code possible. Following the clean code rules is meant to keep code quality high in the long run and to save the energy and mental well-being of the programmers who'll work on it.

However, that didn't stop Casey, and the discussion continued:

Just to get a little bit more concrete, so I understand what you're saying here, can we pick some specific software examples? For instance, I would assume we are both familiar with using Visual Studio and CLANG/LLVM. Would those both be reasonable examples of what you are calling the vast majority of software that requires less than 1% of a modern processor?

Uncle Bob brought up a rather interesting topic in his answer to this question. He suggested some classification of program modules according to the need for optimization:

<...> IDE's are interesting systems in that they span a huge domain of contexts. There are portions in which nanoseconds are extremely important, and other parts where they matter very little. <...> Making sure that the parsing code preserves nanoseconds can have a big effect. On the other hand, the code that sets up a configuration dialog does not need even a tiny fraction of that kind of efficiency.

So, the payoff from squeezing out performance differs from one context to another. We can keep speeding up code, splitting tasks into threads and processes, and so on. However, at some point, we'll still depend on I/O devices, data from the network, and the like. And in some places, the extra nanoseconds won't have any impact on the product's end user.

To the same topic, Martin added a general classification of software by performance needs. For example, why would a small Python calendar application need to push for maximum performance?

Then, after some elaboration, the two agreed on one point: not all code has to be high-performance.

Casey Muratori returned to the original question and gave it a bit more detail:

Given all of that, I'd like to return to basically the original question: why were you surprised that people, such as myself, associated "Clean Code" with effectively the opposite of what you have written here with respect to performance? None of the things I just listed are given prominent placement in your teachings. I'm not suggesting that you can't find a sentence here or there in a book or blog post that gives a nod to performance, of course. But by volume, the things you're saying here get barely a nod.

Casey refined the question so much that he even cited a particular series of Martin's lectures where Uncle Bob didn't mention clean code performance issues at all.

In fact, Uncle Bob accepted the criticism:

Frankly, I think that's a fair criticism. And, as it happens, I taught a class yesterday in which I spent more time talking about the performance costs, as well as the productivity benefits, of the disciplines and principles that I teach. So, thank you for the nudge. <...> You asked me whether I had been taking the importance of performance for granted. After some self-reflection I think that's likely. I am not an expert in performance. My expertise is in the practices, disciplines, design principles, and architectural patterns that help software development teams efficiently build and maintain large and complex software systems. And as every expert knows, and must fight against, expert hammers think everything looks like a nail.

The question is interesting because I, too, automatically assume that clean code isn't about performance. Uncle Bob had the same blind spot. This is something the dev community needs to talk about, and that's why we're here now. Martin came to the same conclusion:

That being said, I'm finding this conversation to be more beneficial than I had initially anticipated. It has nudged a change in my perspective. You should not expect that change to be enormous. You should not expect me to make videos about how horrible Clean Code is ;-). But if you watch the next 9-hour suite of videos I make, you'll probably see more than "barely a nod" towards performance issues. I think you can expect two or three nods.

Casey took that answer and let Robert decide whether or not to continue the discussion:

Honestly the nudge was most of what I hoped to accomplish here :) <...> So we could definitely end the conversation here. If you'd like to keep it going, the next thing to talk about would be the "strident denigration" you referred to. That would take us into architecture territory, not merely performance, but I'm happy to go there if you'd like. You're choice!

Ironically, performance issues emerged right in the middle of the dialog, and these were issues the participants could get their hands on!

Casey mentioned that when he typed in the GitHub web editor, the delay between hitting a key and seeing a character appear on the screen was quite long. In the video that Casey attached to his post, you can see that the delay causes an inconvenience. Such issues occur even with the rather fast Zen2 chip.

That's where the investigation began!

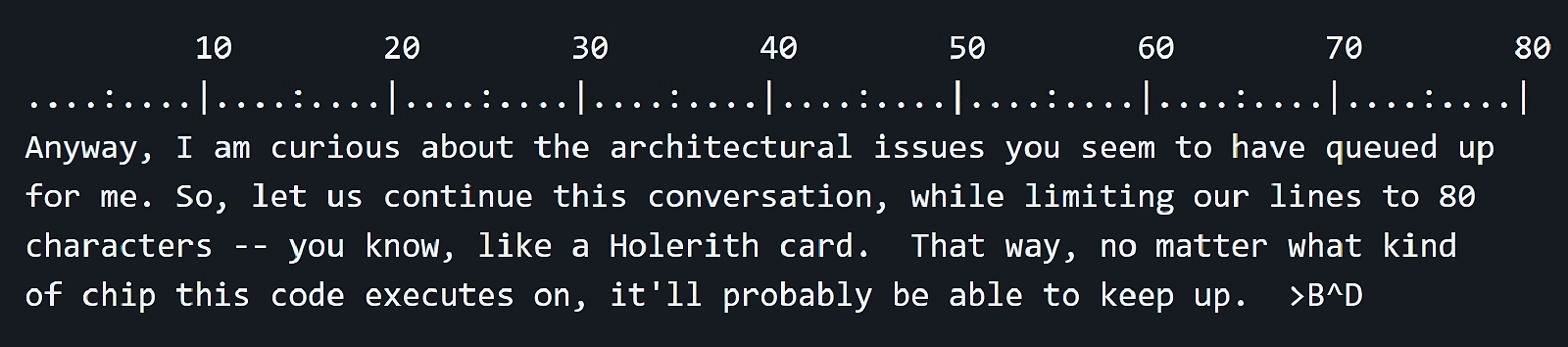

Robert assumed that the issue was the paragraph length:

Now why would that be? First of all, I imagine that we are both typing into the same javascript code. After all, nobody wants to use the tools written into the browser anymore ;-) I mean, JavaScript is just so much better. Secondly, I also imagine that the author of this code never anticipated that you and I would pack whole paragraphs into a single line. (Note the line numbers to the left.) Even so, the lag becomes very apparent at the 25cps rate by about 200-300 characters. So what could be going on?

To work around the issue, he suggested hitting return at the end of each line.

However, Casey accurately detected the flaw by checking the Performance tab in Chrome:

It's the "emoji picker"! And your guess about the kind of problem was not far off.

This feature scans the typed text backward from the cursor, looking for the start of an emoji. So, the longer the paragraph, the more work each keystroke triggers, and the editor becomes sluggish. Casey found a temporary solution:

Whenever we get to the point in a paragraph where the github text editor becomes prohibitively slow, we can just pretend we are starting a new emoji and this solves the problem by preventing the picker from scanning as far backward. We can do this by just typing a colon, because that's the thing that normally starts emoji! For example, right now this paragraph is starting to be really sluggish. So, I'm just going to put a colon right here: and voila!

Uncle Bob checked it:

Don't:you:just:love:being:a:programmer:and:diagnosing:interesting:problems:

from:the:symptoms?

To:do:that:well:you:have:to:think:like:a:programmer.::
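The effect Casey describes can be illustrated with a small sketch. GitHub's actual editor code isn't shown in the discussion, so everything here is assumed: `chars_scanned_for_emoji` is a hypothetical function that walks backward from the end of the text until it meets a colon or the start of the text and reports how many characters it had to inspect for one keystroke.

```cpp
#include <cstddef>
#include <string>

// Hypothetical model of a picker that looks backward from the cursor for the
// ':' that could start an emoji name. Returns how many characters it inspects.
std::size_t chars_scanned_for_emoji(const std::string& text) {
    std::size_t scanned = 0;
    for (std::size_t i = text.size(); i > 0; --i) {
        ++scanned;
        if (text[i - 1] == ':') break;  // a colon stops the backward scan
    }
    return scanned;
}
```

In this model, a 300-character paragraph without a colon costs 300 inspections per keystroke, while the same paragraph with a colon typed three characters ago costs only 4. That matches the behavior of the workaround, though the real picker may do far more work per character.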

While this may not be directly related to the main topic, I thought it was important to share because it reveals some of the discussants' motivations. Robert and Casey are avid researchers who've come together not to question each other's expertise, but to get to the bottom of an issue and figure out what to do next.

They continued after revealing the secrets of typing on GitHub. Casey shifted the discussion to architectural issues, starting with points from Clean Code that he agreed with. The first is readability, or as Casey said, "basic code readability".

I think most developers agree with this rule as well. It helps when code reads from top to bottom and tells you what it does and where.

However, Casey said that in some cases, variables named following a simple pattern a, b, c, etc. may well be acceptable. A little further on, Martin puts it more clearly:

My rule for variable names is that the length of a variable's name should be proportional to the size of the scope that contains it. <...> BTW, my rule for functions is the opposite. The name of a function (or a class) should be inversely proportional to the size of the scope that contains it.

In other words, it's fine to use a variable named i in a small loop, but if we have a larger scope, we should make the name more explicit. It's the opposite case with functions, mostly for convenience. If a function is used in many places, a short name makes it faster and easier to call.
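Here is a short sketch of how that rule might look in practice. All names are invented for illustration: the function that could be called from anywhere gets a short name, the helper with a single narrow use gets a long one, and the variables follow the opposite pattern.

```cpp
#include <cstddef>
#include <vector>

// Wide scope (whole program): a long, descriptive variable name.
constexpr double kMaxAllowedTemperatureCelsius = 85.0;

// Used from many places: a short function name.
double sum(const std::vector<double>& v) {
    double s = 0.0;                                // tiny scope: a short name
    for (std::size_t i = 0; i < v.size(); ++i) {   // loop index: `i` is enough
        s += v[i];
    }
    return s;
}

// Narrow, single-purpose use: a long, precise function name.
bool averageTemperatureExceedsAllowedMaximum(const std::vector<double>& readings) {
    if (readings.empty()) return false;
    double average = sum(readings) / static_cast<double>(readings.size());
    return average > kMaxAllowedTemperatureCelsius;
}
```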

The second point Casey brought up was the tests. Again, the discussants agreed that they're helpful and necessary, but Casey pointed out a specific divergence in understanding tests:

So, I think we're roughly on the same page about tests. Where we might differ is to what extent tests "drive" the development process. I get the sense that your perspective is "write the tests first", or something close to that. I don't know that I would go that far. I tend to develop first, and as I find things where I think a test would help prevent regressions, I'll add the test then.

This divergence is easy to spot. Uncle Bob often mentions Extreme Programming and the Test-Driven Development (TDD) methodology in his books. He considers this methodology one of the best ways to handle tests.

In his response, Casey said that, firstly, it's quite difficult to test everything automatically because there may be many things in code that tests can't check. Secondly, tests are supposed to reduce development time. But if we write tests that are never used or don't find bugs, we won't save money and will waste a lot of effort and time writing them.

However, Martin had his own understanding of why tests are necessary:

I am perhaps a bit more rigorous in the way I write tests. I tend to write failing tests first, and then make them pass, in a very short cycle. This helps me think through the problem. It also gives me the perspective of writing the code after something has used it. It often forces me to decouple elements from each other so that they can be independently tested. Finally, it leaves me with a suite of tests that has very high coverage, and that I have seen both pass and fail. And that means I trust that test suite.

Uncle Bob understood the issue of not being able to test the whole code. He admitted that he keeps parts of the code without tests for this reason.

Martin's main reason for using the "test first" rule is his confidence that when changes are made to the code, tests immediately show what's broken. So, we can quickly refactor the code covered by tests, enhance its performance, and so on:

I consider it to be the equivalent of double-entry bookkeeping for accounting. I try to say everything twice and make sure the two statements agree.

On the issue of testing, the discussion participants agreed, and Martin summarized their little dispute as follows:

It seems the difference between us can be stated as follows:

— I write tests unless there is a good reason not to.

— You write tests when there is a good reason to.

After discussing a rich list of topics, Casey suggested moving on to the most important one:

So perhaps we can move on to something we will disagree on, which is "classes vs. switch statements"? Of course, those are just particular implementations of a more general divide, which is operand-primal vs. operation-primal architecture.

He said that clean code is more likely to be operand-primal, and it follows the basic OOP concepts. So, the methodology prioritizes adding new types for existing operations over adding new operations for existing types. Although Casey worked with such code and saw how common it is in the tech industry, he still didn't see the benefits of this approach.

Uncle Bob clarified a few concepts and aligned them with the ideas in the Clean Code book. In the "Objects and Data Structures" chapter, he covered polymorphism usability. There, he also subdivided code into two types: procedural and object-oriented. These definitions would be used in the discussion.

Then, Martin sequentially explained what pros and cons OO has in such a context:

From the point of view of counting nanoseconds, OO is less efficient. You made this point in your video. However, the cost is relatively small if the functionality being deployed is relatively large. The shape example, used in your video, is one of those cases where the deployed functionality is small. On the other hand, if you are deploying a particular algorithm for calculating the pay of an employee, the cost of the polymorphic dispatch pales in comparison to the cost of the deployed algorithm.

From the point of view of programmer effort, OO may or may not be less efficient. It could be less efficient if you knew you had all the types. In that case switch statements make it easier to add new functions to those types. You can keep the code organized by function rather than type. And that helps at a certain level of cognition. All else being equal, programs are about functions. Types are artificial. And so, organizing by function is often more intuitive. But all else is seldom equal...

From the point of view of flexibility and on-going maintenance, OO can be massively more efficient. If I have organized my code such that types define the basic functionality, and variations in that functionality can be relegated to subtypes, then adding new variations to existing functionality is very easy and requires a minimum of modification to existing functions and types. I am not forced to find all the switch statements that deploy functions over types and modify all those modules. Rather I can create a new subtype that has all the variations gathered within it. I add that new module to the existing system, without having to modify many other modules.

So, given the last point, OO helps programmers comply with the Open-Closed Principle (OCP), even if not in every case.

However, Martin pointed out another detail here: the switch usage may create an outbound chain of dependencies to lower-level modules. As a result, if a programmer makes any change to one of the lower-level modules, it leads to recompiling and redeploying the switch statement and all the higher-level dependent modules.

But, if a programmer uses OO, there's a dependency inversion that prevents changes in the lower levels from causing the previously described wave of recompilation and redeployment. It was this point that Uncle Bob called his main architectural argument.

However, Casey Muratori had a different take on it. Suppose there are n types and m operations. With polymorphism, moving from n to n + 1 types means adding one class, so only one file needs recompiling. With switch, there are m functions with n cases each, so moving to n + 1 types means going through all these functions and adding a new case to each, which requires recompiling m files. The problem is that adding a new operation has the opposite effect. With switch, a developer only needs to add function m + 1. With polymorphism, they need to go through all the classes and add the operation to each, i.e. recompile n files versus just one with switch.

Casey said that in this case, Robert turned the situation slightly in his favor, leaving other details unmentioned:

Note that in both cases you are always adding the same number of things (member functions or cases). The only question is how spread out they were. Both cases have one type of change where the code is clustered together, and one type of change where the code is scattered, and they are exactly "inverted" in which one it is. But neither gets a win, because they are both equally good or equally bad when you consider both types of additions (types and operations).
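The symmetry Casey points out can be seen in a tiny sketch with two types and two operations. This is only an illustration with invented names (`Square`, `Rect`, `FlatShape`, `area`, `perimeter`), not code from the discussion. Each style contains the same four pieces of logic. They are just grouped differently.

```cpp
// Operand-primal (classes): code is grouped by type.
struct Shape {
    virtual ~Shape() = default;
    virtual double area() const = 0;
    virtual double perimeter() const = 0;  // a new operation touches every class
};

struct Square : Shape {
    explicit Square(double s) : side(s) {}
    double area() const override { return side * side; }
    double perimeter() const override { return 4.0 * side; }
    double side;
};

struct Rect : Shape {  // a new type is one new class, nothing else changes
    Rect(double w, double h) : width(w), height(h) {}
    double area() const override { return width * height; }
    double perimeter() const override { return 2.0 * (width + height); }
    double width, height;
};

// Operation-primal (switch): code is grouped by operation.
enum class Kind { Square, Rect };  // a new type touches every switch below

struct FlatShape {
    Kind kind;
    double width, height;
};

double area(const FlatShape& s) {
    switch (s.kind) {
        case Kind::Square: return s.width * s.width;
        case Kind::Rect:   return s.width * s.height;
    }
    return 0.0;  // not reached for valid kinds
}

double perimeter(const FlatShape& s) {  // a new operation is one new function
    switch (s.kind) {
        case Kind::Square: return 4.0 * s.width;
        case Kind::Rect:   return 2.0 * (s.width + s.height);
    }
    return 0.0;  // not reached for valid kinds
}
```

Adding a `Circle` means one new class in the first half but a new case in both `area` and `perimeter` in the second. Adding a third operation means one new function in the second half but a new member in every class in the first.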

Then, Robert Martin suggested bringing into the discussion a hypothetical compiler that converts procedural and object-oriented code into the same binary code. That should help them take the topic away from performance directly to architecture. However, Casey deemed it unnecessary:

It doesn't really address how we're supposed to write actual code, which is what we're talking about here.

Uncle Bob also highlighted that the styles discussed make little difference in source file management, and the choice here is more a matter of taste. Dependency inversion, however, is what makes a vast difference. Casey summarized these points for clarity:

Run-time: Favors operation-primal

Source code: No difference

Dependency graph: Favors operand-primal

Casey wanted to find out what the benefit of dependency inversion was from Robert's perspective. Uncle Bob replied that separating low-level modules from high-level ones matters only to humans, since machines don't care about it. He also explained why this separation is needed:

— It allows us to use, modify, and replace low level details (e.g. IO devices) without impacting the source code of the high level policy.

— It allows us to compile high level components without needing the compiler to read the low level components. This protects the high level components from changes to those low level components.

— It allows us to set up a module hierarchy that has no dependency cycles. (No need for #ifndefs in the header files. ;-) Which keeps the order of compiles and deployments deterministic.

— It allows us to break up our deployments between high level and low level components. So, for example, we could have a high level DLL/JAR/EPROM and a low level DLL/JAR/EPROM. (I mention the EPROM because I used precisely this kind of separation in the early 80s with embedded hardware that had to be maintained in the field. Shipping one 1K EPROM was a lot better than shipping all 32 ;-)

— It allows us to organize our source code by level, isolating higher level functionalities from lower level functionalities. At every level we eschew the lower level concerns, relegating them to source code modules that the current level does not depend upon. This creates a hierarchy of concerns that is, in human terms, intuitive and easy to navigate.
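A minimal C++ sketch of the dependency inversion behind these points, under assumed names (`Output`, `print_report`, and `MemoryOutput` are hypothetical and don't come from the discussion). The high-level function depends only on an abstract interface, and the low-level detail implements that interface, so it could live in a separate module and be replaced without touching the policy.

```cpp
#include <string>
#include <vector>

// High-level side: owns the abstraction and knows nothing about real devices.
struct Output {
    virtual ~Output() = default;
    virtual void write_line(const std::string& line) = 0;
};

void print_report(const std::vector<int>& values, Output& out) {
    int total = 0;
    for (int v : values) total += v;
    out.write_line("items: " + std::to_string(values.size()));
    out.write_line("total: " + std::to_string(total));
}

// Low-level side: depends on the abstraction, not the other way round.
// Here it only collects lines in memory. A console or file version would
// implement the same interface.
struct MemoryOutput : Output {
    void write_line(const std::string& line) override { lines.push_back(line); }
    std::vector<std::string> lines;
};
```

Swapping `MemoryOutput` for another implementation requires no change to `print_report`, which is the first benefit in the list above.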

To check whether the separation described above is possible in both types of architecture, Robert Martin showed a Java code fragment:

```java
public class Payroll {
  public void doPayroll(Date payDate) {
    for (Employee e : DB.getAllEmployees()) {
      if (isPayDay(e, payDate)) {
        Paycheck check = calculatePaycheck(e, payDate);
        pay(e, check);
      }
    }
  }
}
```

This module, a payroll system, is high-level. It deliberately hides the details of how salaries are paid, and its imports don't reveal any module that implements those details.

Martin's question was whether it's possible to do the same in the procedural architecture. Casey had previously mentioned union, but Robert supposed that it wouldn't help hide the implementation details.

Casey answered that there isn't much difference between hierarchies and union in terms of detail disclosure:

There is no need for any of the things that you're suggesting to be exposed. The only reason you would expose them is if you would like to get the performance increase that comes from allowing the compiler to optimize across the call.

He also backed up his words with the example of a typical header file:

```cpp
struct file;

file *Open(...);
void Read(file *File, ...);
void Close(file *File);
```

Casey argued that the non-hierarchy method has fewer constraints across the interface boundary. In the case of hierarchy, the dispatch code is moved outside the library boundary, i.e. the implementation detail is pushed across the boundary.

Martin corrected him on the term "hierarchy", as Casey most likely meant the "interface/implementation" approach, not deep inheritance hierarchies. Uncle Bob agreed that the .c/.h split hides the implementation well because, in C, it is a form of dependency inversion.
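To show how such a header can hide everything behind an opaque type, here is a single-file sketch. The parameter lists and the in-memory backing store are assumptions made for illustration, because Casey's header leaves the parameters elided. In a real project, the first part would go into the header and the second into the implementation file.

```cpp
#include <cstddef>
#include <cstring>
#include <string>

// --- what the header would expose: an opaque type and free functions ---
struct file;  // callers never see the fields
file* Open(const char* contents);
std::size_t Read(file* File, char* buffer, std::size_t size);
void Close(file* File);

// --- what the implementation file would contain ---
struct file {
    std::string data;      // assumed in-memory contents, for illustration only
    std::size_t position;  // current read offset
};

file* Open(const char* contents) {
    return new file{contents, 0};
}

// Copies up to `size` bytes into `buffer` and returns the number copied.
std::size_t Read(file* File, char* buffer, std::size_t size) {
    std::size_t left = File->data.size() - File->position;
    std::size_t count = size < left ? size : left;
    std::memcpy(buffer, File->data.data() + File->position, count);
    File->position += count;
    return count;
}

void Close(file* File) {
    delete File;
}
```

Code that includes only the declarations can call `Open`, `Read`, and `Close` but can't touch `data` or `position`, so the layout of `file` can change without recompiling the callers.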

After clarifying some details, Robert wrote that the dialog went from specifics to individual preference and offered to wrap it up.

Btw, I honestly felt that the discussion was moving in a strange direction. Opponents were so passionate about what they were talking about that the vector of the debate shifted significantly.

However, Casey suggested that they should continue to discuss at what point clean code could save programmers' time.

Martin responded that many programming styles can both save developers' time and waste a lot of it. To illustrate it, he attached this code fragment:

```c
#include <stdio.h>
main(tp,pp,ap)
int tp;
char **pp;
char **ap;
{int t=tp;int _=pp;char *a=ap;return!0<t?t<3?main(-79,-13,a+main(-87,1-_,
main(-86, 0, a+1 )+a)):1,t<_?main(t+1, _, a ):3,main ( -94, -27+t, a
)&&t == 2 ?_<13 ?main ( 2, _+1, "%s %d %d\n" ):9:16:t<0?t<-72?main(_,
t,"@n'+,#'/*{}w+/w#cdnr/+,{}r/*de}+,/*{*+,/w{%+,/w#q#n+,/#{l,+,/n{n+\
,/+#n+,/#;#q#n+,/+k#;*+,/'r :'d*'3,}{w+K w'K:'+}e#';dq#'l q#'+d'K#!/\
+k#;q#'r}eKK#}w'r}eKK{nl]'/#;#q#n'){)#}w'){){nl]'/+#n';d}rw' i;# ){n\
l]!/n{n#'; r{#w'r nc{nl]'/#{l,+'K {rw' iK{;[{nl]'/w#q#\
n'wk nw' iwk{KK{nl]!/w{%'l##w#' i; :{nl]'/*{q#'ld;r'}{nlwb!/*de}'c \
;;{nl'-{}rw]'/+,}##'*}#nc,',#nw]'/+kd'+e}+;\
#'rdq#w! nr'/ ') }+}{rl#'{n' ')# }'+}##(!!/")
:t<-50?_==*a ?putchar(a[31]):main(-65,_,a+1):main((*a == '/')+t,_,a\
+1 ):0<t?main ( 2, 2 , "%s"):*a=='/'||main(0,main(-61,*a, "!ek;dc \
i@bK'(q)-[w]*%n+r3#l,{}:\nuwloca-O;m .vpbks,fxntdCeghiry"),a+1);}
```

I think it's important that clean code isn't just dynamic polymorphism. Martin said this too and also mentioned naming heuristics, dependency management, code organization, and others.

Casey followed up his question with specific alternatives to classes:

But what I am trying to get at is which practices make the dynamically-linked system more or less likely to "save programmer cycles". I would argue - very strongly in this case specifically, but in most cases generally - that enums/flags and if/switches are much better than classes for this design. I think they are much better along every axis. They will be faster, easier to maintain, easier to read, easier to write, easier to debug, and result in a system that is easier for the user to work with as well.

Casey asked Robert to imagine an OS interface for I/O designed according to clean code principles.

Uncle Bob answered the same way he once did: create the File abstraction with open, close, read, write, and seek operations. The I/O devices were categorized as types, so it didn't matter which device was used in the future. Thanks to this organization, it's possible to implement the following program to copy one device to another without knowing what the device is:

```c
#include <stdio.h>

void copy() {
  int c;
  while ((c = getchar()) != EOF)
    putchar(c);
}
```

Uncle Bob suggested looking at the same interface but implemented with switch:

```c
#include "devids.h"
#include "console.h"
#include "paper_tape.h"
#include "...."
#include "...."
#include "...."
#include "...."
#include "...."

void read(file* f, char* buf, int n) {
  switch(f->id) {
    case CONSOLE: read_console(f, buf, n); break;
    case PAPER_TAPE_READER: read_paper_tape(f, buf, n); break;
    case....
    case....
    case....
    case....
    case....
  }
}
```

We can see that the more devices there are, the more case statements there will be, and the number of outbound dependencies grows with them. Since each operation (open, close, read, write, and seek) needs its own switch, there are five such files in total. In other words, each of them has to be updated when a new device is added. There's also the need to recompile every file that includes devids.h.

However, Martin said there's a time and place for both switch and dynamic polymorphism.

Casey asked Robert to elaborate on this example and explain how the approach from a mixture of the SOLID principles and clean code could save programmers' time. And Martin did it!

First, he implemented the base class:

```cpp
#include "file.h"

class raw_device {
  public:
    virtual file* open(char* name) = 0;
    virtual void close(file* f) = 0;
    virtual void read(file* f, size_t n, char* buf) = 0;
    virtual void write(file* f, size_t n, char* buf) = 0;
    virtual void seek(file* f, int n) = 0;
    virtual char* get_name() = 0;
};
```

Next, he added the I/O driver:

```cpp
#include "raw_device.h"

class new_device : public raw_device {
  public:
    virtual file* open(char* name);
    virtual void close(file* f);
    virtual void read(file* f, size_t n, char* buf);
    virtual void write(file* f, size_t n, char* buf);
    virtual void seek(file* f, int n);
    virtual char* get_name();  // return type must match the base class
};
```

And here's the implementation:

```cpp
#include "new_device.h"

file* new_device::open(char* name) {...}
void new_device::close(file* f) {...}
void new_device::read(file* f, size_t n, char* buf) {...}
void new_device::write(file* f, size_t n, char* buf) {...}
void new_device::seek(file* f, int n) {...}
char* new_device::get_name() {
  // A string literal can't be returned as char* in modern C++.
  static char name[] = "new_device";
  return name;
}
```

All that remained was to add a module to create the I/O driver instances and load them into the device map:

```cpp
// io_driver_loader.cc
#include "new_device.h"
#include "new_device2.h"
#include "new_device3.h"
...

void load_devices(device_map& map) {
  map.add(new new_device());
  map.add(new new_device2());
  map.add(new new_device3());
  ...
}
```

Then he counted how many operations it takes to add a new device with this design and concluded that the switch-based version takes more.

Casey made a few adjustments. Firstly, he simplified the task: Uncle Bob was to create a raw device without a file. As a result, the driver abstraction got a little smaller:

```cpp
class raw_device {
  public:
    virtual void read(size_t offset, size_t n, char* buf) = 0;
    virtual void write(size_t offset, size_t n, char* buf) = 0;
    virtual char* get_name() = 0; // return the name of this device.
};
```

So, the driver implementation changed as well:

#include "raw_device.h" // from the DDK

class new_device : public raw_device {
  public:
    virtual void read(size_t offset, size_t n, char* buf);
    virtual void write(size_t offset, size_t n, char* buf);
    virtual char* get_name(); // return type matches raw_device::get_name
};

Second, Casey suggested adding a function that looks up the requested device in the device map:

raw_device *find_raw_device(char *name) {
    raw_device *device = global_device_map[name];
    return device;
}

Martin agreed, pointing out that the number of operations had been significantly reduced.

Casey suggested imagining an API that would allow the code described above to respond to user requests.

Uncle Bob thought it would be a good idea to implement such an API as a linkable function that would provide access to delegator functions:

read(char* name, size_t offset, size_t n, char* buf);
write(char* name, size_t offset, size_t n, char* buf);

These functions would use Casey's proposed find_raw_device function to delegate each request to a specific raw_device instance.
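The delegation described above can be sketched roughly as follows. This is only an illustration, not code from the discussion: memory_device, register_device, the std::map behind global_device_map, the bool return values, and the const qualifiers are all assumptions made so the example compiles on its own.

```cpp
#include <cstddef>
#include <cstring>
#include <map>
#include <string>

// Reduced driver interface from the discussion. The const on the name
// string is an assumption so that returning a literal is well-formed.
class raw_device {
public:
    virtual ~raw_device() = default;
    virtual void read(std::size_t offset, std::size_t n, char* buf) = 0;
    virtual void write(std::size_t offset, std::size_t n, char* buf) = 0;
    virtual const char* get_name() = 0;
};

// Hypothetical driver backed by a small in-memory buffer.
class memory_device : public raw_device {
public:
    void read(std::size_t offset, std::size_t n, char* buf) override {
        if (in_range(offset, n)) std::memcpy(buf, storage_ + offset, n);
    }
    void write(std::size_t offset, std::size_t n, char* buf) override {
        if (in_range(offset, n)) std::memcpy(storage_ + offset, buf, n);
    }
    const char* get_name() override { return "memory_device"; }

private:
    static bool in_range(std::size_t offset, std::size_t n) {
        return offset <= sizeof(storage_) && n <= sizeof(storage_) - offset;
    }
    char storage_[64] = {};
};

// Assumption: the device map is a global std::map keyed by device name.
std::map<std::string, raw_device*> global_device_map;

// Hypothetical helper that plays the role of load_devices for one driver.
void register_device(raw_device* device) {
    global_device_map[device->get_name()] = device;
}

// Uses find() instead of operator[] so that looking up an unknown name
// does not insert an empty entry into the map.
raw_device* find_raw_device(const char* name) {
    auto it = global_device_map.find(name);
    return it == global_device_map.end() ? nullptr : it->second;
}

// Delegator functions: look the device up by name and forward the call.
// Returning false for an unknown device is an assumption of this sketch.
bool read(const char* name, std::size_t offset, std::size_t n, char* buf) {
    raw_device* device = find_raw_device(name);
    if (device == nullptr) return false;
    device->read(offset, n, buf);
    return true;
}

bool write(const char* name, std::size_t offset, std::size_t n, char* buf) {
    raw_device* device = find_raw_device(name);
    if (device == nullptr) return false;
    device->write(offset, n, buf);
    return true;
}
```

A caller would register a driver once and then address it only by name, for example write("memory_device", 0, 6, buffer). Adding another driver means writing one more class and registering it; the delegators stay unchanged.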

Robert suggested returning from the hypothetical example to the main topic. He pointed out that he hadn't betrayed the clean code principles and repeated his point about switch:

Switch statements have their place. Dynamic polymorphism has its place. Dynamic things are more flexible than static things, so when you want that flexibility, and you can afford it, go dynamic. If you can't afford it, stay static.
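To make the trade-off in this quote more concrete, here is a minimal sketch of the same operation written both ways. The names device, disk_device, tape_device, device_kind, and name_of are hypothetical and don't come from the discussion.

```cpp
#include <string>

// Dynamic variant: each driver is its own class, and the call is resolved
// at run time through the virtual table.
struct device {
    virtual ~device() = default;
    virtual std::string name() const = 0;
};

struct disk_device : device {
    std::string name() const override { return "disk"; }
};

struct tape_device : device {
    std::string name() const override { return "tape"; }
};

// Static variant: the set of drivers is a closed enum, and every operation
// is a function that switches over it.
enum class device_kind { disk, tape };

std::string name_of(device_kind kind) {
    switch (kind) {
        case device_kind::disk: return "disk";
        case device_kind::tape: return "tape";
    }
    return "unknown";
}
```

In the dynamic variant, a new driver is a new class and existing code isn't edited. In the static variant, a new driver means a new enumerator plus a new case in every switch, but there's no indirect call and the compiler sees all the cases at once.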

Casey had another idea for how to implement the I/O interface with enum:

enum raw_device_operation : u32
{
    RIO_none,
    RIO_read,
    RIO_write,
    RIO_get_name,

    RIO_private = 0x80000000,
};

struct raw_device_id
{
    u32 ID;
};

struct raw_device_request
{
    size_t Offset;
    size_t Size;
    void *Buffer;
    raw_device_operation OP;
    raw_device_id Device;

    u64 Reserved64[4];
};

struct raw_device_result
{
    u32 error_code;
    u32 Reserved32;
    u64 Reserved64[7];
};

Casey highlighted that this approach saves programmers more time than following the clean code principles. Moreover, he pointed out two particular clean code points that waste a lot of time:

— You favor having one class for each type of thing (in this case a driver), with one virtual member function per operation that the thing does (in this case read, write, and get_name)

— Operation permutations are not supposed to pass through functions — so passing an enum to a function that says what to do is bad, you should have one function per thing that gets done. I've seen this mentioned multiple times — not just for switch statements, but also when you say functions with "if" statements on function parameters in them, that modifies what the function does, you're supposed to rewrite that as two functions.
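A driver written against the enum-based interface could look roughly like the sketch below. The structures repeat the ones Casey proposed; everything else (memory_device_handler, memory_storage, and the error code values) is an assumption made for illustration and isn't taken from the discussion.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

using u32 = std::uint32_t;
using u64 = std::uint64_t;

enum raw_device_operation : u32 {
    RIO_none,
    RIO_read,
    RIO_write,
    RIO_get_name,
    RIO_private = 0x80000000,
};

struct raw_device_id { u32 ID; };

struct raw_device_request {
    std::size_t Offset;
    std::size_t Size;
    void* Buffer;
    raw_device_operation OP;
    raw_device_id Device;
    u64 Reserved64[4];
};

struct raw_device_result {
    u32 error_code;
    u32 Reserved32;
    u64 Reserved64[7];
};

// Hypothetical error codes; the discussion doesn't define any values.
constexpr u32 RIO_ok = 0;
constexpr u32 RIO_error_unsupported = 1;
constexpr u32 RIO_error_range = 2;

// Hypothetical driver state: a small in-memory "device".
static char memory_storage[64];

// One entry point per driver: the operation arrives as data in the request
// and is dispatched with a switch instead of a virtual call.
raw_device_result memory_device_handler(const raw_device_request& request) {
    raw_device_result result = {};
    const bool in_range =
        request.Offset <= sizeof(memory_storage) &&
        request.Size <= sizeof(memory_storage) - request.Offset;

    switch (request.OP) {
        case RIO_read:
            if (!in_range) { result.error_code = RIO_error_range; break; }
            std::memcpy(request.Buffer, memory_storage + request.Offset, request.Size);
            break;
        case RIO_write:
            if (!in_range) { result.error_code = RIO_error_range; break; }
            std::memcpy(memory_storage + request.Offset, request.Buffer, request.Size);
            break;
        case RIO_get_name: {
            static const char name[] = "memory_device";
            if (request.Size < sizeof(name)) { result.error_code = RIO_error_range; break; }
            std::memcpy(request.Buffer, name, sizeof(name));
            break;
        }
        default:
            // Unknown or private operations are rejected rather than ignored.
            result.error_code = RIO_error_unsupported;
            break;
    }
    return result;
}
```

With this shape, a new operation is a new enumerator, and drivers that don't know it fall into the default branch instead of failing to compile. That's the kind of flexibility the two quoted clean code rules steer programmers away from.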

Casey also made a case for the greater efficiency of using enum in the above example:

Uncle Bob agreed with the arguments, but he had a few reservations. He said that although each of them was trying to prove his own point, they were really talking about the same thing.

We are two individuals on the same island, the only difference being that I wear a shirt emblazoned with Clean Code, and you wear one emblazoned with "Clean Code".

After going over a few details, Casey said he didn't see eye to eye with Robert Martin, although with further discussion they might have come to the same conclusion.

This concluded the discussion between Casey Muratori and Robert Martin. The audience was left with plenty of food for thought as to who was right.

Since that discussion ended, I've seen a few opinions that clean code has been discredited and is no longer worth mentioning. I think that's completely off base, and so is the opposite view that the criticism can simply be dismissed. Both participants stuck to their positions, but each explained his point of view.

I'd like to note that while reading the discussion, I felt that they misunderstood each other a bit at some points. For instance, when Casey highlighted the flaws in the inheritance hierarchies, Robert Martin never commented on them. Maybe it's all about the discussion format. I'm merely stating my feelings as an observer.

In a nutshell, the clean code concept is still important to me when writing code. It's essential to write it in such a way that it can be used and supported in the future. It's also worth thinking about the code performance. I guess you could say I'm a proponent of the selective productivity that Uncle Bob was talking about. Not every task requires us to squeeze maximum performance out of every line written. I'm exaggerating a bit, but I think you get my point. Even in Casey's article about shapes, I suppose that the effort spent to increase performance by as much as 15 times wasn't necessary.

In addition to my own thoughts, it'd be great to get input from other developers. That's why we'll come back to clean code and performance issues in the second part of the article.
