Neural networks are making their way into our daily lives, trying to make them better. ChatGPT has recently sparked a frenzy of online debate. It can write texts, code, essays and songs. What is more, the chatbot can search for errors in code, but... how well can ChatGPT do it?

Let's see how effectively ChatGPT can find errors in code. For testing the chatbot we will use code snippets from the PVS-Studio static analyzer documentation. The documentation for each diagnostic rule includes samples of correct and incorrect (causing PVS-Studio to issue a warning) code. We will provide a link to the corresponding documentation page in each example. We will also put ChatGPT through its paces on a few real-world project examples.

Although the article mainly focuses on C# code, developers who write in other programming languages may also find the topic interesting.

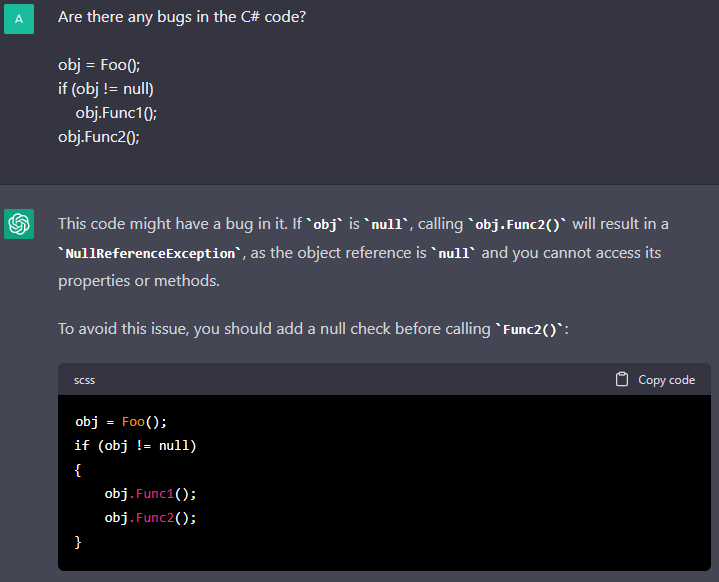

Let's start with the NullReferenceException, which is all too familiar to C# developers.

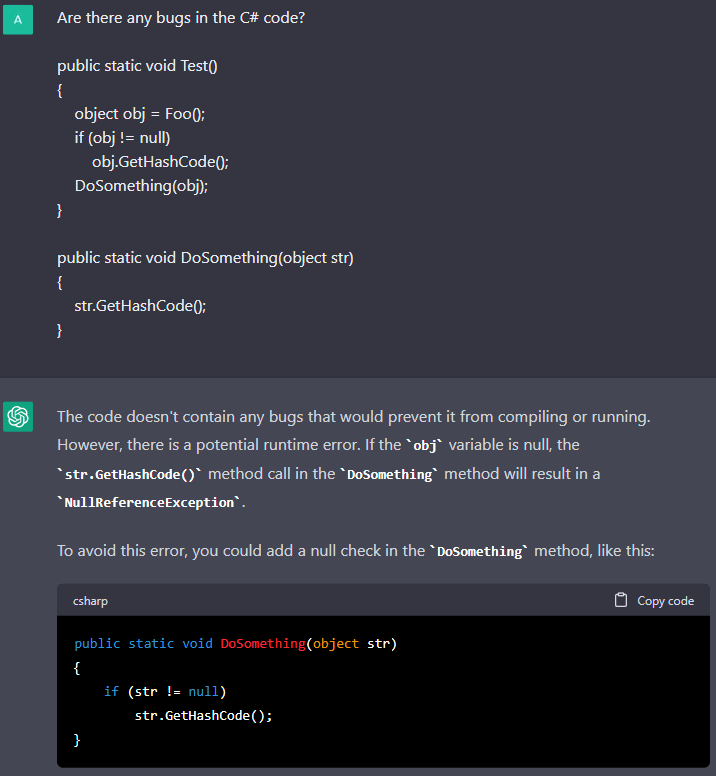

The V3125 diagnostic. ChatGPT managed to find the error in this small code fragment. The chatbot caught that the obj.Func2() call should be placed inside the null check for obj. Let's make this task a little more complicated by adding interprocedural analysis to it.

Well, ChatGPT handled it again. Impressive. I should note that PVS-Studio also issues a warning on this code.
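For readers who cannot see the screenshots, here is a minimal sketch of the kind of defect discussed above. It is a reconstruction for illustration, not the exact snippet that was sent to the chatbot: only obj and Func2 come from the text, while the Worker class, the Func1 method and the Calls counter are assumptions.

```csharp
using System;

// Hypothetical type: only the Func2 name comes from the article text.
class Worker
{
    public int Calls;
    public void Func1() => Calls++;
    public void Func2() => Calls++;
}

static class NullCheckSketch
{
    // Defective shape: obj is checked for null, but then used outside the check.
    public static void Unsafe(Worker obj)
    {
        if (obj != null)
            obj.Func1();

        obj.Func2(); // NullReferenceException when obj is null
    }

    // Corrected shape: both calls are covered by the null check.
    public static void Safe(Worker obj)
    {
        if (obj != null)
        {
            obj.Func1();
            obj.Func2();
        }
    }

    static void Main()
    {
        Safe(null); // nothing happens, no exception

        try
        {
            Unsafe(null);
        }
        catch (NullReferenceException)
        {
            Console.WriteLine("Unsafe(null) threw NullReferenceException");
        }
    }
}
```

The interprocedural variant is the same idea with the unchecked dereference hidden inside a called method, so a checker has to look into the callee to see it.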

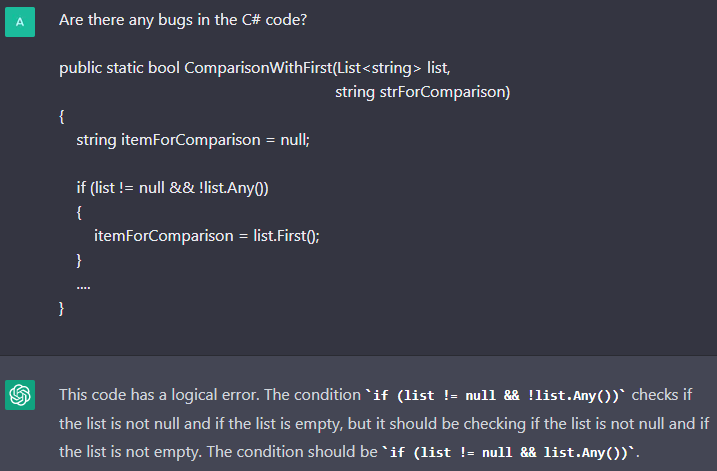

Here is another example:

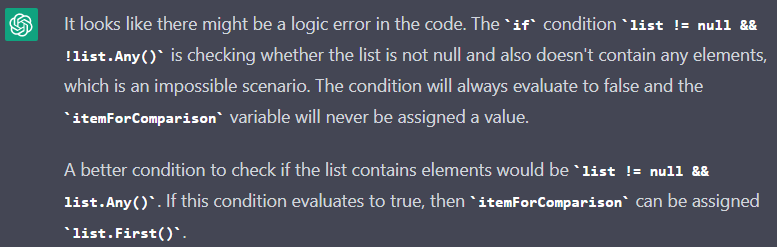

The V3179 diagnostic. The chatbot found an error in this code fragment too, but did not report that an exception would be thrown. Sometimes ChatGPT would respond as follows:

In this case, ChatGPT says that the if condition is always false and the itemForComparison variable will never be assigned a value. In fact, any list can be used in a method, so the chatbot reached the wrong conclusions.

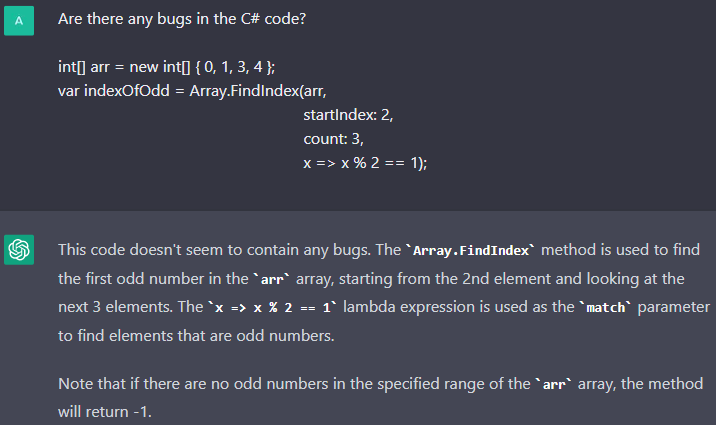

ChatGPT sometimes misses even simple errors, but these errors may not be obvious to everyone. Here is the case:

The V3186 diagnostic. Here the chatbot did not find the error: the arguments passed to the method are incorrect. The array has 4 elements, the start index is 2, and the number of elements to search is 3, so the requested range goes beyond the bounds of the array.
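The arithmetic is easy to check by hand: 2 + 3 = 5, which is greater than the array length of 4. Below is a small sketch of this situation. The original snippet is only visible in the screenshot, so the use of Array.FindIndex, the array contents and the TryFindOddIndex helper are assumptions made for illustration.

```csharp
using System;

static class BoundsSketch
{
    // Hypothetical helper: validates the (startIndex, count) range before searching.
    // Returns false when the range does not fit into the array.
    public static bool TryFindOddIndex(int[] array, int startIndex, int count, out int index)
    {
        index = -1;
        bool rangeIsValid = startIndex >= 0
                         && count >= 0
                         && startIndex <= array.Length - count;
        if (!rangeIsValid)
            return false;

        index = Array.FindIndex(array, startIndex, count, x => x % 2 == 1);
        return true;
    }

    static void Main()
    {
        int[] numbers = { 0, 1, 3, 4 }; // 4 elements

        try
        {
            // Start index 2 plus count 3 gives 5, which exceeds the length of 4.
            Array.FindIndex(numbers, 2, 3, x => x % 2 == 1);
        }
        catch (ArgumentOutOfRangeException)
        {
            Console.WriteLine("The requested range does not fit into the array");
        }

        // A range that fits: elements at indexes 2 and 3.
        if (TryFindOddIndex(numbers, 2, 2, out int found))
            Console.WriteLine(found); // prints 2
    }
}
```

Note that the .NET method reports the problem with an ArgumentOutOfRangeException before any element is read.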

Maybe ChatGPT is unable to detect the error because it is uncommon, and thus the chatbot lacks the necessary information for training. However, the fact that this error is uncommon does not mean that it cannot be made and, therefore, that it should not be looked for.

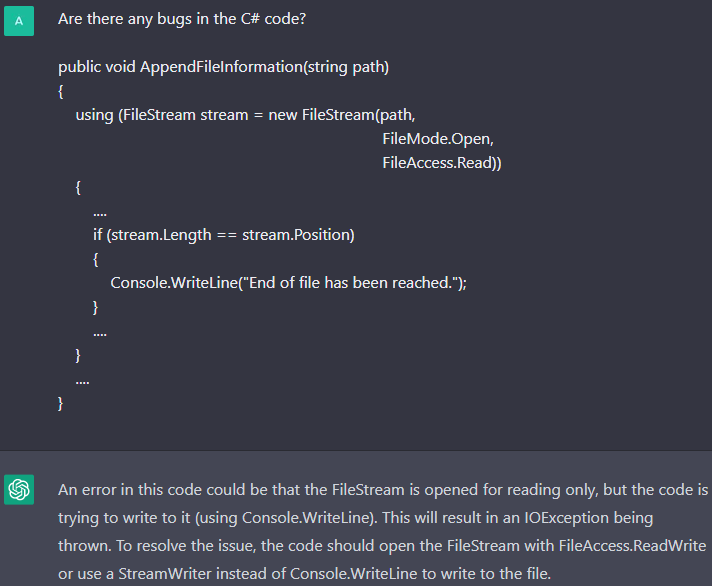

Sometimes ChatGPT reports an error that does not actually exist.

The V3178 diagnostic. We gave the chatbot a correct code snippet and it found a non-existent error in it. It reports that Console.WriteLine is used to write to a file, and that is a problem. Actually, there is nothing like that in this code.

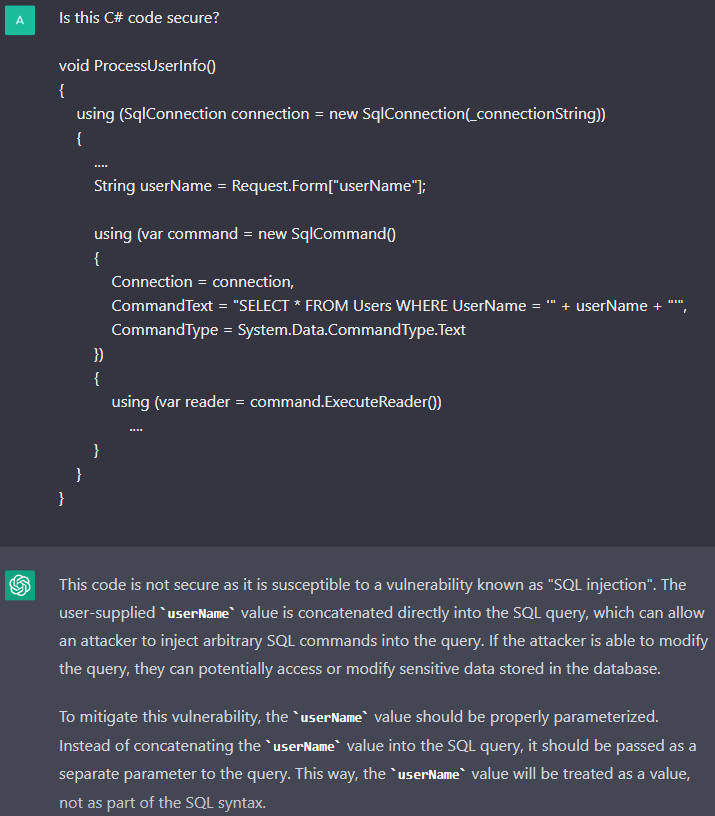

PVS-Studio checks code for potential vulnerabilities. Can ChatGPT do it? Let's use the chatbot to find the SQL injection vulnerability.

The V5608 diagnostic. ChatGPT managed to identify the vulnerability. The chatbot even recommended using parameterized queries to prevent SQL injection.

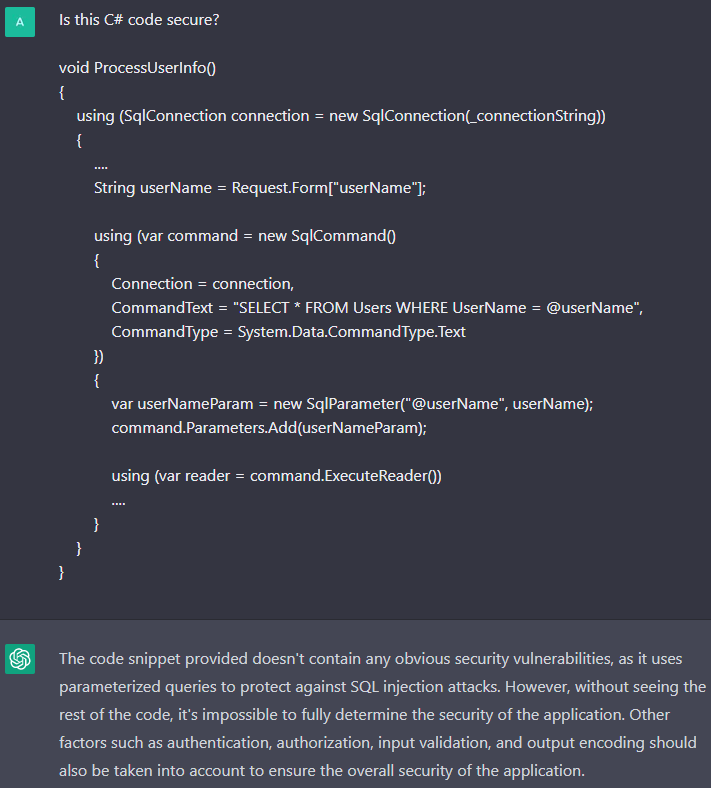

We will now use ChatGPT to analyze code that has already been protected against the vulnerability.

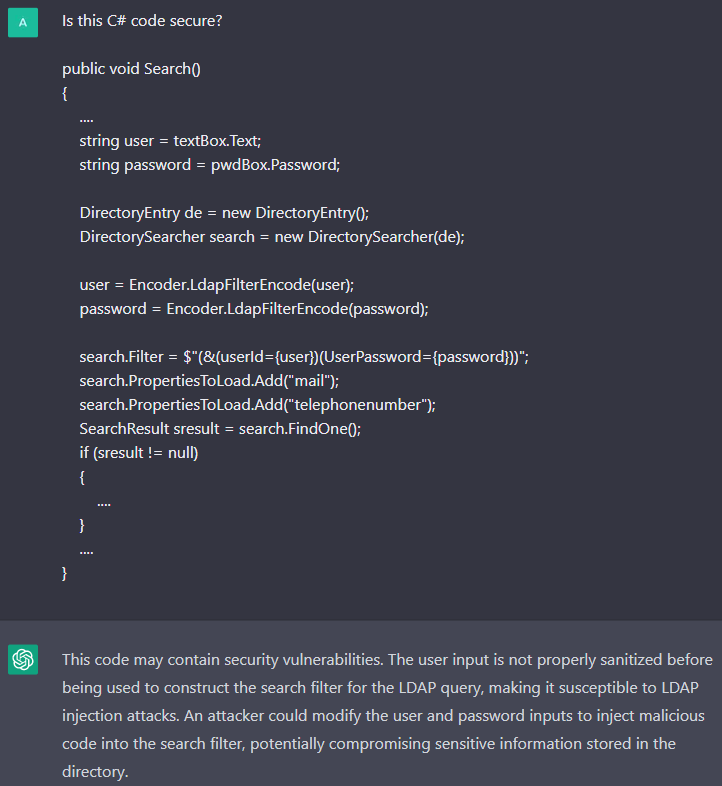

The chatbot assumed that this code is safe, and it really is. However, ChatGPT does not always recognize correct code as correct. Look at the code below, which has already been protected against LDAP injection.

The V5620 diagnostic. Hmm, ChatGPT reported an LDAP injection vulnerability. The chatbot says that user input is not checked or encoded. This, however, is not the case – we called the Encoder.LdapFilterEncode method, which encodes external data.

So far, we have been testing AI on synthetic code. What would happen if we tested it on a real project? Last year we published an article about XXE vulnerabilities: "Vulnerabilities due to XML files processing: XXE in C# applications in theory and in practice". The article had an example of a reproducible vulnerability in the BlogEngine.NET project. This vulnerability can be found with the PVS-Studio analyzer.

Let's take the code snippet from this article and send it to ChatGPT so the chatbot can check it for bugs.

public XMLRPCRequest(HttpContext input)

{

var inputXml = ParseRequest(input);

// LogMetaWeblogCall(inputXml);

this.LoadXmlRequest(inputXml); // Loads Method Call

// and Associated Variables

}

private static string ParseRequest(HttpContext context)

{

var buffer = new byte[context.Request.InputStream.Length];

context.Request.InputStream.Position = 0;

context.Request.InputStream.Read(buffer, 0, buffer.Length);

return Encoding.UTF8.GetString(buffer);

}

private void LoadXmlRequest(string xml)

{

var request = new XmlDocument();

try

{

if (!(xml.StartsWith("<?xml") || xml.StartsWith("<method")))

{

xml = xml.Substring(xml.IndexOf("<?xml"));

}

request.LoadXml(xml);

}

catch (Exception ex)

{

throw new MetaWeblogException("01",

$"Invalid XMLRPC Request. ({ex.Message})");

}

....

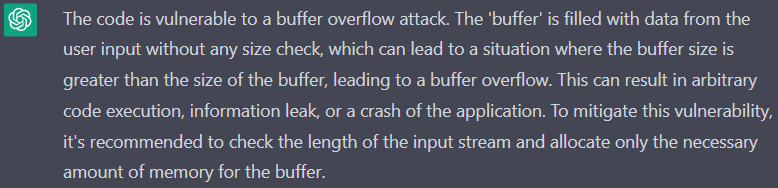

}When we asked the chatbot to find a vulnerability in this code, the ChatGPT analyzer suggested that there was a buffer overflow vulnerability.

In fact, the buffer array is exactly as large as the stream content requires. In theory, a System.OverflowException is possible: it may be thrown when allocating memory for the array if the length of the stream is greater than the maximum size of an array. In practice, an attempt to receive such an input stream throws a System.Web.HttpException with the message "Maximum request length exceeded". This exception is then handled, and memory is never allocated for the array.

After that, we asked ChatGPT to find other vulnerabilities in this code.

The chatbot responded in a vague manner that vulnerabilities may occur in programs and that it is necessary to conduct regular security reviews to identify these potential vulnerabilities. Hmm, I cannot but agree, but that wasn't the answer I was expecting.

For this code, PVS-Studio issues the following warning:

V5614 [CWE-611, OWASP-5.5.2] Potential XXE vulnerability inside method. Insecure XML parser is used to process potentially tainted data from the first argument: 'inputXml'. BlogEngine.Core XMLRPCRequest.cs 41
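To make the warning more concrete, here is a minimal sketch of one common way to harden XML parsing against XXE: prohibit DTD processing and disable the resolver. This is a general mitigation written for illustration. It is not taken from the BlogEngine.NET code base or from the article mentioned above, and the XxeHardeningSketch class, the LoadWithoutDtd method and the sample payloads are assumptions.

```csharp
using System;
using System.IO;
using System.Xml;

static class XxeHardeningSketch
{
    // Hypothetical helper: parses XML while rejecting any document with a DOCTYPE.
    public static XmlDocument LoadWithoutDtd(string xml)
    {
        var settings = new XmlReaderSettings
        {
            DtdProcessing = DtdProcessing.Prohibit, // a DOCTYPE causes an XmlException
            XmlResolver = null                      // never fetch external resources
        };

        var document = new XmlDocument { XmlResolver = null };
        using (var reader = XmlReader.Create(new StringReader(xml), settings))
        {
            document.Load(reader);
        }

        return document;
    }

    static void Main()
    {
        // Sample payload with an external entity (illustrative only).
        string malicious =
            "<?xml version=\"1.0\"?>" +
            "<!DOCTYPE r [<!ENTITY x SYSTEM \"file:///etc/hostname\">]>" +
            "<r>&x;</r>";

        try
        {
            LoadWithoutDtd(malicious);
        }
        catch (XmlException)
        {
            Console.WriteLine("Document with a DTD was rejected");
        }

        var benign = LoadWithoutDtd("<methodCall><methodName>ping</methodName></methodCall>");
        Console.WriteLine(benign.DocumentElement.Name);
    }
}
```

Whether a given XmlDocument is vulnerable by default depends on the target framework version, which is one reason why an explicit configuration like the one above is easier to review.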

It's worth saying that my colleague managed to find this vulnerability using ChatGPT. That shows how the variability of chatbot responses can affect the results of the testing.

Note. A tool that produces results by chance is not a good choice for a developer. Imagine that you changed the code slightly and the warning vanished. What does it mean? Have you fixed the error? Or is the error simply not being reported now?

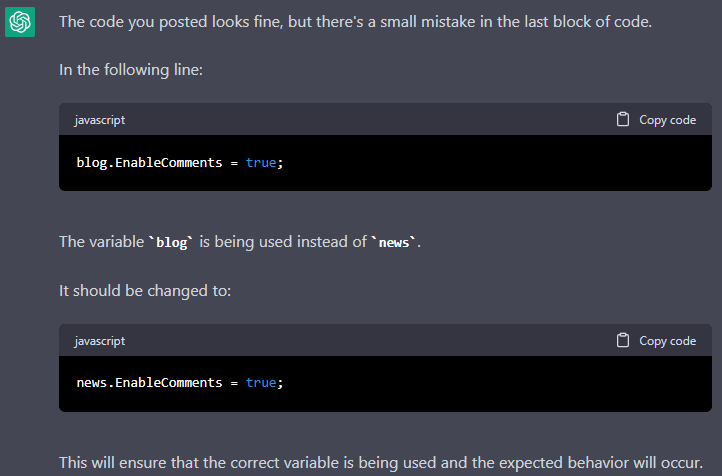

What about copy-paste errors? How does the chatbot handle them? Here's an example of an error from the article on how we used PVS-Studio to detect errors in ASP.NET Core projects. The Piranha CMS project's copy-paste error was ranked seventh in the error rating.

```csharp
public override async Task InitializeAsync()
{
  using (var api = CreateApi())
  {
    ....
    // Add archive
    var blog = await BlogArchive.CreateAsync(api);
    blog.Id = BLOG_ID;
    blog.SiteId = SITE_ID;
    blog.Title = "Blog";
    blog.EnableComments = true;
    blog.Published = DateTime.Now;
    await api.Pages.SaveAsync(blog);

    var news = await BlogArchive.CreateAsync(api);
    news.Id = NEWS_ID;
    news.SiteId = SITE_ID;
    news.Title = "News";
    blog.EnableComments = true;     // <=
    news.Published = DateTime.Now;
    await api.Pages.SaveAsync(news);
    ....
  }
}
```

Before we sum up, I'd like to share some thoughts about AI.

There's an issue that concerns the safety of using artificial intelligence systems. The source code has to be sent somewhere. Today you send your code to the AI system, and tomorrow someone gets your code as an answer to their question. Your code, which contains all the vulnerabilities of your project or company product. Amazon and Microsoft employees have already been asked not to share confidential information with ChatGPT.

Also, any data that AI generates in response should be double-checked. It's good when someone is familiar with the subject and can evaluate the accuracy of the information provided. However, someone unfamiliar with the subject may take the AI's incorrect responses at face value. If a user does not check the information provided, it may result in a number of problems, mistakes, and even human casualties (in some areas).

Nonetheless, I was greatly surprised by ChatGPT's capabilities. We once asked the chatbot to find an error in the article's first example and then generate the results in the SARIF format, just for the lulz, of course. There were no words to describe our surprise when ChatGPT actually did it.

I believe that these AI models will not replace static analysis, but can enhance it. AI can be used to:

The developer's extensive knowledge and experience, however, cannot be replaced.

I believe that ChatGPT can be a useful tool and a starting point for further work. The chatbot, for example, can be used to write a response to a client, write a small note, or even a small fragment for this article :).

Of course, in this article we compared the PVS-Studio static analyzer and ChatGPT more out of curiosity and for entertainment than as actual research. Obviously, ChatGPT has a limited amount of data about the program being checked. The static analyzer has an easier task because it is given a complete code representation as well as various types of semantic information. In general, the static analyzer has more data for analysis. This helps the analyzer identify some pretty complicated issues that are not always obvious at first glance.

Moreover, searching for errors involves more than just running the analysis and issuing warnings. It also involves the infrastructure built around the analyzer: IDE plugins that allow quick navigation and filtering, CI integration, support for game engines, ease of use in legacy projects, and many other features that are already integrated into the analyzer. For example, it is not that easy to prove the chatbot wrong (it may still disagree), while in the plugin you can simply mark any warning as false.

Thank you for reading, and I hope this article sated your curiosity :). For those who are interested in this topic, I also suggest reading the article "Machine learning in static analysis of program source code". It describes the nuances of combining artificial intelligence and static analysis methodology. The arguments given in that article are still valid today. In comparison to traditional static code analyzers, using AI for bug and vulnerability detection seems to be more like playing a game.

Goodbye to all the readers of the article! Thank you for taking the time to read this. I hope the information provided was helpful and informative. If you have any further questions, feel free to ask. Have a great day!

– ChatGPT
