Automated UI tests are something that even experienced programmers are wary of. At the same time, the technology behind this type of test is nothing extraordinary and, in the case of Visual Studio Coded UI Tests, is implemented as an extension of the integrated unit-testing framework Visual Studio Team Test. In this article, I'd like to talk about UI testing in general and our own experience of using Visual Studio Coded UI Tests in the development of PVS-Studio in particular.

First of all, let's figure out why UI tests are not as popular with developers as, say, classic unit tests are.

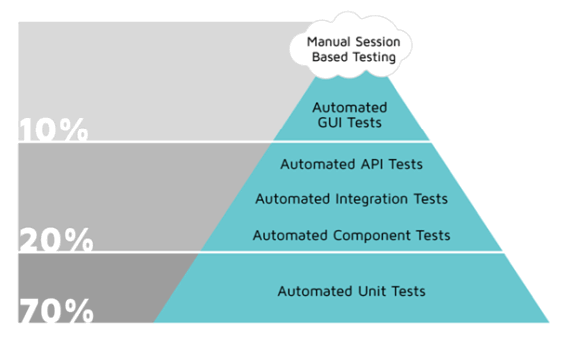

Many so-called "testing pyramids" can be found on the Internet, which show the optimal distribution of tests across the application levels. They all look nearly the same and convey one common idea: keep as many of your tests as possible as close to the code as possible, and as few as possible far from it. Here's an example of one such pyramid, which includes additional tips on test distribution in percent.

Unit tests form the base of the pyramid. Indeed, they are easier to write at the development phase and take very little time to run. At the opposite end, at the top of the pyramid, are automated UI tests. You don't want to have many of them as they are difficult to create and take quite a while to run. Besides, it's not always easy to choose the right person to build these tests, since they are supposed to simulate user actions. This all has little to do with the application code, so developers don't take on this job willingly. To generate good automated UI tests without (or with little) programmer assistance, you need commercial software tools. With all these factors combined, programmers will typically content themselves with a one-time manual test run for a new feature rather than build UI tests. Moreover, UI tests are extremely costly not only at the development phase but also at all the later phases of the application life cycle. Even a slight change in the UI may break lots of tests and force you to fix them.

I'd like to note that at present, our test system meets most of these recommendations. The number of automated GUI tests (45) makes up about one tenth of the total number of tests for PVS-Studio. We don't have many unit tests, but they are complemented by a number of other test systems.

In the beginning, the PVS-Studio analyzer was an application for detecting bugs specific to the porting of C/C++ code to 64-bit platforms. It actually had an entirely different name, too: Viva64. The analyzer's development history is told in detail in the article "PVS-Studio project - 10 years of failures and successes". Once integrated into Visual Studio 2005 as a plugin, PVS-Studio acquired a graphical user interface, which was in fact the interface of the Visual Studio IDE itself with an additional menu to access the analyzer's functionality. That menu consisted of just two or three commands, so there wasn't much to test. Well, Visual Studio didn't have any tool set for GUI testing back then anyway.

Everything changed with the release of Visual Studio 2010, which got an integrated UI-test development system known as Visual Studio Coded UI Tests (CUIT). Based on the unit-testing system Visual Studio Team Test, CUIT first relied on the MSAA (Microsoft Active Accessibility) technology to access the UI controls. That technology has since evolved into UIA (UI Automation), a full-fledged UI automation model. It provides the testing system with access to the public fields (the object's name, its class's internal name, its current state and place in the UI hierarchy, and so forth) of COM and .NET UI controls, while the testing system enables the programmer to simulate user actions on these controls through the standard input devices (mouse and keyboard). Out of the box, CUIT supports recording of user actions (just like macros in Visual Studio) and automated UI mapping (i.e. gathering the controls' properties and search/access parameters) along with automated control-code generation. You can then easily modify and maintain this data and create your own test sequences with just a little programming skill.

What's more, as I've already said, you can know nothing about programming at all and still make complex smart UI tests, provided you use special commercial tools. And if you don't want to use proprietary testing environments or can't afford them, there are loads of free products and frameworks to choose from. Visual Studio Coded UI Tests is an intermediate solution that allows you not only to build almost entirely automated UI tests but also to write your own test sequences in C# or VB.

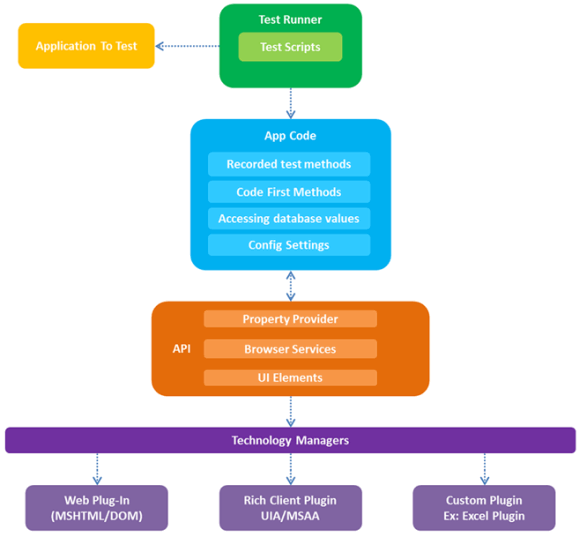

All of this greatly reduces the cost of making and maintaining GUI tests. The framework is quite straightforward and is outlined in the following chart:

Note the so-called "interface adapters" at the lowest abstraction level, which are among the key components. This layer interacts with the end UI controls and can be extended with additional adapters for more functionality. The next layer abstracts the GUI access technologies from the rest of the code, which consists of the access APIs and the testing application's code itself and includes all the components needed to automate the testing. The technology can be extended by adding components to each level as permitted by the framework.

The basic features of CUIT by Microsoft are as follows:

Despite a number of problems, there are some more reasons why CUIT is preferable:

I won't go further into the technicalities of Visual Studio Coded UI Tests since the documentation covers them all in detail. It also offers step-by-step guides on creating simple UI tests based on this system. Oh, and CUIT isn't free: you need to have Visual Studio Enterprise installed to be able to use it.

CUIT is not the only technology available on the market; there are a number of other solutions as well. The alternatives can be free or commercial, and the price doesn't necessarily determine the choice. For example, you may prefer a solution that allows you to build tests without coding them, but such tests may lack flexibility. Another thing to consider is whether the solution supports your testing environment, i.e. operating systems and applications. Finally, the specifics of the application and its interface may also affect your choice. Here are some popular GUI-testing systems and technologies.

Commercial: TestComplete (SmartBear), Unified Functional Testing (Micro Focus), Squish (froglogic), Automated Testing Tools (Ranorex), Eggplant Functional (eggplant), etc.

Free: AutoIt (Windows), Selenium (web), Katalon Studio (web, mobile), Sahi (web), Robot Framework (web), LDTP (Linux Desktop Testing Project); open-source frameworks: TestStack.White + UIAutomationVerify, .NET Windows automation library, etc.

This list is, of course, incomplete, but you have surely noticed that the free solutions are typically focused on specific operating systems or testing technologies. As a rule of thumb, commercial solutions offer more flexibility to meet your specific requirements, make tests easier to develop and maintain, and support more testing environments.

As for us, we didn't have to choose: once Visual Studio 2010 and its Coded UI Tests extension were released, it allowed us to easily add a suite of functional tests for the PVS-Studio plugin's UI to our testing environment.

So, we've been using GUI tests for over 6 years now. The original UI-test suite in Visual Studio 2010 was based on the only technology available at the time, MSAA (Microsoft Active Accessibility). By the release of Visual Studio 2012, MSAA had greatly evolved into what is now known as UIA (UI Automation). We decided to switch to UIA but keep the MSAA-based tests for the Visual Studio 2010 version of our plugin (we maintain plugins for all Visual Studio versions starting with Visual Studio 2010).

As a result, we got two "branches" of UI tests. In the test project, both branches were sharing the UI map and the code. This is how it was implemented (the method resets Visual Studio settings before running the test):

```csharp
public void ResetVSSettings(TestingMode mode)
{
    ....

    #region MSAA Mode
    if (mode == TestingMode.MSAA)
    {
        ....
        return;
    }
    #endregion

    //UIA Mode
    ....
}
```

Every time we made changes to the plugin's interface, we had to modify both branches of the tests accordingly, while adding a new feature would force us to duplicate the UI control on the map - that is, create two different controls for the MSAA and UIA modes. It all took a lot of effort - not only to create or modify a test but also to keep the testing environment stable. This last aspect needs more explanation.

I have noticed that the testing environment's stability is a matter of great concern when testing the GUI. The main reason is that GUI tests are heavily dependent on a set of external factors because they, in fact, simulate user actions, such as pressing keys, moving the mouse pointer, clicking the mouse, and so forth. A lot of things can "go wrong" here. For example, someone may be using the keyboard connected to the test server while the test is running; or the screen resolution may turn out to be too small to display some control, so the testing environment won't see it.

Expectations:

Reality:

A carelessly tuned UI-map control that later can't be found is perhaps the leading cause of failures. For example, when the UI mapper of Visual Studio CUIT creates a new control, it sets the "Equals" search criterion for its properties by default. It means that the property values must match the specified values exactly. It's usually justified, but sometimes tests can be made much more stable by using the less strict search criterion "Contains" rather than "Equals". This is just one example of "fine tuning" in UI testing.
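To make the difference between the two criteria concrete, here is a minimal sketch in plain C#. It is a simplified model of the idea, not CUIT's actual matching code: the names MatchOperator and SearchCriterion are hypothetical, and case-sensitive comparison is an assumption of the sketch. In CUIT itself, the operator is chosen per search property (the PropertyExpressionOperator enumeration has the values EqualTo and Contains).

```csharp
using System;

// Hypothetical stand-in for the two search operators discussed above.
public enum MatchOperator { EqualTo, Contains }

public static class SearchCriterion
{
    // Returns true if the actual property value satisfies the criterion.
    // Assumption of this sketch: ordinal, case-sensitive comparison.
    public static bool Matches(string actual, string expected, MatchOperator op)
    {
        if (actual == null || expected == null)
        {
            return false;
        }

        return op == MatchOperator.EqualTo
            ? string.Equals(actual, expected, StringComparison.Ordinal)
            : actual.IndexOf(expected, StringComparison.Ordinal) >= 0;
    }
}
```

With the expected value "Microsoft Visual Studio", a window titled "TestSolution - Microsoft Visual Studio (Administrator)" satisfies Contains but not EqualTo. A title can pick up a solution name or a suffix like this depending on how the IDE was started, which is exactly the kind of variation that makes a strict criterion fragile.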

Finally, some of your tests may simulate an action followed by waiting for some delayed result like displaying a window. In addition to the control search problem, you'd also have to suspend the playback for some time until the window appears and then sync with it. Some of these problems can be solved with the framework's standard methods (WaitForControlExist and the like), while others require you to invent some tricky algorithms.

We faced such a problem while making a test for our plugin. In this test, we open an empty Visual Studio environment, load a test solution, run a check on it (menu command "PVS-Studio" -> "Check" -> "Solution") and wait for the check to finish. The problem was how to capture that moment. Analysis time may vary depending on the circumstances, so the standard timeouts wouldn't help. Nor could we use the standard means of suspending the test thread and waiting for some control to appear (or disappear) since we had no control to sync with. While the check is running, a progress window appears, but the user could hide it and the check would go on. So, we couldn't rely on that window either (besides, it has the "Keep open when check is over" option), and we wanted to make the algorithm as generic as possible to be able to use it in other tests where we checked projects and waited for the check to complete.

We did find a way out. For as long as the check is running, that very menu command "PVS-Studio" -> "Check" -> "Solution" remains inactive. We just had to poll this command's "Enabled" property at regular intervals (through a UI-map object): once it became active, we would know the check was over.
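The polling approach described above can be sketched roughly as follows. This is not our actual test code: the Polling class and the WaitUntil method are hypothetical names, and the sketch is deliberately independent of CUIT so that it can be compiled on its own.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

public static class Polling
{
    // Calls `condition` every `interval` until it returns true
    // or until `timeout` has elapsed.
    // Returns true if the condition was met, false on timeout.
    public static bool WaitUntil(Func<bool> condition, TimeSpan interval, TimeSpan timeout)
    {
        if (condition == null)
        {
            throw new ArgumentNullException(nameof(condition));
        }

        Stopwatch stopwatch = Stopwatch.StartNew();
        while (true)
        {
            if (condition())
            {
                return true;
            }

            if (stopwatch.Elapsed >= timeout)
            {
                return false;
            }

            Thread.Sleep(interval);
        }
    }
}
```

In a UI test, the condition would be a lambda that reads the "Enabled" property of the UI-map object for the menu command, and the timeout would be a generous upper bound on the analysis time. A real test would probably also need to make sure that the command has actually become disabled before it starts waiting for it to become enabled again; that caveat is an assumption of this sketch.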

So, when you do UI testing, you can't just create tests; you have to carefully fine-tune them in every case. You must understand and know how to sync all the actions in a sequence. For example, you must remember that a context-menu command won't be found until the menu is displayed, and so forth. You must also carefully prepare the testing environment. Only then can you expect your tests to be stable and produce reliable results.

As I already mentioned, we've been improving our UI-test system since 2010. Over this time, we have created a few dozen test sequences and written tons of auxiliary code. When the standalone version appeared, we added tests for it as well. By that time, the old branch of tests for the Visual Studio 2010 plugin had become irrelevant and had been abandoned. However, we couldn't just take that "dead" code out of the project. Firstly, as I showed above, that code was tightly integrated into the test methods. Secondly, more than half of the controls of the existing UI map relied on the old MSAA technology but were reused (rather than duplicated) in many new tests along with the UIA controls (this was possible thanks to technological continuity). The "old" controls were also linked to a large amount of automatically generated code and test methods.

By the fall of 2017, we had reached the point where we needed to revise our UI-test system. The tests worked well most of the time, but some would crash every now and then for unknown reasons. Well, the primary reason was that control search was too troublesome. For each control, we had to traverse the UI-map tree until we reached that control and check its search criteria and other settings. Resetting those settings before running the test would help sometimes. With such a big (and largely redundant) UI map and the amount of "dead" code, that process was taking too much time and effort.

The task was waiting for a hero to tackle it until I was assigned it.

I'll spare you the details and note only that it wasn't hard work - it just required a lot of patience and attention. It took me about two weeks, from start to finish. Refactoring the code and UI map took half that time. The rest of it was spent on stabilizing the tests, which largely came down to fine-tuning the UI-control search criteria (i.e. revising the UI map), as well as some code optimization.

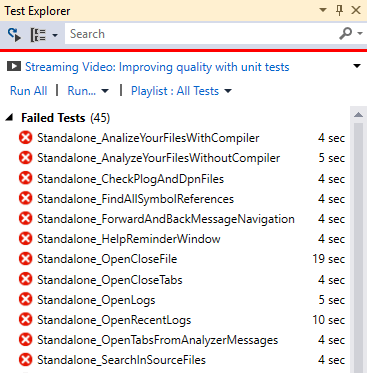

As a result, I managed to reduce the code of the test methods by about 30% and the number of UI-map controls by half. But the greatest achievement was making the UI tests more stable and less demanding. We still get crashes, but now they mainly have to do with changes to the analyzer's functionality or with mismatches (errors). But that's exactly what you need a UI-test system for.

So, our current automated test system for PVS-Studio's UI can be summed up as follows:

Before we finish, I'd like to share with you a list of practices that help create good automated GUI tests. This list is based on our own experience of using this testing technology (some of the practices are also applicable to other testing methods, such as unit tests).

Choose a suitable tool set. Choose the environment, where you will be building and running CUIT, according to the specifics of your application and testing environment. You don't necessarily have to go with commercial solutions, but they usually help solve the task in a very effective and efficient way.

Tune the infrastructure properly. Make sure you spend enough resources on UI mapping. Make it easier for the framework to search for controls by thoroughly describing all their properties and specifying smart search criteria. Provide for further modifications.

Minimize manual work. Wherever possible, do use automated code-generation and sequence-recording tools. They will help significantly speed up the development process and minimize the risk of errors (it's not always easy to find the cause of a crashed test, especially when the mistake is hiding in the framework-handling code).

Make simple and independent smart tests. The simpler your tests, the better. Try to create an individual test case for each control and scenario. You also want to keep your tests independent from each other. One crashed test shouldn't affect the whole process.

Choose clear names. Use prefixes in the names of tests of the same pattern. Many environments support filtering by name. Group tests whenever possible, too.

Isolate your test execution environment. Run tests on a dedicated server with as few interactions from outside as possible. Disable all the external input devices; provide the required screen resolution or use a dummy plug to simulate a connection to a high-resolution monitor. When running tests, make sure there are no other applications sitting in the background and potentially interacting with, say, the desktop and displaying messages. Carefully schedule test runs and assume they will take the longest time possible.

Analyze the reports. Have your reports output in a straightforward form. Use continuous integration systems to manage the tests and promptly get and analyze the test results.
