History is the experience that helps each new generation avoid repeating old mistakes. But in programming, as in other rapidly developing fields, this ideal scenario is not always possible. Why? Because new languages keep appearing, many processes are becoming more complex, and machines are getting smarter. In this article, I'll tell you two real stories. What do they have in common? First, the time and place: both happened in the USSR in 1983. Second, the people: both stories could have ended very differently had their main characters shown their best or their worst traits. Third, programming, of course; otherwise this article would not be on our blog.

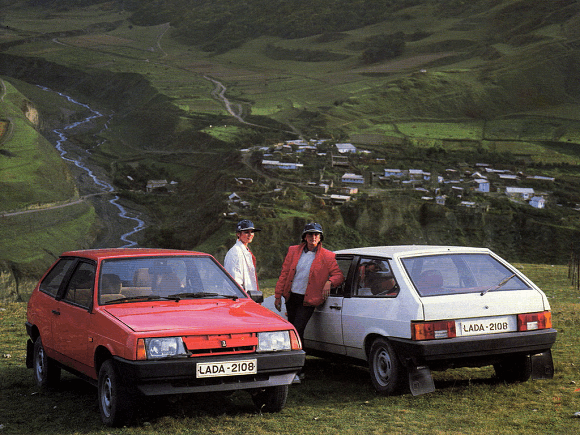

The Russian domestic auto industry is often criticized by the media. Having driven its cars for some time, I have to agree: the quality of our cars could be better. I am not going to speculate on the causes of this situation, since I lack the necessary competence. Instead, I'll just tell the story of a bug at AvtoVAZ (Volzhsky Avtomobilny Zavod, or Volga Automobile Plant).

This story has already been published on various sites, so I will tell it briefly. In 1983, a talented mathematician, Murat Urtembayev, got a job at AvtoVAZ. The young specialist was full of enthusiasm, but his superiors were not particularly impressed with him and gave him an ordinary position.

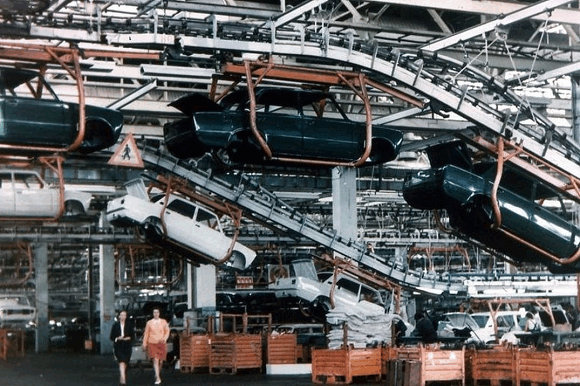

He then decided to prove that he was a great programmer who deserved some respect (a promotion, spa holidays, a good salary, etc.). His plan was as follows: he wrote a patch for the counter program responsible for the cyclic timing with which components were fed to the conveyor line. The patch would make the automated equipment fail, so the necessary parts would not arrive on time, throwing the production line into chaos. At that very moment, Murat would volunteer to fix the issue and show his bosses the unique abilities of their new, undervalued employee.

But something went wrong. Slipping in the floppy disk with the "virus" was easy. The patch was supposed to take effect on his first day back from vacation, which would give him an additional alibi, and then he could heroically save AvtoVAZ. But apparently Murat really was not a very good programmer, because the equipment started glitching two days before D-Day. Parts were arriving at the conveyor in the wrong order and at the wrong time. Engineers frantically searched for a hardware fault; only later did it occur to them that the problem might be a bug in the code. The faulty code fragment was found, but the program kept glitching.

In the end, either conscience or vanity drove Murat Urtembayev to confess. He was convicted of hooliganism, given a suspended sentence, and ordered to reimburse the cost of two "Zhiguli" cars.

The second story is also widely known, although not all of its facts are available to the general public. Why? Because it is the story of how a nuclear war almost started. In September 1983, the international situation was far from favorable. U.S. President Ronald Reagan had openly called the Soviet Union an "evil empire". Any provocative action by either side could snap the taut string of a fragile peace and start a war.

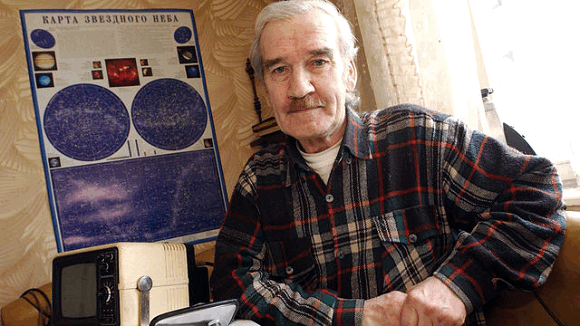

100 km from Moscow, a Soviet military officer, Stanislav Yevgrafovich Petrov, began his night shift at the 'Serpukhov-15' command post. The Lieutenant Colonel was personally monitoring the situation shown on the screen, based on satellite data.

The area under surveillance was US territory. Suddenly, a warning appeared on the screen... The United States had launched a missile! The siren started howling and the system ran an automatic check. Everything was correct; there was no mistake! Petrov had to make a carefully considered decision. He had two options: confirm the attack, which would set the Soviet retaliation in motion, or declare the alarm false.

His superiors, who could see the same data from Petrov's computer, were at a loss.

Why was Stanislav Yevgrafovich hesitating instead of confirming the attack? They phoned him, and the Lieutenant Colonel reported: "The information is false".

Petrov's intuition and experience did not fail him. It was later established that the false alarm had been caused by external factors that had not previously been taken into account: the sensors had picked up sunlight reflected from high-altitude clouds (source). The computer failed to recognize the signal as false.

Petrov's actions drew a mixed reaction. The Soviet system valued bureaucratic procedure, and Stanislav Yevgrafovich had acted against it, so by that logic he should have been punished. On the other hand, everybody was well aware that if he had pushed the alarm button, the USSR would have launched its missiles, and this time they would have been real missiles, not just erroneous pixels on a display. In the end, Petrov received a verbal reprimand. Soon afterwards he left the army, still a Lieutenant Colonel.

His act was viewed quite differently in the US. There he was recognized as a hero and received an award at the UN "in recognition of the part he played in averting a catastrophe." Yet Stanislav Yevgrafovich Petrov still lives in Russia, in a modest apartment in Fryazino. He does not consider himself a hero, but willingly talks about 1983.

If this Soviet officer's story interests you, you can watch the documentary "The Man Who Saved the World".

Modern industrial facilities, and military ones even more so, rely heavily on software. Almost all processes are automated, so human presence is becoming less necessary. At the same time, the requirements for software quality are rising. That's why additional code analysis tools are highly recommended, and I cannot help mentioning the PVS-Studio analyzer. :)

We should also analyze the security of our code; in particular, we should use specialized tools to search for undeclared capabilities (software backdoors). This helps prevent situations like the one with the AvtoVAZ hacker.

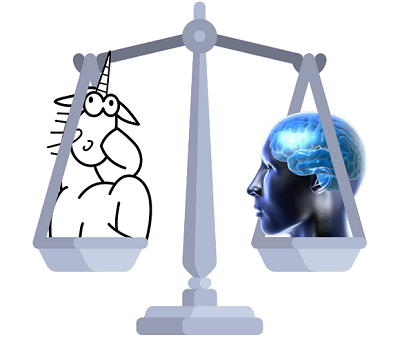

In conclusion, no program has intuition, emotions, or free will. So can we really say that programs make unbiased decisions? Can we fully trust artificial intelligence? Had the program's algorithm been followed, the USSR would have launched its missiles in 1983. So, dear readers, I invite you to reflect on this question: "Can we trust a program to make an important decision, or should that remain the responsibility of human beings?"
