Hi everyone. Today I want to tell you how we test our C/C++ static code analyzers, CppCat and PVS-Studio, before releasing new versions. If you are not yet familiar with these products, I recommend getting acquainted with them right now. No, I'm not trying to talk you into installing these wonderful tools; it's just that it will make this article quicker and easier to follow :-).

Unfortunately, we are no longer developing or supporting the CppCat static code analyzer. Please see our separate announcement for details.

So, we currently have two tools for analyzing the source code of C/C++ projects, each designed to point out potential issues in code. Every practicing developer has long known that it is impossible to avoid all errors. There are many ways to deal with this, and each team picks the one best suited to the project it is developing and/or maintaining. Of course, every approach has its pros and cons; you just need to take the path of least effort and choose the one that suits you best. Some teams "cover" the entire codebase with tests and literally "drag" every piece of new code along with new tests, which can increase development time several times over. If you have enough money and time for that, it is the best way, but that is rarely how things are in real life: neither project managers nor customers are willing to pay extra, and explaining the benefits of this approach to people far from programming is like trying to reconcile a cat and a dog.

There is a more sensible approach: TDD (Test-Driven Development), where tests are written first and the code is written after them. This way, you can be sure you test only what you need. If some tests never fail, that is a sign there are too many of them. On reflection, you will likely conclude that libraries and in-house frameworks are best covered by unit tests, while business logic is best covered by integration tests. This is the safest and most painless approach for both developers and customers.

Unfortunately, there are exceptions to this rule, and then you have to look for quite different ways to test your products. This happens when you have several components that don't fit into a common architecture and can exist completely independently. That is exactly the case with CppCat and PVS-Studio. We can, of course, debug them in the Visual Studio experimental instance, but this approach doesn't show us the whole picture; in other words, it doesn't suit us.

But we still need testing! That's why we have developed a separate standalone window application that lets us make sure our analyzers work correctly (Figure 1).

Note that this is far from the only testing method we use: three years ago, we wrote an article about the methods we used when developing our analyzer.

Until recently, the tester could only be used with PVS-Studio, and it served us quite well. When CppCat was created, we had to extend the tester so that it could work in two modes. Writing a separate standalone application specially for that purpose was not the best option, so we integrated both modes into one program. Note that this is not a new testing method but just a modification of one of the many methods described in the article mentioned above.

Figure 1 - The tester's general interface

I invite you to take a closer look at the main features of the program's interface to get a better understanding of the whole process:

Figure 2 - Current launch settings

The drop-down list of tester launch settings (Figure 2) lets us choose which settings to use for the current run. All of them are stored in ordinary XML files. We have a number of different configurations: settings customized for running in the Visual Studio 2010 IDE, settings that use only the Visual C++ preprocessor and compiler, the default settings, and so on.

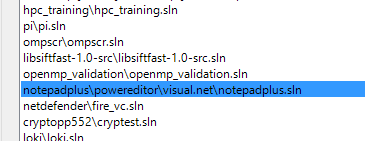

Figure 3 - Project list

The project list (Figure 3) lets us choose which of the standard test projects to run our analyzers on.

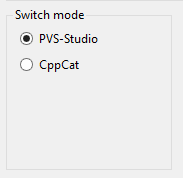

Figure 4 - Launch modes

The launch mode option (Figure 4) is self-explanatory: it specifies which of the analyzers we want to test.

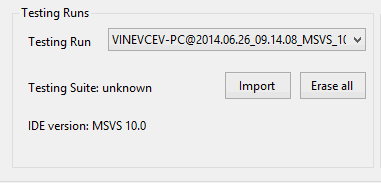

Figure 5 - Test launch chronology

The test launch chronology (Figure 5) lets us select a previous test run from the drop-down list and see what has changed since then.

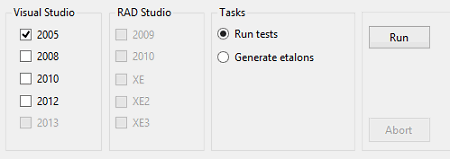

Figure 6 - Launch parameters

Launch parameters (Figure 6) specify which IDE the analysis should run in. The radio buttons in the Tasks area specify what exactly we want to do: either run tests or generate etalons (more on these below).

To make sure our analyzers work properly, we compare the diagnostics generated in the current run against etalon ones. Etalon diagnostics are reference results: a saved set of warnings that we consider correct for a given test project. They are stored in ordinary XML files. Any difference revealed by this comparison means the analyzer has failed the test, and we need to check the latest code changes that could have caused the incorrect behavior.
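The etalon mechanism boils down to a set difference between two collections of warnings. Below is a minimal Python sketch under stated assumptions, not the tester's real implementation: the `Diagnostic` fields, the `<Etalon>`/`<Diagnostic>` XML layout, and the functions `etalon_to_xml`, `etalon_from_xml` and `compare` are all hypothetical, and the diagnostic codes and messages are purely illustrative. The two tasks from the Tasks area map onto it naturally: "generate etalons" serializes the current run, while "run tests" loads the etalon and diffs it against the current run.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, NamedTuple


class Diagnostic(NamedTuple):
    """One analyzer warning. These fields are an assumption for this sketch."""
    file: str
    line: int
    code: str      # diagnostic number, e.g. "V501" (illustrative)
    message: str


def etalon_to_xml(diags: Iterable[Diagnostic]) -> str:
    """'Generate etalons' task: serialize a run using a hypothetical XML layout."""
    root = ET.Element("Etalon")
    # Sorting keeps the saved file stable, so diffs between etalon versions stay readable.
    for d in sorted(diags):
        ET.SubElement(root, "Diagnostic", file=d.file, line=str(d.line),
                      code=d.code, message=d.message)
    return ET.tostring(root, encoding="unicode")


def etalon_from_xml(text: str) -> set[Diagnostic]:
    """Load a previously saved etalon back into a set of diagnostics."""
    root = ET.fromstring(text)
    return {
        Diagnostic(e.get("file"), int(e.get("line")), e.get("code"), e.get("message"))
        for e in root.iter("Diagnostic")
    }


def compare(etalon: set[Diagnostic],
            current: set[Diagnostic]) -> tuple[list[Diagnostic], list[Diagnostic]]:
    """'Run tests' task: any non-empty result means the analyzer failed."""
    missing = etalon - current   # warnings the analyzer stopped issuing
    new = current - etalon       # warnings that appeared since the etalon was saved
    return sorted(missing), sorted(new)


if __name__ == "__main__":
    saved = etalon_to_xml([
        Diagnostic("a.cpp", 10, "V501", "Identical sub-expressions"),
        Diagnostic("b.cpp", 42, "V547", "Expression is always true"),
    ])
    current = {
        Diagnostic("a.cpp", 10, "V501", "Identical sub-expressions"),
        Diagnostic("c.cpp", 7, "V522", "Null pointer dereference"),
    }
    missing, new = compare(etalon_from_xml(saved), current)
    print("missing:", missing)
    print("new:", new)
    print("PASSED" if not (missing or new) else "FAILED")
```

Treating each run as a set makes the check independent of the order in which warnings are emitted. Whether the line number belongs in the comparison key is a design choice: including it catches warnings that move, but it also makes the check sensitive to any edit in the test projects' sources.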

Since the tester was originally designed for PVS-Studio and was not meant to be extended, we ran into a number of issues when adapting it to the second plugin. Without going into detail, I'll just say that CppCat and PVS-Studio work differently, which is why maintaining the tester gets harder with every new release.

In the near future, we are going to redesign the tester from scratch, focusing on usability and on making it as independent as possible from the analyzers' behavior. The goal is to make our internal testing tool more convenient to use and, as a result, to improve the quality of the tools we ship to users.
