The solution of a task can be parallelized at several levels. There are no sharp boundaries between these levels, and it is often difficult to assign a particular parallelization technology to one of them. The division given here is rather loose and serves to demonstrate the diversity of approaches to parallelization.

Parallelization at the task level is quite often the easiest and the most efficient. It is possible when the task being solved naturally consists of independent subtasks, each of which can be solved separately. A good example is compressing an audio album: each track can be processed separately, since it does not depend on the others.
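
The album example can be sketched in C++ roughly as follows. This is a minimal sketch under stated assumptions, not a real encoder: `encode_track` and `encode_album` are hypothetical names, and run-length encoding of a string merely stands in for audio compression. What matters is that each track becomes its own task.

```cpp
#include <cstddef>
#include <future>
#include <string>
#include <vector>

// Hypothetical stand-in for a real audio encoder: run-length encodes
// a track given as a string of samples ("aaab" -> "3a1b").
std::string encode_track(const std::string& samples) {
    std::string out;
    for (std::size_t i = 0; i < samples.size();) {
        std::size_t j = i;
        while (j < samples.size() && samples[j] == samples[i]) ++j;
        out += std::to_string(j - i);  // run length
        out += samples[i];             // repeated sample
        i = j;
    }
    return out;
}

// Task-level parallelism: every track is an independent task.
std::vector<std::string> encode_album(const std::vector<std::string>& tracks) {
    std::vector<std::future<std::string>> jobs;
    jobs.reserve(tracks.size());
    for (const std::string& track : tracks) {
        // std::launch::async asks for each task to run on its own thread.
        jobs.push_back(std::async(std::launch::async,
                                  [&track] { return encode_track(track); }));
    }
    std::vector<std::string> encoded;
    encoded.reserve(tracks.size());
    for (auto& job : jobs) {
        encoded.push_back(job.get());  // wait for the task; track order is kept
    }
    return encoded;
}
```

Because the tasks share no data, no synchronization is needed beyond waiting for each result.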

The operating system demonstrates task-level parallelism when it runs programs on different processors of a multi-core machine. If one program plays a movie and another is a P2P client, the operating system easily arranges for them to run in parallel.

Other examples of parallelization at this level of abstraction are parallel compilation of files in Visual Studio 2008 and batch data processing.

As mentioned above, this type of parallelization is quite easy and, in a number of cases, rather efficient. But it is not applicable to a homogeneous task. The operating system cannot speed up a program that uses only one processor, however many cores are available. A program that splits video encoding into two tasks, one for sound and one for image, gains nothing from a third or fourth core. To parallelize homogeneous tasks, it is necessary to go one level down.

The name of the "data parallelism" model comes from the fact that the parallelism consists in applying the same operation to many data elements. An archiver that uses several processor cores for compression demonstrates data parallelism: the data is divided into blocks, which are processed (compressed) in the same way on different cores.
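
A minimal sketch of this block-wise scheme, assuming standard C++ threads: the function name `square_all` and the choice of squaring as the uniform operation are illustrative only, with squaring standing in for the per-block work of an archiver.

```cpp
#include <algorithm>
#include <cstddef>
#include <thread>
#include <vector>

// Applies the same operation (here: squaring) to every element,
// giving each worker thread one contiguous block of the data.
void square_all(std::vector<long long>& data, unsigned workers) {
    if (workers == 0) workers = 1;
    // Block size rounded up so that all elements are covered.
    const std::size_t block = (data.size() + workers - 1) / workers;
    std::vector<std::thread> pool;
    for (unsigned w = 0; w < workers; ++w) {
        const std::size_t begin = std::min(data.size(), w * block);
        const std::size_t end = std::min(data.size(), begin + block);
        if (begin == end) break;  // no data left for further workers
        pool.emplace_back([&data, begin, end] {
            for (std::size_t i = begin; i < end; ++i) data[i] *= data[i];
        });
    }
    for (std::thread& t : pool) t.join();  // wait for all blocks
}
```

The blocks do not overlap, so the threads can write to the same vector without locking.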

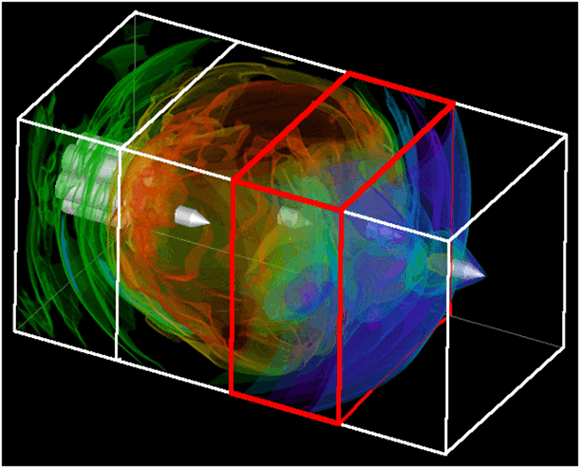

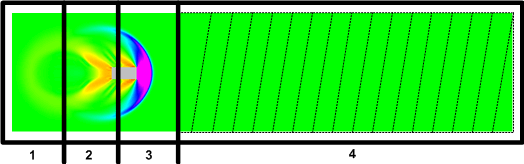

This type of parallelization is widely used in computational modeling. The computational domain is represented as cells that describe the state of the medium at the corresponding points of space: pressure, density, gas composition, temperature, and so on. The number of such cells can be enormous: millions or even billions. Each of these cells must be processed in the same way. The data-parallel model fits very well here, because it keeps every core busy by assigning it a certain set of cells. The computational domain is divided into geometric regions, e.g., parallelepipeds, and the cells in each region are handed to a particular core for processing. In mathematical physics, this type of parallelism is called geometric parallelism.

Although geometric parallelism may seem similar to task-level parallelization, it is more complex to implement. In modeling problems, the data obtained at the boundaries of the geometric regions must be transferred to other cores. Quite often, special methods are also used to speed up the computation by balancing the load between the computing units.

In a number of algorithms, regions where active processes take place take more time to compute than regions where the medium is at rest. As the figure shows, dividing the computational domain into unequal parts can load the cores more evenly. Cores 1, 2, and 3 process small regions in which the body moves, while core 4 processes the large region that has not yet been disturbed. All this requires additional analysis and the creation of a balancing algorithm.
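
One possible balancing rule can be sketched as follows. This is a simplified greedy sketch, not the algorithm used by any particular solver: it assumes a one-dimensional row of cells with a known per-cell cost estimate, and the name `balance_partition` is hypothetical.

```cpp
#include <cstddef>
#include <numeric>
#include <vector>

// Splits a row of cells into `cores` contiguous regions of roughly equal
// total cost. Returns cores + 1 boundaries: core k processes the cells
// with indices in [bounds[k], bounds[k + 1]).
std::vector<std::size_t> balance_partition(const std::vector<double>& cost,
                                           std::size_t cores) {
    const double total = std::accumulate(cost.begin(), cost.end(), 0.0);
    std::vector<std::size_t> bounds{0};
    double running = 0.0;
    for (std::size_t i = 0; i < cost.size() && bounds.size() < cores; ++i) {
        running += cost[i];
        // Close the current region once the running cost reaches this
        // region's cumulative share of the total.
        const double share = total * static_cast<double>(bounds.size()) /
                             static_cast<double>(cores);
        if (running >= share) bounds.push_back(i + 1);
    }
    // The last region (and any unused ones) ends at the end of the domain.
    while (bounds.size() <= cores) bounds.push_back(cost.size());
    return bounds;
}
```

With six expensive cells of cost 3 followed by six quiet cells of cost 1 and four cores, this rule gives the first three cores two cells each and the fourth core the six quiet cells, so every core carries a cost of 6.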

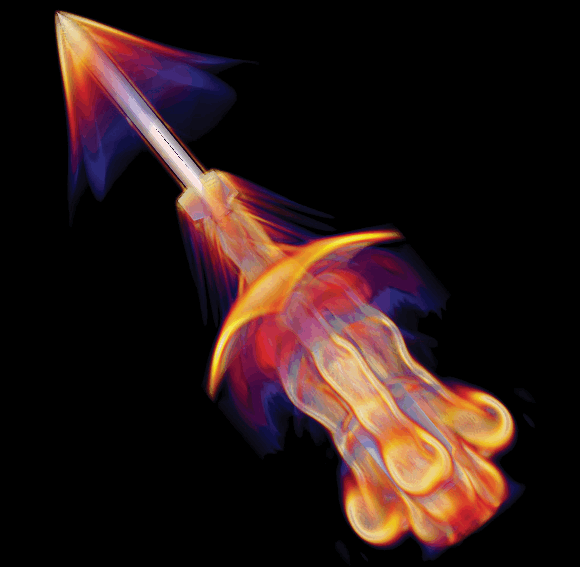

The reward for this added complexity is the ability to model long-running motion, such as a rocket launch, in a reasonable computation time.

The next level is the parallelization of individual procedures and algorithms. Parallel sorting, matrix multiplication, and the solution of systems of linear equations belong here. At this level of abstraction, a parallel programming technology such as OpenMP is a good fit.

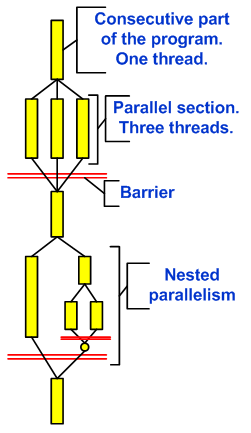

OpenMP (Open Multi-Processing) is a set of compiler directives, library routines, and environment variables intended for programming multithreaded applications on multiprocessor systems. OpenMP uses the fork-join model of parallel execution. An OpenMP program starts as a single thread of execution, called the initial thread. When a thread encounters a parallel construct, it creates a new team of threads consisting of itself and a number of additional threads, and becomes the master of the new team. All members of the new team (including the master) execute the code inside the parallel construct. There is an implicit barrier at the end of the parallel construct. After the parallel construct, only the master thread continues executing the user code. A parallel region can contain other, nested parallel regions.

Thanks to the idea of "incremental parallelization", OpenMP best suits developers who want to quickly parallelize computational programs with large parallelizable loops. The developer does not write a new parallel program but simply adds OpenMP directives to the text of the sequential program, step by step.
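
As an illustration of this incremental style, here is a minimal sketch of a sequential dot product with a single OpenMP directive added. The function name `dot` is illustrative. The code assumes a compiler with OpenMP enabled (for example, the `-fopenmp` option in GCC); without it the pragma is ignored and the loop simply runs sequentially.

```cpp
#include <cstddef>
#include <vector>

// An ordinary sequential loop with one OpenMP directive added.
double dot(const std::vector<double>& a, const std::vector<double>& b) {
    // Use the shorter length so that both vectors are indexed safely.
    const long n = static_cast<long>(a.size() < b.size() ? a.size() : b.size());
    double sum = 0.0;
    // Each thread accumulates a private copy of `sum`; the copies are
    // added together at the implicit barrier at the end of the loop.
    #pragma omp parallel for reduction(+ : sum)
    for (long i = 0; i < n; ++i) {
        sum += a[i] * b[i];
    }
    return sum;
}
```

The body of the loop is unchanged from the sequential version; the directive alone tells the compiler how to divide the iterations among threads. With floating-point data the order of summation may differ between runs, so the last digits of the result can vary slightly.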

Implementing parallel algorithms is rather complicated, which is why there are quite a few parallel libraries that let you assemble programs as if from bricks, without going into the details of how the parallel data processing is implemented.

Instruction-level parallelism is the lowest level: here the processor itself processes several instructions in parallel. At this level there is also batch processing of several data elements by a single processor instruction, which is what technologies such as MMX, SSE, and SSE2 provide. This type of parallelism is sometimes singled out as a still deeper level, bit-level parallelism.
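
Processing several data elements with one instruction can be sketched with SSE intrinsics. This is a minimal sketch assuming an x86 compiler that provides `<xmmintrin.h>`; the function name `add_arrays` and the macro `HAVE_SSE` are hypothetical, and on other platforms the code falls back to the plain scalar loop.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>
#if defined(__SSE__) || defined(_M_X64)
#include <xmmintrin.h>  // SSE intrinsics
#define HAVE_SSE 1      // hypothetical macro used only in this sketch
#endif

// Returns the element-wise sum of two arrays of equal length.
std::vector<float> add_arrays(const std::vector<float>& a,
                              const std::vector<float>& b) {
    assert(a.size() == b.size());
    const std::size_t n = a.size();
    std::vector<float> out(n);
    std::size_t i = 0;
#ifdef HAVE_SSE
    for (; i + 4 <= n; i += 4) {
        const __m128 va = _mm_loadu_ps(&a[i]);  // load 4 floats (unaligned)
        const __m128 vb = _mm_loadu_ps(&b[i]);
        // One instruction performs four additions at once.
        _mm_storeu_ps(&out[i], _mm_add_ps(va, vb));
    }
#endif
    // Scalar tail, or the whole loop when SSE is not available.
    for (; i < n; ++i) out[i] = a[i] + b[i];
    return out;
}
```

In practice an optimizing compiler will often generate such instructions from the scalar loop on its own.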

A program is a stream of instructions executed by the processor. These instructions can be reordered and split into groups that are executed in parallel without changing the result of the program as a whole. This is called instruction-level parallelism. To implement it, microprocessors use several instruction pipelines together with technologies such as branch prediction and register renaming.

Developers rarely deal with this level, and there is seldom any need to: arranging instructions in the order that best suits the processor is the compiler's job. This level of parallelization is of interest only to a small group of specialists who squeeze everything they can out of SSEx, and to compiler developers.

This text does not claim to be a complete treatment of parallelization levels; it simply shows how varied the use of multi-core systems can be. For those who are interested in software development, here are some links to sources on parallel programming:
