Lesson 26. Optimization of 64-bit programs

- Reducing amounts of memory being consumed

- Using memsize-types in address arithmetic

- Intrinsic-function

- Alignment

- Other means of performance enhancement

Reducing amounts of memory being consumed

When a program is compiled in 64-bit mode, it starts consuming more memory than its 32-bit version. This increase often goes unnoticed, but sometimes memory consumption may double. The growth is determined by the following factors:

- more memory needed to store some objects, for example pointers;

- changes of the rules of data alignment in structures;

- growth of stack memory consumption.
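The first two factors can be observed directly with sizeof. The sketch below is only an illustration: the structures Padded and Reordered and the function printLayout are hypothetical names, and the sizes in the comments are the typical ones for common 32-bit and 64-bit ABIs, not guaranteed values.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>

// Hypothetical record. The pointer member requires 8-byte alignment on
// typical 64-bit targets, so padding appears around the int members.
struct Padded {
    int   id;     // 4 bytes, usually followed by 4 bytes of padding on 64-bit
    void* data;   // 8 bytes on 64-bit, 4 bytes on 32-bit
    int   flags;  // 4 bytes, usually followed by 4 bytes of tail padding
};

// Same members with the pointer first: the two ints can share one slot.
struct Reordered {
    void* data;
    int   id;
    int   flags;
};

// Prints the sizes for the platform the code is compiled for.
// Typical values: 12 and 12 on 32-bit, 24 and 16 on 64-bit.
void printLayout() {
    assert(sizeof(Reordered) <= sizeof(Padded));
    std::printf("sizeof(void*)     = %zu\n", sizeof(void*));
    std::printf("sizeof(Padded)    = %zu\n", sizeof(Padded));
    std::printf("sizeof(Reordered) = %zu\n", sizeof(Reordered));
}
```

Calling printLayout() from builds of the same source for both platforms shows how much of the growth comes from pointers and how much from padding.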

We can often tolerate the growth of main memory consumption - the advantage of 64-bit systems is precisely the very large amount of memory available to the user. It is quite okay if a program takes 300 Mbytes on a 32-bit system with 2 Gbytes of memory and 400 Mbytes on a 64-bit system with 8 Gbytes of memory. In relative terms, the program's share of the available memory is about three times smaller on the 64-bit system (roughly 5% instead of 15%). So it is unreasonable to fight the growth of memory consumption we have described - it is easier to add a bit more memory.

But this growth has a disadvantage: performance loss. Although 64-bit program code is faster, fetching larger amounts of data from memory might cancel all the advantages and even reduce performance. Transferring data between memory and the microprocessor (cache) is not cheap.

One way to reduce memory consumption is to optimize data structures, as described in Lesson 23.

Another way of saving memory is to use more compact data types. For instance, if we need to store a lot of integer numbers and we know that their values will never exceed UINT_MAX, we may use the type "unsigned" instead of "size_t".
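A minimal sketch of this idea follows. The helper names payloadBytes, toCompact and compactCopy are made up for the illustration; the assert encodes the assumption from the text that no value exceeds UINT_MAX.

```cpp
#include <cassert>
#include <climits>
#include <cstddef>
#include <vector>

// Bytes occupied by `count` elements of type T (element storage only).
template <typename T>
std::size_t payloadBytes(std::size_t count) {
    return count * sizeof(T);
}

// Narrows one value, checking the precondition that it fits into unsigned.
unsigned toCompact(std::size_t value) {
    assert(value <= UINT_MAX);
    return static_cast<unsigned>(value);
}

// Copies a vector of size_t values into a vector of unsigned values.
std::vector<unsigned> compactCopy(const std::vector<std::size_t>& wide) {
    std::vector<unsigned> out;
    out.reserve(wide.size());
    for (std::size_t v : wide) {
        out.push_back(toCompact(v));
    }
    return out;
}
```

On a typical 64-bit platform payloadBytes<std::size_t>(n) is twice payloadBytes<unsigned>(n), so the compact copy halves the memory used by the elements; on a 32-bit platform the two are usually equal.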

Using memsize-types in address arithmetic

Using the ptrdiff_t and size_t types in address arithmetic might give you an additional performance gain along with making the code safer. For example, using the type int, whose size differs from the pointer size, as an index results in additional data conversion instructions appearing in the binary code. We speak about 64-bit code where the pointer size is 64 bits while the size of the int type remains the same - 32 bits.

It is not so easy to give a brief example to show that size_t is better than unsigned. To be impartial, we have to use the compiler's optimizing capabilities. But two variants of the optimized code often differ too much to demonstrate the difference easily. We managed to create something like a simple example only on the sixth try. The sample is still far from ideal because, instead of the unnecessary data type conversions discussed above, it shows that the compiler can build more efficient code when using size_t. Consider the program code arranging array items in the reverse order:

unsigned arraySize;

...

for (unsigned i = 0; i < arraySize / 2; i++)

{

float value = array[i];

array[i] = array[arraySize - i - 1];

array[arraySize - i - 1] = value;

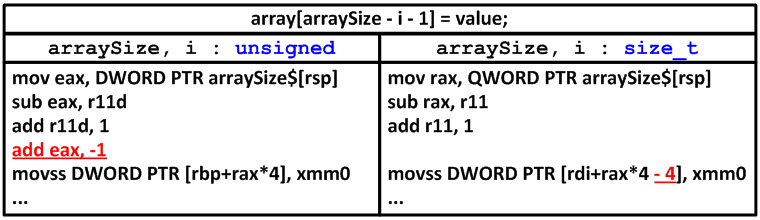

}The variables "arraySize" and "i" in the example have the type unsigned. You can easily replace it with size_t and compare a small fragment of assembler code shown in Figure 1.

Figure 1 - Comparing the 64-bit assembler code fragments using the types unsigned and size_t

The compiler managed to build more compact code when using 64-bit registers. We do not want to say that the code created using the type unsigned (text on the left) will be slower than the code using the type size_t (text on the right). It is rather difficult to compare the speed of code execution on contemporary processors. But you may see from the example that the compiler can build shorter, and potentially faster, code when using 64-bit types.

Now let us consider an example showing the advantages of the types ptrdiff_t and size_t from the viewpoint of performance. For the purposes of demonstration, we will take a simple algorithm for calculating the minimum path length. You may see the complete program code here.
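For readers who want to repeat the comparison, the two variants of the reversal loop can be written as separate functions and compiled with optimization enabled. This is only a sketch: the function names reverseUnsigned and reverseSizeT are ours, and the generated assembler will depend on the compiler and its settings.

```cpp
#include <cassert>
#include <cstddef>

// Variant from the text: the size and the index are 32-bit unsigned values.
void reverseUnsigned(float* array, unsigned arraySize) {
    assert(array != nullptr || arraySize == 0);
    for (unsigned i = 0; i < arraySize / 2; i++) {
        float value = array[i];
        array[i] = array[arraySize - i - 1];
        array[arraySize - i - 1] = value;
    }
}

// The same loop with size_t, which matches the pointer size on 64-bit targets.
void reverseSizeT(float* array, std::size_t arraySize) {
    assert(array != nullptr || arraySize == 0);
    for (std::size_t i = 0; i < arraySize / 2; i++) {
        float value = array[i];
        array[i] = array[arraySize - i - 1];
        array[arraySize - i - 1] = value;
    }
}
```

Both functions produce the same result; only the instructions the compiler emits for the index arithmetic may differ.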

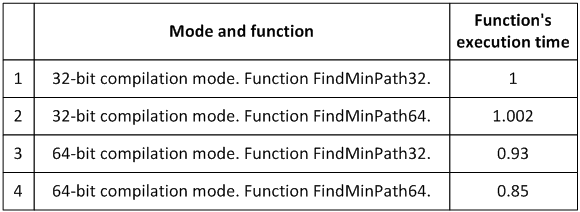

The function FindMinPath32 is written in classic 32-bit style with unsigned types. The function FindMinPath64 differs from it only in that all the unsigned types are replaced with size_t. There are no other differences! I think you will agree that this cannot be considered a complex modification of the program. And now let us compare the execution speeds of these two functions (Table 1).

Table 1 - The time of executing the functions FindMinPath32 and FindMinPath64

Table 1 shows times normalized to the execution time of the function FindMinPath32 on a 32-bit system. This was done for clarity.

The operation time of the function FindMinPath32 in the first line is 1 on a 32-bit system because we took this time as the unit of measurement.

In the second line, we see that the operation time of the function FindMinPath64 is also 1 on a 32-bit system. No wonder, because the type unsigned coincides with the type size_t on a 32-bit system, and there is no difference between the functions FindMinPath32 and FindMinPath64. A small deviation (1.002) only indicates a small error in measurements.

In the third line, we see a performance gain of 7%. We could well expect this result after recompiling the code for a 64-bit system.

The fourth line is of the most interest for us. The performance gain is 15%. It means that by merely using the type size_t instead of unsigned we let the compiler build more efficient code that runs another 8% faster!

It is a simple and obvious example of how data whose size differs from the machine word size slow down algorithm performance. Mere replacement of the types int and unsigned with ptrdiff_t and size_t may result in a significant performance gain. This concerns first of all those cases when these data types are used to index arrays, in address arithmetic and to arrange loops.

Note. Although the static analyzer PVS-Studio is not specially designed to optimize programs, it may assist you in code refactoring and thereby make the code more efficient. For example, you will use memsize-types when fixing potential errors related to address arithmetic, and this allows the compiler to build more optimized code.
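A measurement of this kind can be sketched with a function template parameterized by the index type. Note that walkGrid and timeWalk below are hypothetical stand-ins, not the FindMinPath code from the lesson, and a single timed run like this is only a rough indication; real measurements need repeated runs on large data.

```cpp
#include <chrono>
#include <cstddef>
#include <vector>

// Hypothetical kernel: walks a row-major grid and, for each cell,
// accumulates the cheaper of its right and lower neighbours.
template <typename Index>
unsigned long long walkGrid(const std::vector<unsigned>& grid,
                            Index width, Index height) {
    unsigned long long total = 0;
    for (Index y = 0; y + 1 < height; ++y) {
        for (Index x = 0; x + 1 < width; ++x) {
            unsigned right = grid[y * width + x + 1];
            unsigned down  = grid[(y + 1) * width + x];
            total += right < down ? right : down;
        }
    }
    return total;
}

// Runs the kernel once, stores its result in `out`
// and returns the elapsed time in seconds.
template <typename Index>
double timeWalk(const std::vector<unsigned>& grid,
                Index width, Index height, unsigned long long& out) {
    auto start = std::chrono::steady_clock::now();
    out = walkGrid<Index>(grid, width, height);
    auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double>(stop - start).count();
}
```

Calling timeWalk<unsigned> and timeWalk<std::size_t> on the same grid gives identical results, and on a 64-bit build the two timings can then be compared in the same way as the lines of Table 1.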

Intrinsic-function

Intrinsic-functions are special system-dependent functions that perform actions which cannot be performed at the level of C/C++ code, or that perform them much more efficiently. In effect, they let you get rid of inline assembler, which is often undesirable or impossible to use.

Programs may use intrinsic-functions to create faster code due to the absence of the overhead of calling ordinary functions. The code size will be a bit larger, of course. MSDN gives a list of functions that can be replaced with their intrinsic versions, for example memcpy and strcmp.

The compiler Microsoft Visual C++ has a special option "/Oi" that lets you automatically replace the calls of some functions with their intrinsic-analogs.

Besides automatic replacement of common functions with their intrinsic-versions, you may use intrinsic-functions explicitly in your code. This might be helpful due to these factors:

- Inline assembler is not supported by the compiler Visual C++ in the 64-bit mode while intrinsic-code is.

- Intrinsic-functions are simpler to use as they do not require knowledge of registers and other similar low-level constructs.

- Intrinsic-functions are updated in compilers while assembler code must be updated manually.

- The built-in optimizer does not work with assembler code.

- Intrinsic-code is easier to port than assembler code.

Using intrinsic-functions in automatic mode (with the help of the compiler switch) will give you a few percent of performance gain for free, and "manual" use even more. That is why using intrinsic-functions is quite reasonable.

To learn more about using intrinsic-functions, see the Visual C++ team's blog.
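As an illustration of "manual" use, here is a small sketch that adds two arrays of doubles with SSE2 intrinsics. The function name addArrays and the macro LESSON_HAS_SSE2 are ours; the code assumes an x86/x64 compiler that provides the header emmintrin.h and falls back to a plain loop otherwise.

```cpp
#include <cassert>
#include <cstddef>

#if defined(__SSE2__) || defined(_M_X64)
#include <emmintrin.h>   // SSE2 intrinsics
#define LESSON_HAS_SSE2 1
#else
#define LESSON_HAS_SSE2 0
#endif

// Adds b to a element-wise. With SSE2, two doubles are processed per step;
// the remaining element (if any) is handled by the scalar loop.
void addArrays(double* a, const double* b, std::size_t count) {
    assert((a != nullptr && b != nullptr) || count == 0);
    std::size_t i = 0;
#if LESSON_HAS_SSE2
    for (; i + 2 <= count; i += 2) {
        __m128d va = _mm_loadu_pd(a + i);          // unaligned load
        __m128d vb = _mm_loadu_pd(b + i);
        _mm_storeu_pd(a + i, _mm_add_pd(va, vb));  // add and store back
    }
#endif
    for (; i < count; ++i) {
        a[i] += b[i];
    }
}
```

No registers are named anywhere in the code: the compiler allocates them itself and can optimize across the intrinsic calls, which is not possible with inline assembler.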

Alignment

In some cases it helps to specify the alignment manually to enhance performance. For example, data loaded with aligned SSE instructions must be aligned on a 16-byte boundary. You may do this in the following way:

// 16-byte aligned data

__declspec(align(16)) double init_val[2] = {3.14, 3.14};

// SSE2 movapd instruction

__m128d vector_var = _mm_load_pd(init_val);

The sources "Porting and Optimizing Multimedia Codecs for AMD64 architecture on Microsoft Windows" and "Porting and Optimizing Applications on 64-bit Windows for AMD64 Architecture" cover these issues very thoroughly.
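The same idea can be written with the standard C++11 alignas specifier instead of the Visual C++ specific __declspec(align(16)). The sketch below makes that substitution; the helper names isAligned16 and sumPair are ours, and the SSE2 path is compiled only when the compiler reports SSE2 support.

```cpp
#include <cassert>
#include <cstdint>

#if defined(__SSE2__) || defined(_M_X64)
#include <emmintrin.h>   // SSE2 intrinsics
#define LESSON_ALIGN_SSE2 1
#else
#define LESSON_ALIGN_SSE2 0
#endif

// 16-byte aligned data (alignas is the portable counterpart
// of __declspec(align(16)))
alignas(16) double init_val[2] = {3.14, 3.14};

// Returns true if the address is a multiple of 16.
bool isAligned16(const void* p) {
    return reinterpret_cast<std::uintptr_t>(p) % 16 == 0;
}

// Loads both values with an aligned load and returns their sum.
double sumPair() {
    assert(isAligned16(init_val));
#if LESSON_ALIGN_SSE2
    __m128d v = _mm_load_pd(init_val);  // aligned load, requires 16-byte alignment
    double out[2];
    _mm_storeu_pd(out, v);
    return out[0] + out[1];
#else
    return init_val[0] + init_val[1];
#endif
}
```

If the alignment specifier were removed, _mm_load_pd could fault at run time whenever the array happened to land on an address that is not a multiple of 16.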

Other means of performance enhancement

To learn more about the issues of optimizing 64-bit applications, see the document "Software Optimization Guide for AMD64 Processors".

The course authors: Andrey Karpov (karpov@viva64.com), Evgeniy Ryzhkov (evg@viva64.com).

The rightholder of the course "Lessons on development of 64-bit C/C++ applications" is OOO "Program Verification Systems". The company develops software in the sphere of source program code analysis. The company's site.